So much so that I developed a GTK frontend for it!

It's gotten to the point where it might be useful to others, so I made it into a proper plugin. Feedback welcome!

github.com/icarito/gtk-...

This post walks you through the step-by-step discovery of state-of-the-art positional encoding in transformer models.

gitingest.com

github.com/cyclotruc/gi...

Links to the notes are in the YT description

youtu.be/ZEvXvyY17Ys?...

#datascience #jupyternotebooks

www.answer.ai/posts/2024-1...

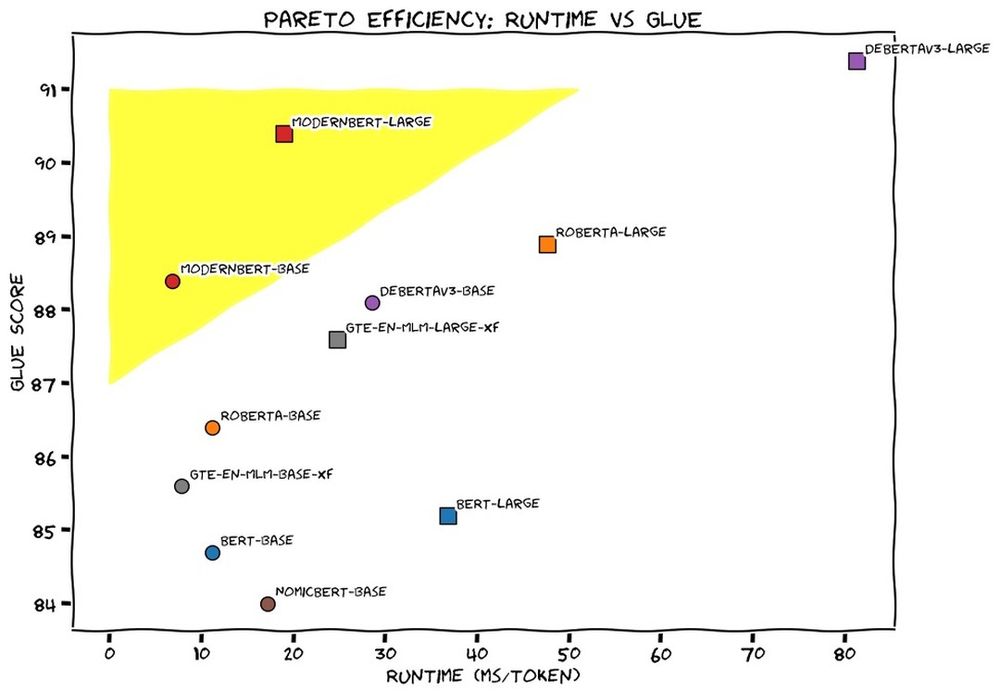

Hey, did you wonder what would happen if we trained a bigger model? Where would that take us?

Yeah, us too.

So we're gonna train a "huge" version of this model in 2025. We might need to change the y-axis on this graph…

(Sorry for using Twitter names, but I don't know everyone's bsky IDs…)

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster and more accurate, has a longer context, and is more useful. 🧵

What is it? An extension for @cursor_ai that allows you to save and share your composer and chat history.

Give it a try at marketplace.visualstudio.com/items?itemNa... and let us know what you think!

🚀 Enter Roaming RAG: a simpler way to make your LLMs find answers in well-structured docs. No vector databases, no headaches—just rich, structured context.

👉 Read how it works: arcturus-labs.com/blog/2024/11...

Tell me what you think!