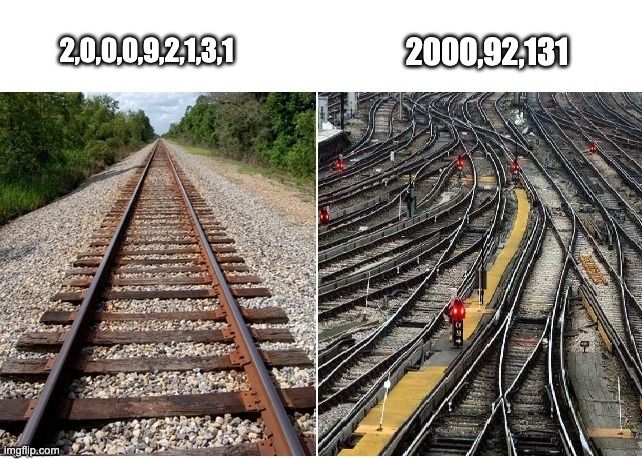

This method mirrors how we often read and interpret numbers, like grouping digits with commas. Theoretically, this should help with math reasoning!

[5/N]
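To make the comma analogy concrete, here's a minimal Python sketch that chunks a digit string into groups of three in two directions. It assumes "this method" means grouping from the right, which is how thousands separators are placed. A greedy left-to-right scan, which is what a `\d{1,3}`-style regex does, can give different chunks for the same number. `group_left_to_right` and `group_right_to_left` are hypothetical helpers, not part of any tokenizer library.

```python
def group_left_to_right(digits: str, size: int = 3) -> list[str]:
    # Chunk starting from the most significant digit (greedy left-to-right scan)
    return [digits[i:i + size] for i in range(0, len(digits), size)]


def group_right_to_left(digits: str, size: int = 3) -> list[str]:
    # Chunk starting from the least significant digit, like thousands separators
    head = len(digits) % size  # length of the (possibly shorter) leading chunk
    chunks = [digits[:head]] if head else []
    chunks += [digits[i:i + size] for i in range(head, len(digits), size)]
    return chunks


n = "1234567"
print(group_left_to_right(n))            # ['123', '456', '7']
print(group_right_to_left(n))            # ['1', '234', '567']
print(",".join(group_right_to_left(n)))  # 1,234,567
print(f"{int(n):,}")                     # 1,234,567 (Python's built-in separator)
```

Only the right-to-left version lines up with where the commas go. In that version, each chunk always holds the same place values: units, thousands, millions.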

A 10-digit number now took 10 tokens instead of 3-4, roughly 2.5-3x as many as before. That's a significant hit to training and inference costs!

LLaMA 3 fixed this by splitting numbers into chunks of up to three digits, balancing compression and consistency.

[4/N]
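A quick way to see the cost difference is to count the pieces. Here's a minimal Python sketch that only looks at a bare digit string; real tokenizers also handle text, whitespace, and special tokens. `split_digits` and `split_threes` are hypothetical helpers. The `\d{1,3}` pattern stands in for the `\p{N}{1,3}` number rule in tiktoken-style pre-tokenizer regexes such as LLaMA 3's, since Python's built-in `re` has no `\p{N}`.

```python
import re


def split_digits(number: str) -> list[str]:
    # One token per digit: consistent, but long
    return list(number)


def split_threes(number: str) -> list[str]:
    # Greedy left-to-right chunks of 1-3 digits
    return re.findall(r"\d{1,3}", number)


n = "1234567890"  # a 10-digit number
print(len(split_digits(n)))  # 10
print(split_threes(n))       # ['123', '456', '789', '0']
print(len(split_threes(n)))  # 4
```

That's 10 pieces versus 4 for the same number, a 2.5x difference from the chunking rule alone.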

Representing every number consistently, one token per digit, made mathematical reasoning much better!

[3/N]

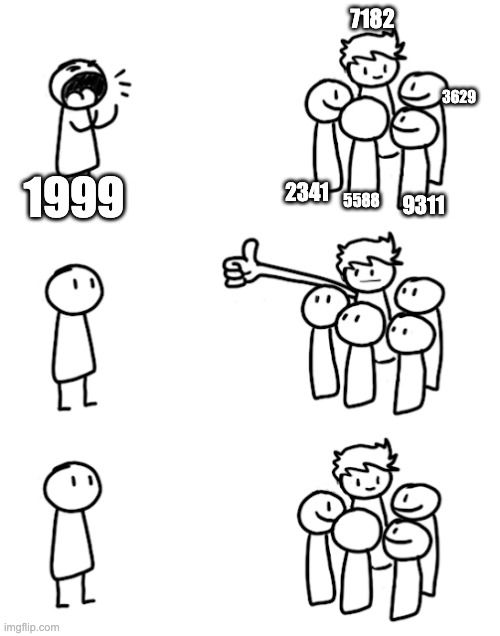

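• Merges frequent substrings into single tokens, giving shorter sequences (and saving memory) compared with feeding in single characters

• However, the vocabulary depends on the training data

• Common numbers (e.g., 1999) get single tokens; others are split into whatever pieces the learned merges happen to produce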

[2/N]
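To see why the vocabulary depends on the training data, here's a toy byte-pair-encoding trainer in Python. It's a minimal sketch, not GPT-2's actual implementation, which works on bytes and applies a pre-tokenization regex first. The tiny corpus is made up purely for illustration, with `1999` far more frequent than `1987`. `merge_pair`, `train_bpe`, and `encode` are hypothetical names.

```python
from collections import Counter


def merge_pair(symbols: tuple, pair: tuple) -> tuple:
    # Replace each non-overlapping occurrence of `pair` with one merged symbol
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def train_bpe(corpus: list[str], num_merges: int) -> list[tuple[str, str]]:
    words = Counter(tuple(w) for w in corpus)  # start from single characters
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often each word occurs
        pairs = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair wins
        merges.append(best)
        new_words = Counter()
        for symbols, freq in words.items():
            new_words[merge_pair(symbols, best)] += freq
        words = new_words
    return merges


def encode(word: str, merges: list[tuple[str, str]]) -> list[str]:
    # Apply the learned merges in the order they were learned
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


corpus = ["1999"] * 50 + ["2024"] * 5 + ["1987"] * 2  # illustrative only
merges = train_bpe(corpus, num_merges=3)
print(encode("1999", merges))  # ['1999']
print(encode("1987", merges))  # ['1', '9', '8', '7']
```

With only three merges, all the budget goes to the frequent `1999`. The rarer `1987` stays split into individual digits.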

Let's take a trip down memory lane!

[1/N]

➡️ To install: `pip install gcmt`