👨💻 https://gabrielegoletto.github.io

@cvprconference.bsky.social

#CVPR2025 paper

Learning from Streaming Video with Orthogonal Gradients

Instead of shuffling clips, can we learn from videos fed sequentially, where you see a clip once, in order?

How to deal with the correlation of gradients over training?

1/3

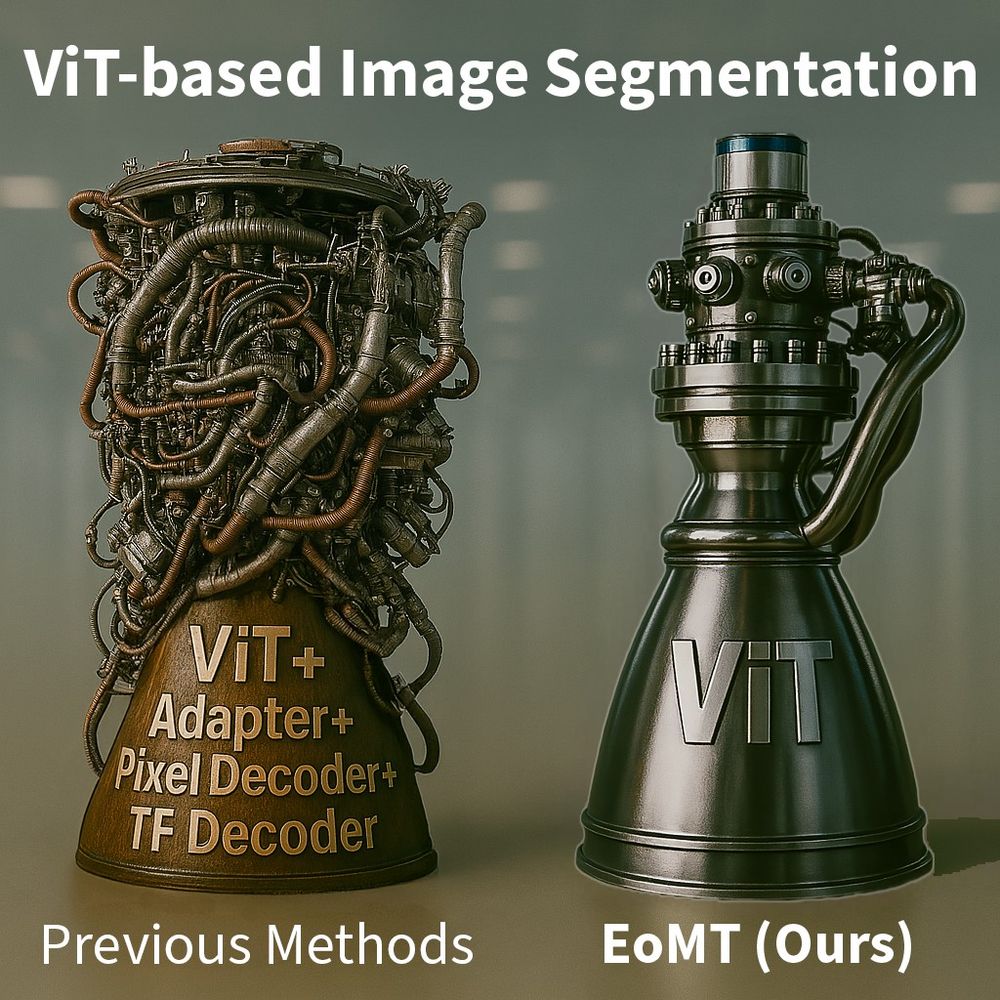

Why build a rocket engine full of bolted-on subsystems when one elegant unit does the job? 💡

That’s what we did for segmentation.

✅ Meet the Encoder-only Mask Transformer (EoMT): tue-mps.github.io/eomt (CVPR 2025)

(1/6)

Take an aerial or satellite image from anywhere in the world, and AstroLoc can (probably) find its location, and provide a precise footprint!

Links to paper, demo and full-length (5 min) video ⬇️

HD-EPIC: A Highly-Detailed Egocentric Video Dataset

hd-epic.github.io

arxiv.org/abs/2502.04144

Newly collected videos

263 annotations/min: recipe, nutrition, actions, sounds, 3D object movement & fixture associations, masks.

26K VQA benchmark to challenge current VLMs

1/N

ShowHowTo: Generating Scene-Conditioned Step-by-Step Visual Instructions

arxiv.org/abs/2412.01987

soczech.github.io/showhowto/

Given one real image & a variable-length sequence of text instructions, ShowHowTo generates a multi-step sequence of images *conditioned on the scene in the REAL image*

🧵
