Learn more: futureoflife.org

Let's take our future back.

📝 Sign the Superintelligence Statement and join the growing call to ban the development of superintelligence, until it can be made safely: superintelligence-statement.org

#KeepTheFutureHuman

💰 We're offering $100K+ for creative digital media that brings the key ideas in Executive Director Anthony Aguirre's "Keep the Future Human" essay to life, to reach wider audiences and inspire real-world action.

🔗 Learn more and enter by Nov. 30!

❓ Growing uncertainty.

🤝 One shared future, for us all to shape.

"Tomorrow’s AI", our new scrollytelling site, visualizes 13 interactive, expert-forecast scenarios showing how advanced AI could transform our world - for better, or for worse: www.tomorrows-ai.org

➡️ Following our 2024 index, 6 independent AI experts rated leading AI companies - OpenAI, Anthropic, Meta, Google DeepMind, xAI, DeepSeek, and Zhipu AI - across critical safety and security domains.

So what were the results? 🧵👇

It’s a huge win for Big Tech - and a big risk for families.

✍️ Add your name and say no to the federal block on AI safeguards: FutureOfLife.org/Action

-The recent hot topic of sycophantic AI

-Time horizons of AI agents

-AI in finance and scientific research

-How AI differs from other technology

And more.

🔗 Tune in to the full episode now at the link below:

Learn more: astera.org/residency

🧵 An overview of the measures we recommend 👇

➡️ FLI Executive Director Anthony Aguirre joins to discuss his new essay, "Keep the Future Human", which warns that the unchecked development of smarter-than-human, autonomous, general-purpose AI will almost inevitably lead to human replacement - but it doesn't have to:

🎥 Watch at the link in the replies for a breakdown of the risks from smarter-than-human AI - and Anthony's proposals to steer us toward a safer future:

That's why FLI Executive Director Anthony Aguirre has published a new essay, "Keep The Future Human".

🧵 1/4

❓ If AIs could have free will

🧠 AI psychology?

🤝 Trading with AI, and its role in finance

And more!

Watch now at the link below, or on your favourite podcast player! 👇

This emergent deceptive behaviour highlights the unsolved challenge of controlling powerful AI.

If today's AI breaks chess rules, what might AGI do?

🔗 Read more below:

📺 The latest from Digital Engine showcases how close these threats are becoming:

✍️ Apply by January 10 at the link in the replies:

🤝 FLI is offering up to $5 million in grants for multistakeholder engagement efforts on safe & prosperous AI.

👉 We're looking for projects that educate and engage specific stakeholder groups on AI-related issues, or foster grassroots outreach/community organizing:

New on the FLI blog:

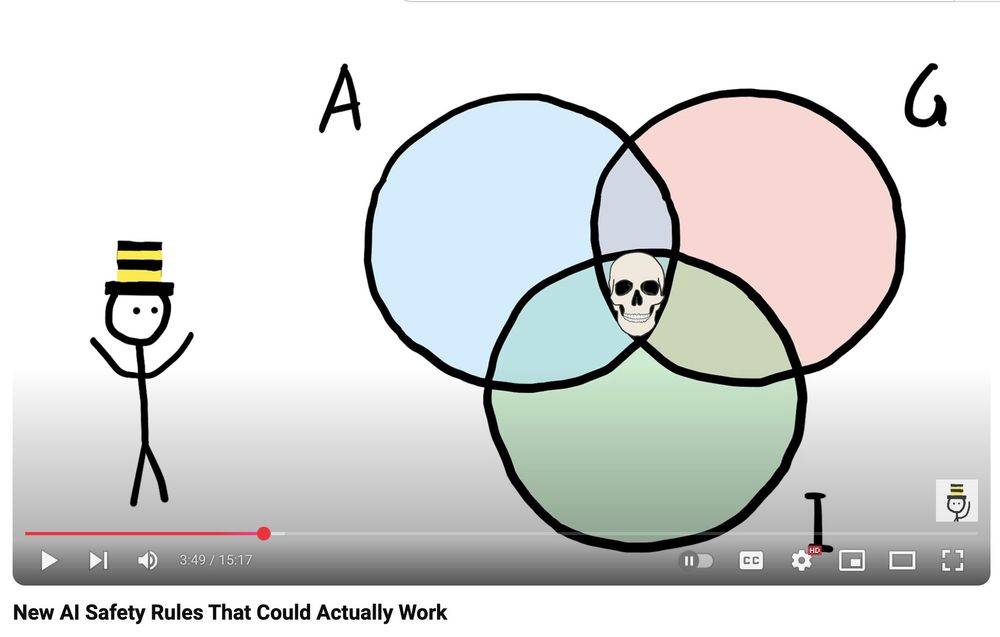

-Why might AIs resist shutdown?

-Why is this a problem?

-What other instrumental goals could AIs have?

-Could this cause a catastrophe?

🔗 Read it below:

"'[Superintelligent] systems are actually going to be agentic in a real way,' Sutskever said, as opposed to the current crop of 'very slightly agentic' AI. They’ll 'reason' and, as a result, become more unpredictable."

From @techcrunch.com at @neuripsconf.bsky.social:

In the context of OpenAI's new o3 model announcement, a new Digital Engine video features AI experts discussing existential threats from advancing AI, especially artificial general intelligence - which we currently have no way to control.

⏯️ Watch now below:

What does this mean?

🧵👇

🗞️ Our 2024 AI Safety Index, released last week, was covered in @jeremyakahn.bsky.social's @fortune.com newsletter.

🔗 Read the full newsletter, and find our complete scorecards report, in the replies below:

🇺🇸 Passionate about advocating for forward-thinking AI policy? This could be your opportunity to lead our US policy work and growing US policy team.

✍ Please share, and apply by Dec. 22 at the link in the replies:
