Be sure to stop by the poster sessions to check out Hailey's work on decision-making, @zachtpennington.bsky.social's work on stress, @zoechristensonwick.bsky.social's work on seizures, Alexa's work on cocaine seeking, and Sandra's work on context representations 🤓

Clinical applications on the horizon! More on that soon!

(Makes continual learning much easier!)

Turns out: training for pattern recognition gives generative abilities

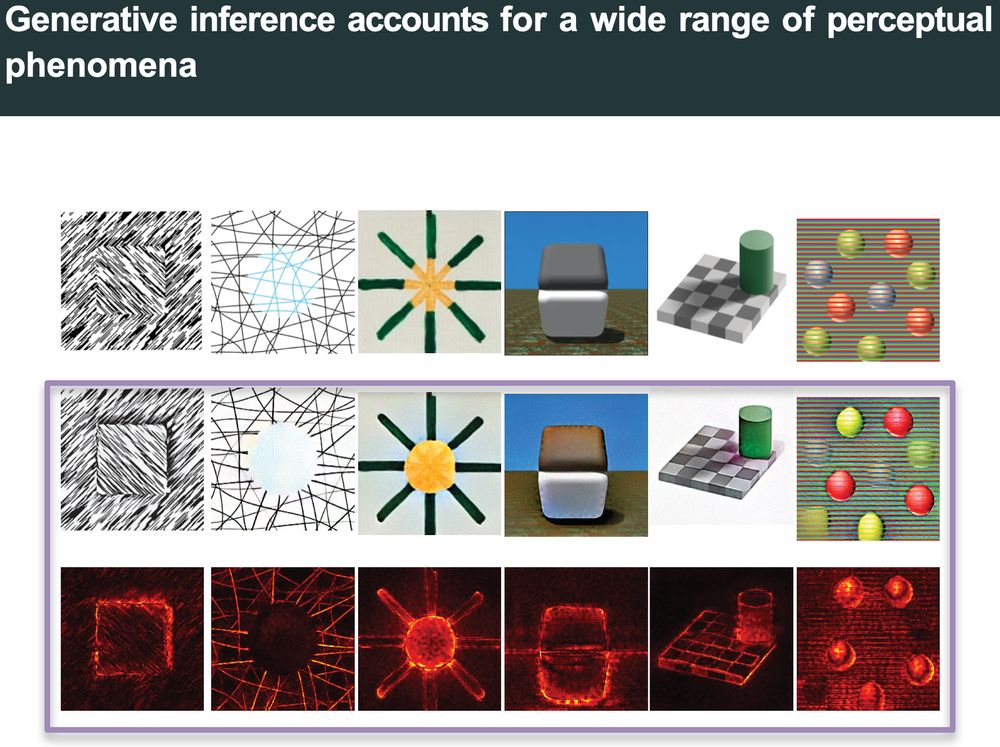

Again, generative inference via PGDD accounts for all of them.

Our conclusion: all Gestalt perceptual grouping principles may unify under one mechanism: integration of priors into sensory processing.

What about other principles? Roelfsema lab ran a clever monkey study:

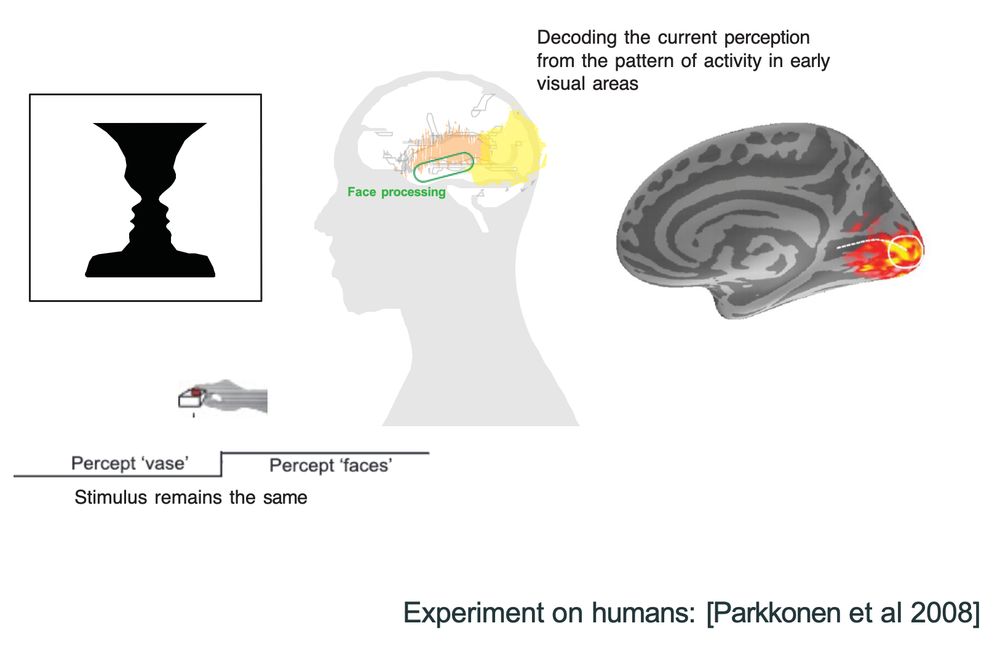

Running generative inference: the object network creates vase patterns, the face network creates facial features!

Here are actual ImageNet samples of both classes. The network found its closest meaningful interpretation!

First time I ran this—I couldn't believe my eyes!

The algorithm iteratively updates activations using gradients (errors) of this objective.

Our inference objective? Simple and intuitive: Increase confidence!
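
To make "increase confidence" concrete, here is a toy sketch in plain Python. It is not the PGDD implementation from this work: the frozen classifier is a hypothetical three-class linear softmax model, the names `softmax` and `confidence_ascent` are made up for illustration, and the quantity being updated is the input activation vector. The objective is the log-probability of the currently most likely class, and each step moves the activations along the gradient of that objective.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confidence_ascent(weights, x, steps=50, lr=0.5):
    """Gradient ascent on log p(top class) with respect to the activations x.

    weights: one row per class of a frozen linear classifier (an assumption
             made for this toy example, not the network from the thread).
    Returns the updated activations and the confidence before each update.
    """
    x = list(x)
    history = []
    for _ in range(steps):
        logits = [sum(w * v for w, v in zip(row, x)) for row in weights]
        probs = softmax(logits)
        k = max(range(len(probs)), key=probs.__getitem__)  # current top class
        history.append(probs[k])
        # For a linear softmax model: d log p_k / d x = W_k - sum_j p_j * W_j
        grad = [
            weights[k][d] - sum(p * row[d] for p, row in zip(probs, weights))
            for d in range(len(x))
        ]
        # Move the activations in the direction that raises confidence.
        x = [v + lr * g for v, g in zip(x, grad)]
    return x, history


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # hypothetical frozen weights
    x_final, conf = confidence_ascent(W, [0.2, 0.1])
    print("confidence before first update:", conf[0])
    print("confidence before last update:", conf[-1])
```

In a deep network the same gradient would come from backpropagation through the frozen weights rather than from a closed-form expression.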

Conventional inference → low-confidence output. Makes sense: it was never trained on Kanizsa stimuli. But what if we use its (intrinsic learning) feedback (aka the backpropagation graph)?
