Goals are mapped to programs which are embedded in a latent space.

A fitness metric is assigned to the programs and program search is done to synthesise new human-like goals.

YAML parsing in Python is weird.

![{'lol': ['5.0E6', '5.0e6', '5.E6', '5.e6', '5E6', '5e6', 5e-06, 5e-06, 5e-06, 5e-06, '5E-6', '5e-6', 5000000.0, 5000000.0, 5000000.0, 5000000.0, '5E+6', '5e+6']}](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:ndszovlqz5net2hyllfww2yn/bafkreicua2n2os5acybolmps4wwgr35vh5l3fo2zisz3t3bhqs5efdq7mq@jpeg)
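
The output in the screenshot is consistent with a YAML 1.1-style float rule that needs both a decimal point and an explicitly signed exponent. Here is a minimal sketch of that rule using only `re`. The regex is a simplified stand-in (an assumption, not PyYAML's actual source), and `resolve_scalar` is a hypothetical helper.

```python
import re

# Simplified YAML 1.1-style float pattern (assumption, not PyYAML's source):
# the mantissa needs a dot, and an exponent, if present, needs a sign.
YAML11_FLOAT = re.compile(r"^[-+]?[0-9][0-9_]*\.[0-9_]*(?:[eE][-+][0-9]+)?$")


def resolve_scalar(text):
    """Return a float if the text matches the pattern, else the raw string."""
    if YAML11_FLOAT.match(text):
        return float(text.replace("_", ""))
    return text


samples = ["5.0E6", "5E6", "5.0e-6", "5.e-6", "5e-6", "5.0E+6", "5E+6"]
resolved = [resolve_scalar(s) for s in samples]
print(resolved)
# ['5.0E6', '5E6', 5e-06, 5e-06, '5e-6', 5000000.0, '5E+6']
```

Under this rule, `5e6` and `5.0E6` stay strings, which matches the screenshot. Writing `5.0e+6` is the safe spelling if you want a float.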

Creating the GUI at PARC seemed like a "waste of FLOPs" but revolutionized computing.

Models generalise to slightly harder versions of a problem, and the correct answers are used to bootstrap the next model and the next one and so on.
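
The loop described above can be sketched as a toy simulation. Everything here is an assumption for illustration: the "model" is just an integer competence level, `attempt` and `bootstrap` are hypothetical names, and the 50% success rate and the threshold of 8 correct answers are arbitrary.

```python
import random


def attempt(competence, difficulty, rng):
    """Toy model: solves anything up to its competence, solves the
    next level half the time, and fails beyond that."""
    if difficulty <= competence:
        return True
    if difficulty == competence + 1:
        return rng.random() < 0.5
    return False


def bootstrap(rounds, samples_per_round=32, min_correct=8, seed=0):
    rng = random.Random(seed)
    competence = 1  # hardest level the current model has mastered
    history = [competence]
    for _ in range(rounds):
        target = competence + 1  # slightly harder version of the problem
        # Count only the attempts that a verifier marks as correct.
        n_correct = sum(
            attempt(competence, target, rng) for _ in range(samples_per_round)
        )
        # "Train" the next model: it masters the new level only if
        # enough correct answers were collected.
        if n_correct >= min_correct:
            competence = target
        history.append(competence)
    return history


history = bootstrap(rounds=5)
print(history)
```

The point of the sketch is the shape of the loop: each generation only ever needs to reach one level past what it was trained on.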

Starting to think

gibberish gibberish gibberish

Focus again. Calm up.

🤣

It's fun to see these aha moments and it'd be interesting to understand whether their presence helps.

At least in that case, it looks like <thinking> is simply a consequence of RL.

Could not reproduce with the API tho.

No wonder that OAI keeps the chains of thought private!

Another example of "the model just wants to learn". No need for fancy search - looks like the model will learn the right algo in the chain of thought.

Another important feature is support for NCCL, NVIDIA's library for fast inter-GPU communication, so multiple boxes can be used for inference.

If that future is to come, we need to catalyse a similar community and similar machines.

The first machine I heard about in this category was Tinybox.

The smallest has 0.7 FP16 PFLOPS and 144GB GPU memory at $15k.
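
A quick back-of-the-envelope on those numbers. The price, PFLOPS and memory come from the post; the 2 bytes per parameter for FP16 weights is an assumption, and the parameter bound ignores KV cache and activations.

```python
# Figures quoted in the post.
price_usd = 15_000
fp16_pflops = 0.7
gpu_memory_gb = 144

# Assumption: FP16 weights take 2 bytes per parameter.
bytes_per_param = 2

usd_per_pflops = price_usd / fp16_pflops
usd_per_gb = price_usd / gpu_memory_gb
# 1 GB = 1e9 bytes, so GB / bytes-per-param gives billions of parameters.
max_params_billion = gpu_memory_gb / bytes_per_param

print(f"{usd_per_pflops:,.0f} USD per FP16 PFLOPS")    # 21,429 USD per FP16 PFLOPS
print(f"{usd_per_gb:,.0f} USD per GB of GPU memory")   # 104 USD per GB of GPU memory
print(f"~{max_params_billion:.0f}B FP16 parameters fit, weights only")  # ~72B
```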

It was programmed using individual switches and its display was a bunch of lights on the front panel.

Nonetheless, the price was low enough to start a hobbyist community and catalyse the PC community.

It's a good one!

Privacy-preserving RAG with local LLM and remote documents could be done in a very similar way.

Mystery Blocksworld is a block stacking task where the names are randomised, requiring generalisation.

Still plenty of room to go, but clearly the start of a new S-curve.
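
To make the "names are randomised" idea concrete, here is a toy sketch of renaming the vocabulary of a block-stacking plan. It is an illustration only, not the benchmark's actual generator: `randomise_names`, the `tokNNN` tokens and the example plan are all made up.

```python
import random
import re


def randomise_names(text, names, seed=0):
    """Replace every known name with a distinct random token."""
    rng = random.Random(seed)
    # Sample without replacement so no two names share a token.
    tokens = rng.sample([f"tok{i:03d}" for i in range(1000)], k=len(names))
    mapping = dict(zip(names, tokens))
    # Match whole words only, so "stack" inside "unstack" is left alone.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, names)) + r")\b")
    return pattern.sub(lambda m: mapping[m.group(1)], text), mapping


plan = "pickup red; stack red blue; unstack green yellow"
names = ["pickup", "stack", "unstack", "red", "blue", "green", "yellow"]
obfuscated, mapping = randomise_names(plan, names)
print(obfuscated)
```

The structure of the plan survives, but a model can no longer lean on the familiar words and has to work from the rules it is given.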

Breakthrough labs (this is from OpenAI) are basically GPUs, Python, monitoring, docs, and a chat app.

Even if this post was partly in jest, this is a point of joy. We should make sure the culture of openness continues.

My intuition is that it rhymes with MaxEnt, with code and math verifiers. Like, OREO has similar scaling curves.

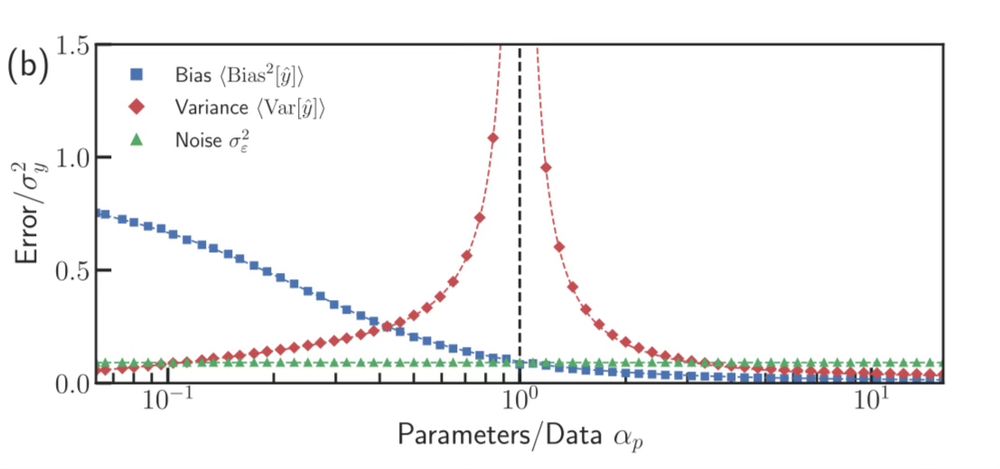

ML conventional wisdom is the bias-variance trade-off.

Here is a neural net with a single hidden layer. At first, bias decreases and variance increases.

As you train for longer, you get a phase transition and then *both* decrease.
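
For reference, the trade-off comes from the standard decomposition of expected squared error. This assumes data of the form $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and expectations taken over training sets and noise (notation is mine, not from the figure):

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat f(x) - \mathbb{E}[\hat f(x)])^2\big]}_{\text{variance}}
+ \sigma^2
$$

Nothing in this identity forces the two terms to move in opposite directions, so both decreasing together is allowed.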
