✨I help companies leverage AI and develop innovative products

🧩I assist mentees in becoming the top 1% of AI practitioners

We're already seeing ModernBERT finetunes on the @hf.co Hub. My guess is we'll see hundreds of these by the end of 2025.

I have been looking forward to this feature, as I feel most back-to-back releases are overwhelming and I tend to miss out 🤠

It has a sequence length of 8192, is extremely efficient, is uniquely great at analyzing code, and much more. Read this for details:

huggingface.co/blog/modern...

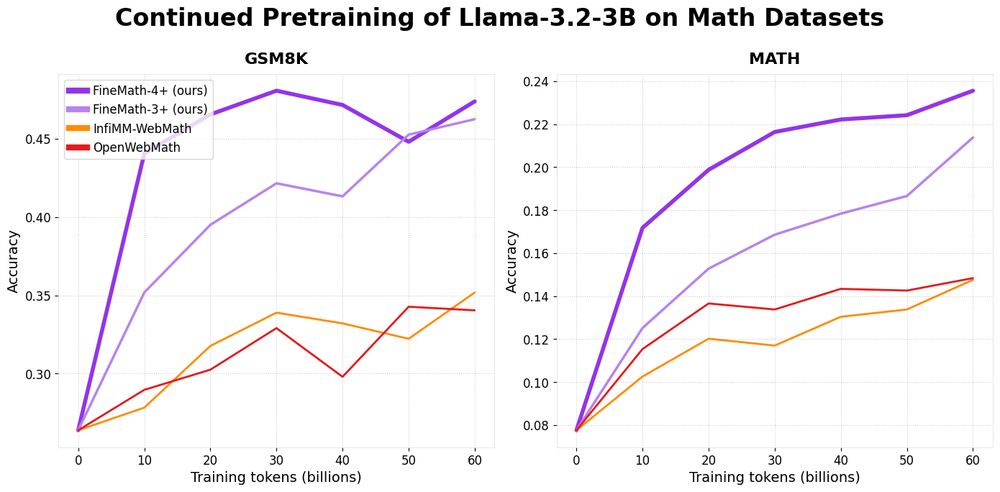

Math remains challenging for LLMs, and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

simonwillison.net/2024/Dec/19/...

QLoRA fine-tuning in 4-bit with a batch size of 4 can be done with 32 GB of VRAM and is very fast! ✨

github.com/merveenoyan/...

Inspired by FineWeb-Edu, the community is labelling the educational quality of texts in many languages.

318 annotators, 32K+ annotations, 12 languages - and growing!🌍

huggingface.co/datasets/dat...

They did this to show how easy it now is to mass-produce "credible" research. Academia isn't ready.

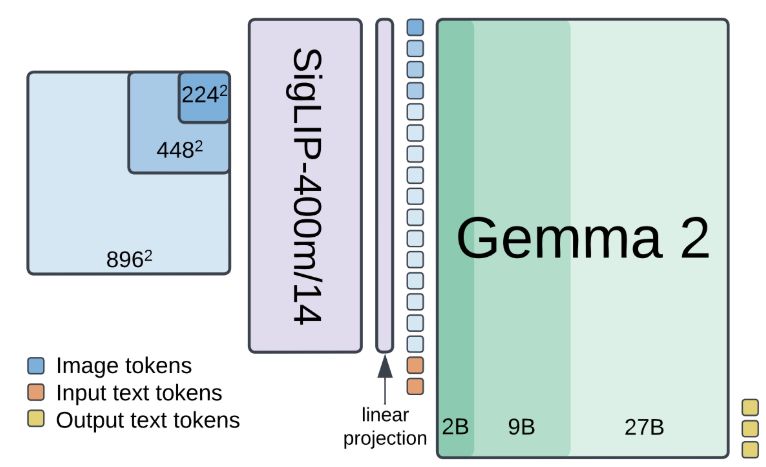

C4AI community has built Maya 8B, a new open-source multilingual VLM built on SigLIP and Aya 8B 🌱 works on 8 languages! 🗣️

The authors extend the LLaVA dataset with 558k examples, using Aya's translation capabilities!

works very well ⬇️ huggingface.co/spaces/kkr51...

simonwillison.net/2024/Dec/15/...

- It has SFT-formatted reasoning sequences, like those in o1.

- You could incorporate these into post-training to boost reasoning abilities.

ColQwen2 as retriever, MonoQwen2-VL as reranker, and Qwen2-VL as VLM in this notebook, which runs on a GPU as small as an L4 🔥 huggingface.co/learn/cookbo...

High-quality and natural speech generation that runs 100% locally in your browser, powered by OuteTTS and Transformers.js. 🤗 Try it out yourself!

Demo + source code below 👇

Check it out at huggingface.co/spaces/open-...

@merve.bsky.social transformers with quantized LoRA: https://buff.ly/3CXfH7s

Jetha Chan brings LoRA to Keras https://buff.ly/4iBgprp & JAX https://buff.ly/4f5DLCv

Notes and recording here:

hamel.dev/notes/llm/of...

1/7

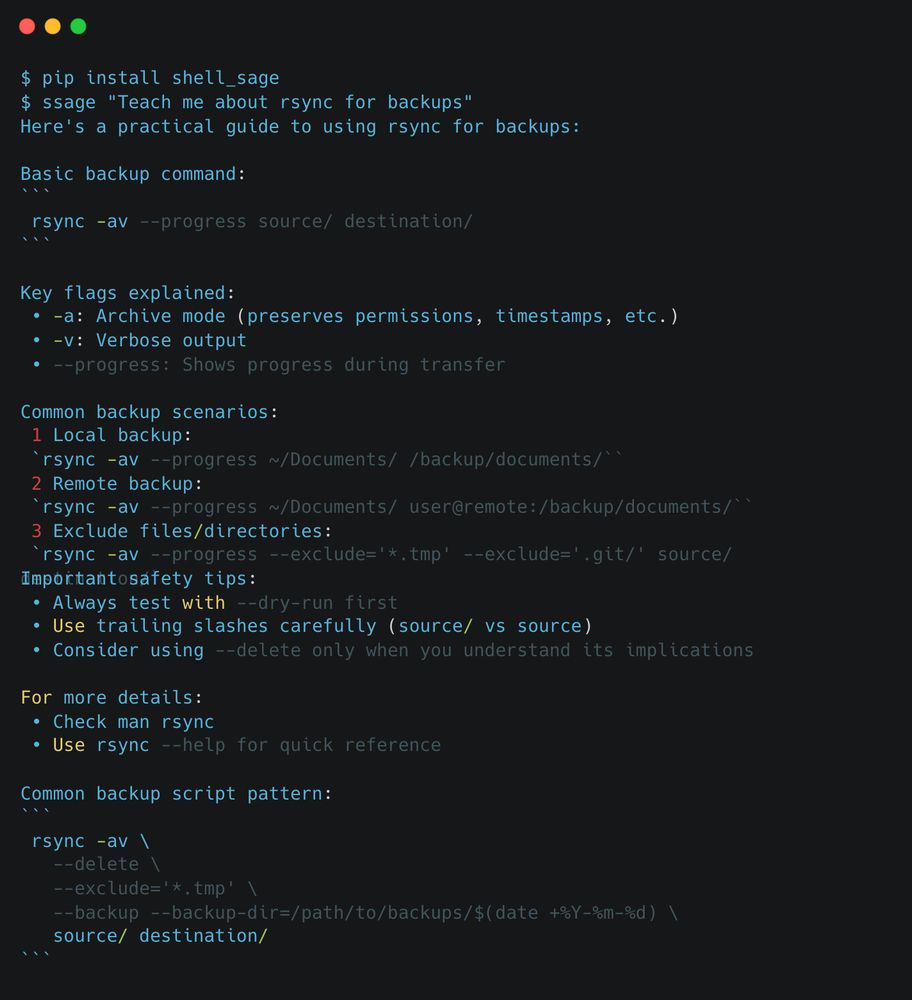

ShellSage is an LLM that lives in your terminal. It can see what directory you're in, what commands you've typed, what output you got, and your previous AI Q&As.🧵

My notes here: simonwillison.net/2024/Dec/6/r...

Interactive data viz: huggingface.co/spaces/huggi...

Here's what we learned from analyzing real-world implementations...

👷 If you want to get involved, you can do this:

- read (and star) the repo

- check out our new Discord channel

- open a PR to submit an exercise on module 1

- open an issue to improve the course

- review another submission

🧵
