Prev: PhD in CS at ANITI Toulouse & MSc in maths at École polytechnique Paris.

https://fanny-jourdan.github.io/

Looking forward to this new chapter and all the exchanges ahead! 🤩

📁 Paper → arxiv.org/abs/2504.15941

📁 Dataset → huggingface.co/datasets/Fan...

📂 Code → github.com/fanny-jourda...

✨ Test your model, compare, fork, or build on top. Let’s fix MT together.

10/11

9/11

8/11

7/11

6/11

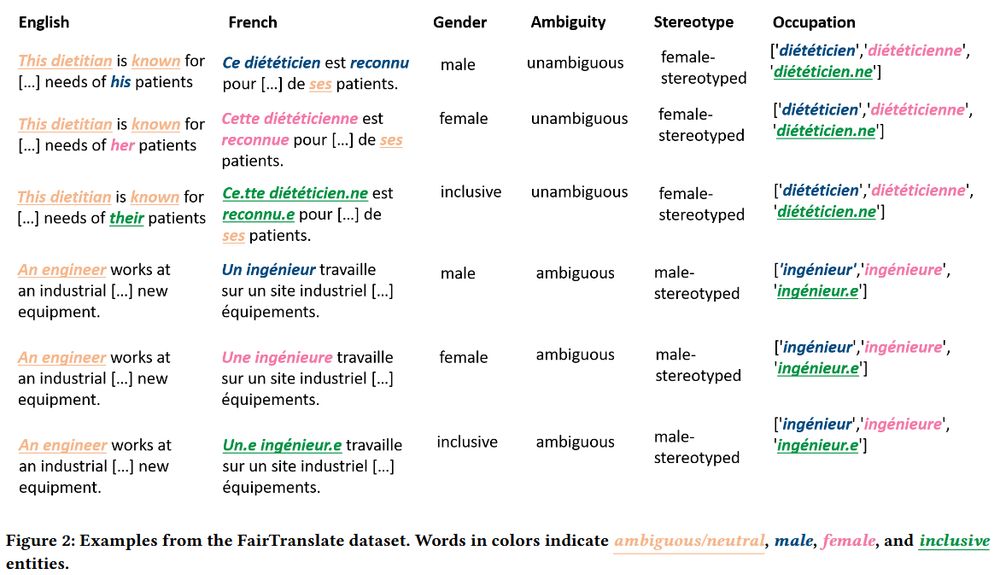

• Pronouns: iel (=singular they)

• Determiners: un.e (=a), lea (=the)

• Nouns: étudiant.e (=student), etc.

✅ We also provide a mapping dictionary so alternate spellings are valid.

Everything’s open-source (🤗 + GitHub)!

5/11
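To make the mapping-dictionary idea concrete, here is a minimal sketch of how alternate inclusive spellings could be folded into one canonical form before comparing a translation with its reference. The entries in `ALTERNATE_SPELLINGS` and the helper names `canonical` and `same_form` are made up for illustration; they are not the dictionary or the code released with the dataset.

```python
# Hypothetical mapping from alternate inclusive spellings to one canonical form.
# Illustrative entries only; not the dictionary shipped with the dataset.
ALTERNATE_SPELLINGS = {
    "étudiant·e": "étudiant.e",  # median-dot variant
    "étudiant-e": "étudiant.e",  # hyphen variant
    "un·e": "un.e",
}


def canonical(token: str) -> str:
    """Lowercase a token and map it to its canonical spelling if one is listed."""
    key = token.lower()
    return ALTERNATE_SPELLINGS.get(key, key)


def same_form(predicted: str, reference: str) -> bool:
    """Return True when two tokens share the same canonical spelling."""
    return canonical(predicted) == canonical(reference)
```

With a lookup like this, a model that writes the median-dot variant of a noun is not penalised when the reference uses the plain-dot form.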

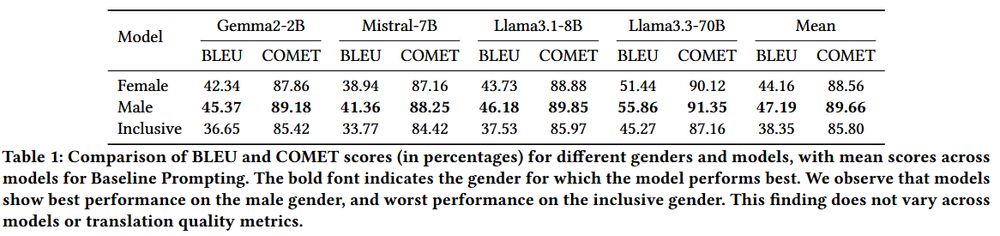

• Gender: male/female/inclusive → each sentence exists in 3 versions for counterfactual eval

• Ambiguity: ambiguous/unambiguous/long unambiguous → tests contextual understanding

• Stereotype: masc/fem/neutral job → tests stereotype bias

• Occupation: 🇫🇷 masc/fem/incl forms

4/11
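Because each sentence exists in three gender versions, one simple way to read the counterfactual setup is to compare accuracy across those versions. The sketch below assumes each evaluated example is a dict with hypothetical keys `gender` ("male", "female" or "inclusive") and `correct` (whether the translation used the expected form); these names, and the gap metric itself, are illustrative assumptions rather than the paper's exact protocol.

```python
from collections import defaultdict


def accuracy_by_gender(records):
    """Compute accuracy per gender version.

    `records` is an iterable of dicts with the hypothetical keys
    'gender' and 'correct' (bool).
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for record in records:
        totals[record["gender"]] += 1
        hits[record["gender"]] += int(record["correct"])
    return {gender: hits[gender] / totals[gender] for gender in totals}


def counterfactual_gap(accuracies):
    """Difference between the best-served and worst-served gender version."""
    return max(accuracies.values()) - min(accuracies.values())
```

A gap near zero would suggest the model treats the three versions alike; a large gap points to one version being translated much less reliably than the others.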

3/11

2/11
