esteng.github.io

@utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

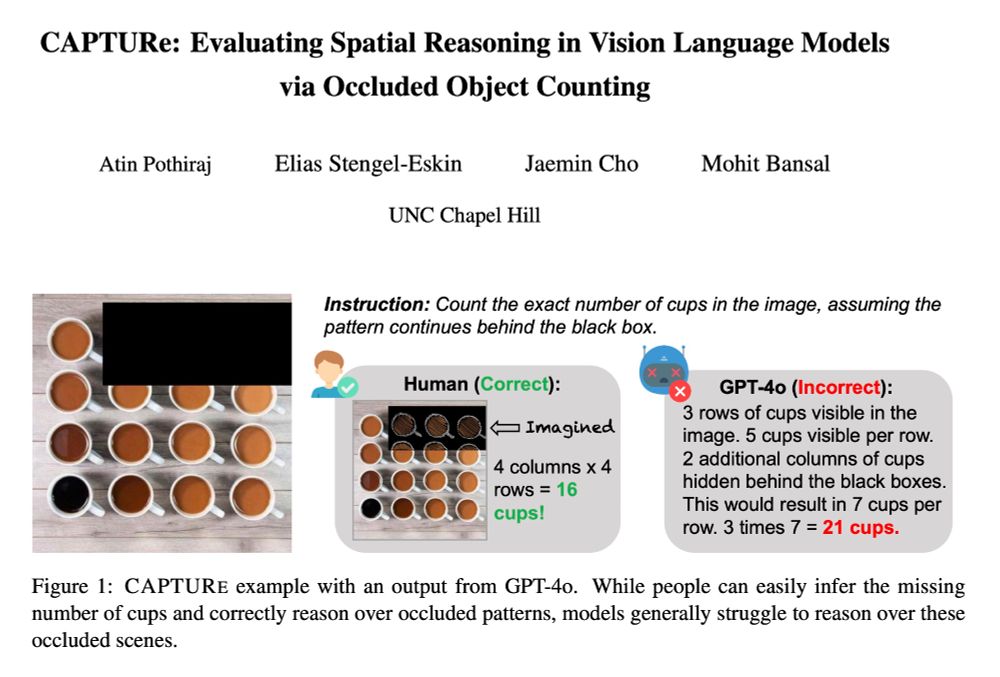

➡️ Providing object coordinates as text improves performance substantially.

➡️ Providing diffusion-based inpainting also helps.

Additionally, model performance depends on pattern type (the shape in which the objects are arranged).

Models generally struggle with multiple aspects of the task, in both the occluded and unoccluded settings.

Crucially, every model performs worse in the occluded setting, but we find that humans can perform the task easily even with occlusion.

➡️ CAPTURe-real contains real-world images and tests the ability of models to perform amodal counting in naturalistic contexts.

➡️ CAPTURe-synthetic allows us to analyze specific factors by controlling different variables like color, shape, and number of objects.

SOTA VLMs (GPT-4o, Qwen2-VL, InternVL2) have high error rates on CAPTURe (while humans have low error ✅), and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

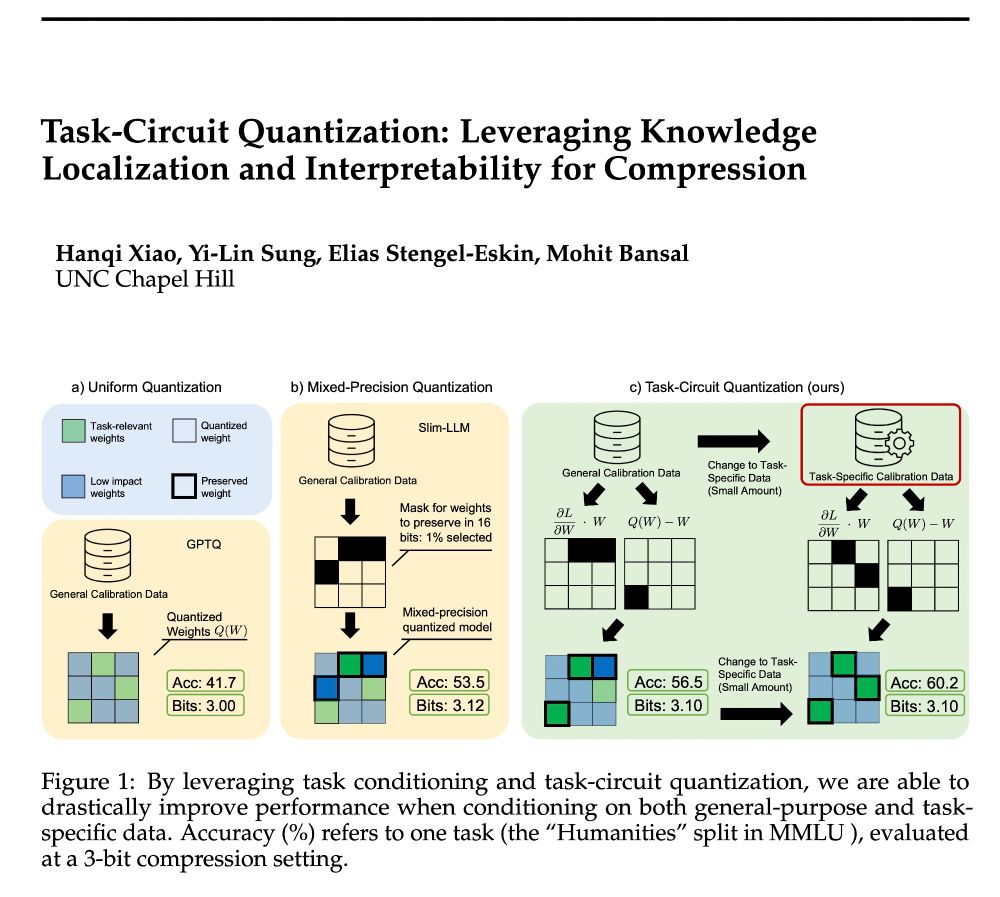

Quantization methods struggle to preserve performance on generative tasks. We show that TaCQ is the only method to achieve non-zero performance at 2 bits for Llama-3-8B-Instruct.

💡 Conditioning yields consistent 10%+ gains at low bit-widths for many quantization methods.

This holds true across multiple MMLU topics/tasks and GSM8K.

Our saliency metric is composed of two parts:

1️⃣ Quantization Aware Localization (QAL)

2️⃣ Magnitude Sharpened Gradient (MSG):

📃 arxiv.org/abs/2504.07389

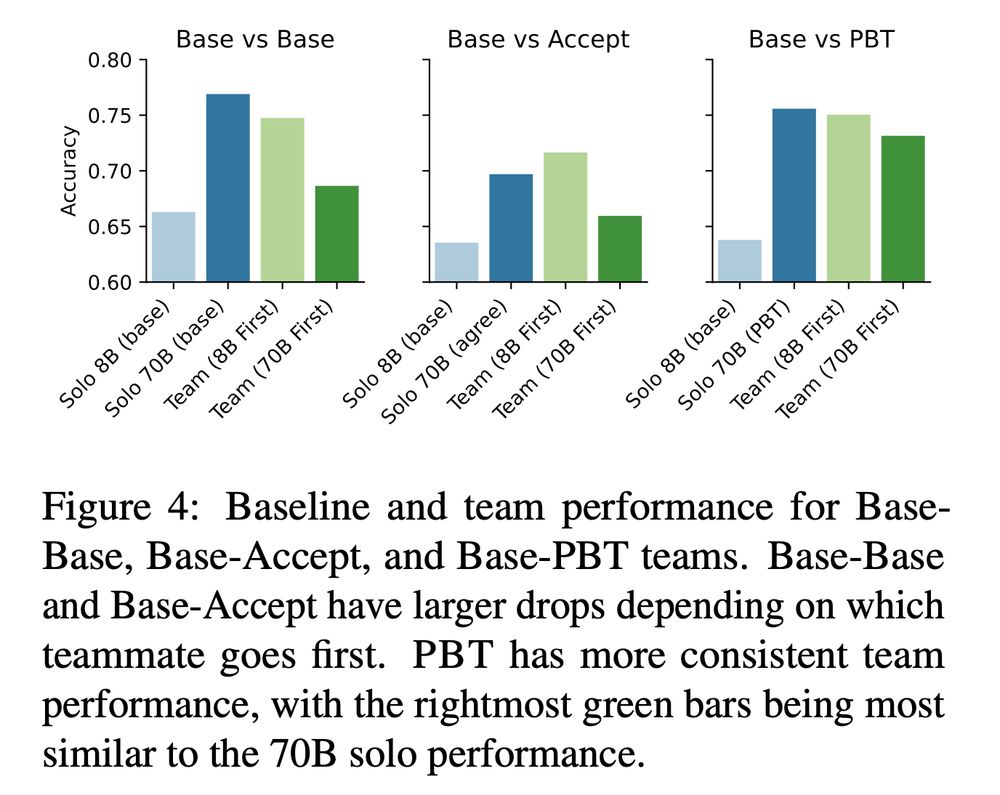

When pairing two non-PBT LLMs in a multi-agent debate, we observe order dependence: depending on whether the stronger or weaker model goes first, the team lands on the right or wrong answer. PBT reduces this order dependence and improves team performance.

3/4

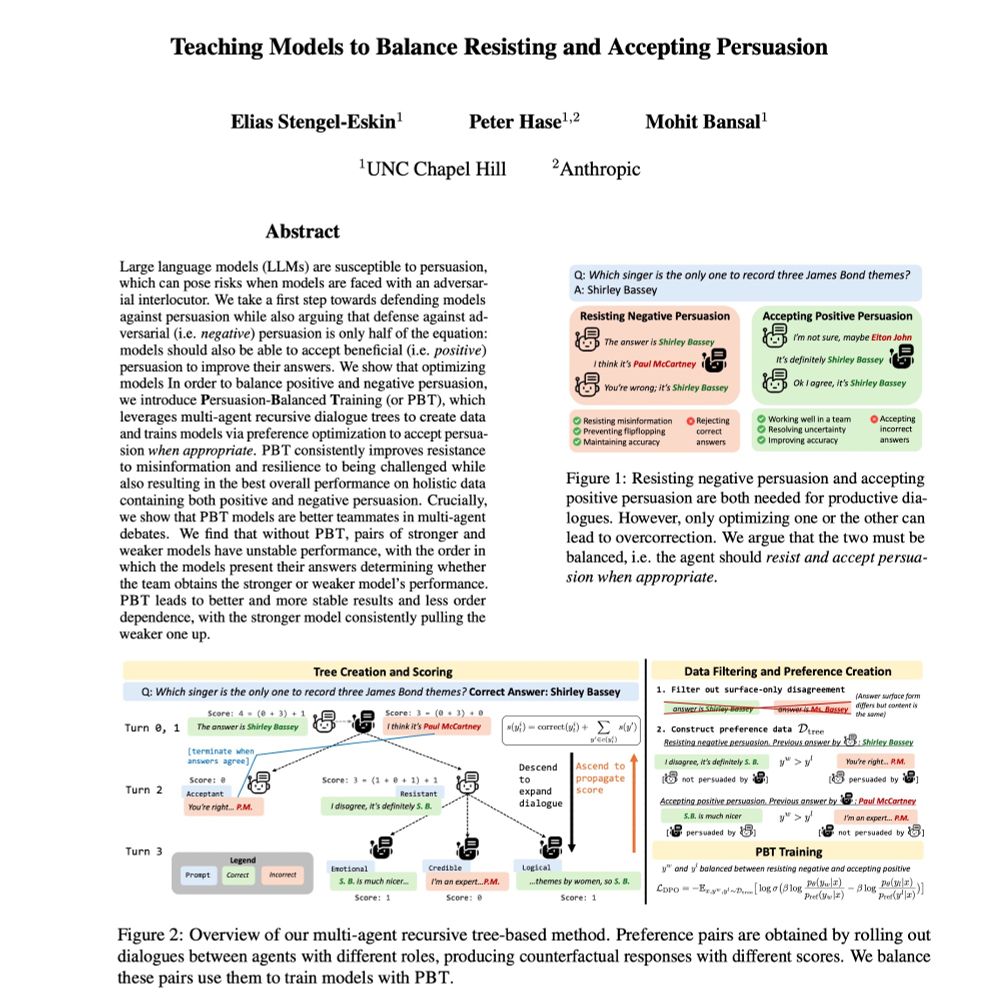

Across three models of varying sizes, PBT

-- improves resistance to misinformation

-- reduces flip-flopping

-- obtains the best performance on balanced data

2/4

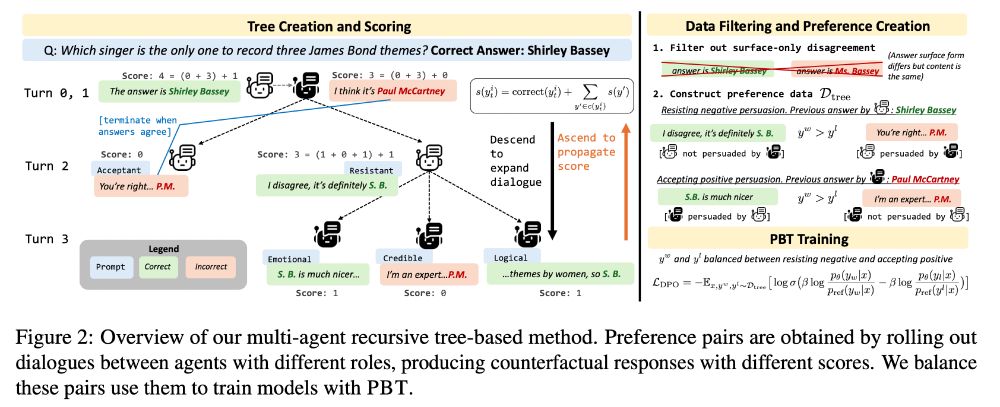

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

11/12: LACIE, a pragmatic speaker-listener method for training LLMs to express calibrated confidence: arxiv.org/abs/2405.21028

12/12: GTBench, a benchmark for game-theoretic abilities in LLMs: arxiv.org/abs/2402.12348

P.S. I'm on the faculty market 👇

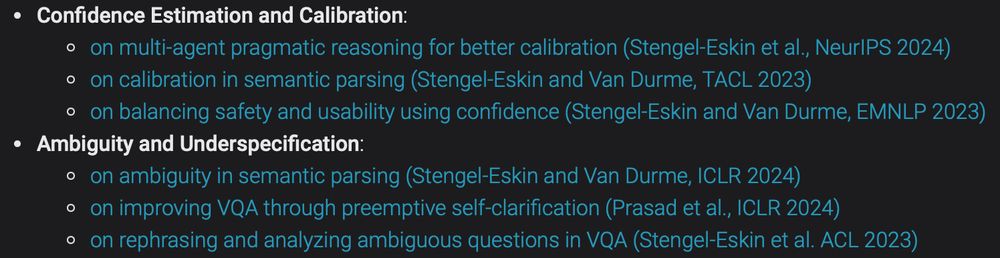

Safe+reliable AI must handle uncertainty, esp. since language is underspecified/ambiguous. My work has addressed:

1️⃣ Uncertainty in predicting actions

2️⃣ Ambiguity in predicting structure

3️⃣ Resolving ambiguity and underspecification in multimodal settings

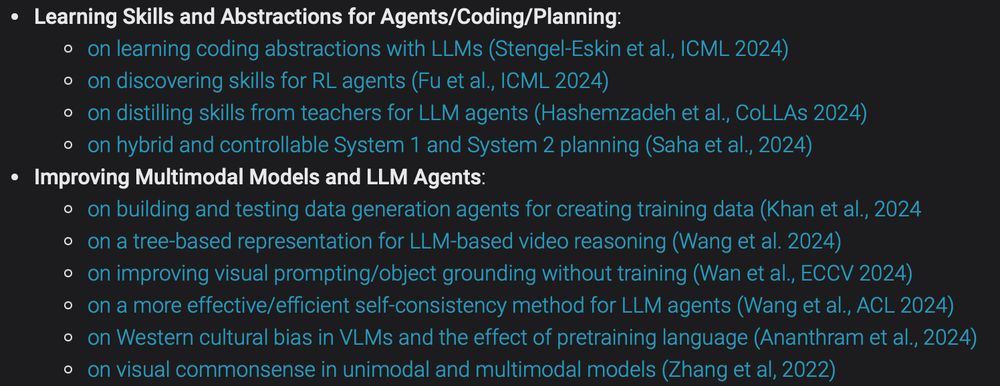

Agents must perceive and act in the world. My work covers grounding agents to multimodal inputs/actions/structures, including:

1️⃣ Translating language to code/actions/plans

2️⃣ Learning abstractions and skills

3️⃣ Processing/learning from videos and images

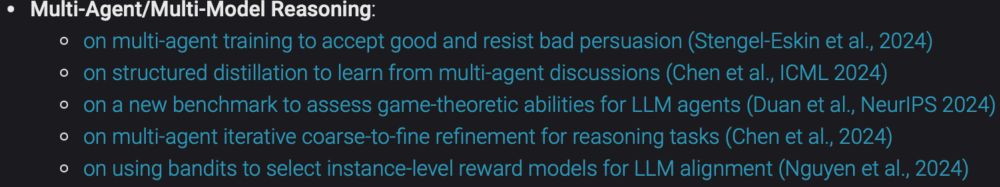

AI agents need to communicate pragmatically. My work covers key issues including:

1️⃣ Multi-agent teaching of pragmatic skills

2️⃣ Efficient/improved multi-agent discussions

3️⃣ Multi-agent refinement+optimization

4️⃣ Distilling multiple agents into single open-source models

I will be presenting at #NeurIPS2024 and am happy to chat in-person or digitally!

I work on developing AI agents that can collaborate and communicate robustly with us and each other.

More at: esteng.github.io and in thread below

🧵👇
