Apply to work on AI for social sciences/human behavior, social NLP, and LLMs for real-world applied domains you're passionate about!

Learn more at kristinagligoric.com & help spread the word!

The results of our #EMNLP2025 paper suggest so!

@esradonmez.bsky.social and I will present this work in Poster Session 1, 11:00-12:30 on 5th Nov, Hall C3

🧵👇

aclanthology.org/2025.emnlp-m...

🎉 Good news: I am hiring! 🎉

The position is part of the “Contested Climate Futures" project. 🌱🌍 You will focus on developing next-generation AI methods🤖 to analyze climate-related concepts in content—including texts, images, and videos.

Share your thoughts 👉https://tinyurl.com/39ab55wf

- Open-topic PhD positions: express your interest through ELLIS by 31 October 2025, start in Autumn 2026: ellis.eu/news/ellis-p...

#NLProc #XAI

@pranav-nlp.bsky.social Soda Marem Lo, Neele Falk, @gingerinai.bsky.social @davidjurgens.bsky.social @a-lauscher.bsky.social and myself!

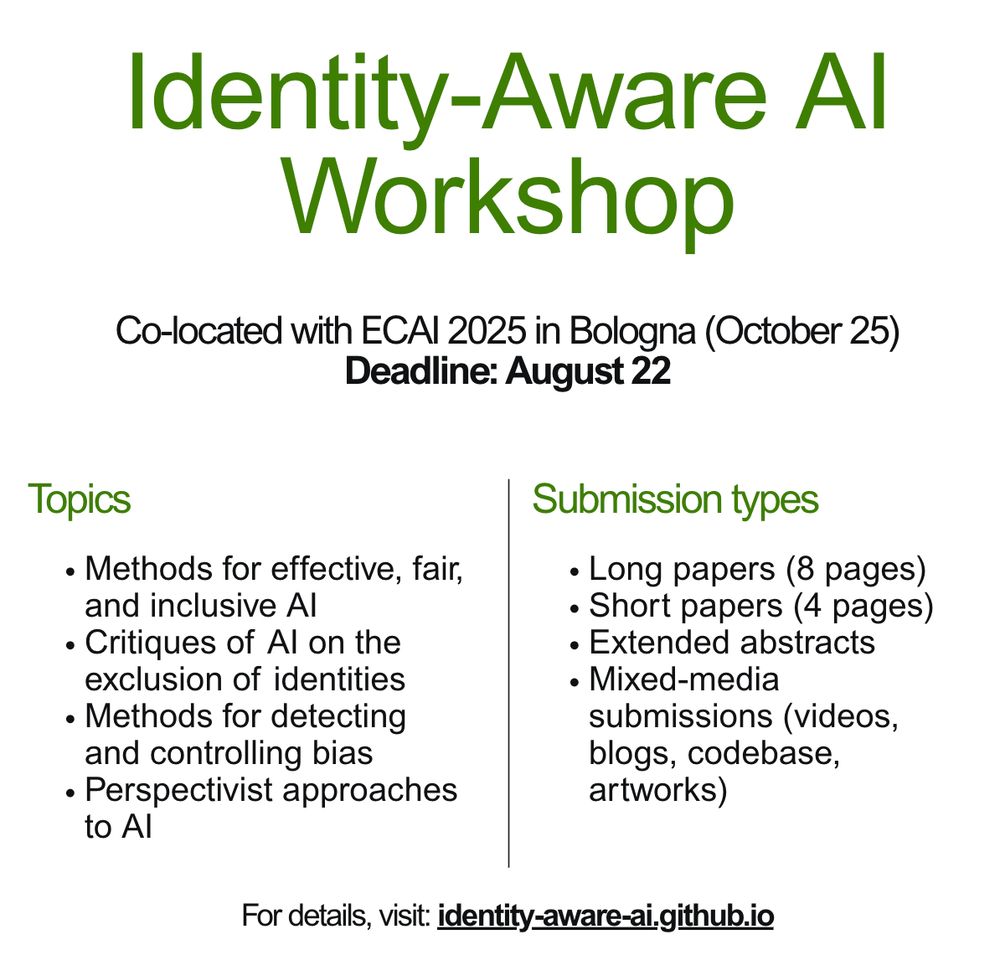

We're organizing the Identity-Aware AI workshop at #ECAI2025 in Bologna on Oct 25.

Deadline: Aug 22

Website: identity-aware-ai.github.io

Focusing on non-aggregated datasets and multi-perspective modeling, with sessions on labeling, modeling, evaluation, and applications.

nlperspectives.di.unito.it

Please spread the word that I'm recruiting prospective PhD students: lucy3.notion.site/for-prospect...

Join our @colmweb.org workshop on comprehensively evaluating LMs.

Deadline: June 23rd

CfP: shorturl.at/sBomu

Page: shorturl.at/FT3fX

We're excited to see your insights and methods!!

See you in Montréal 🇨🇦 #nlproc #interpretability

www.dfg.de/en/news/news...