eshaannichani.com

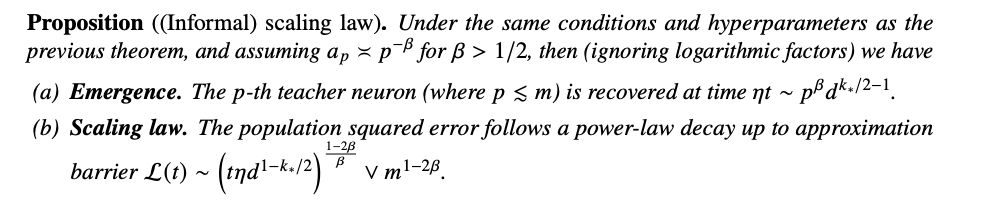

Matches the functional form of empirical neural scaling laws (e.g., Chinchilla)! (7/10)
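
For reference, the Chinchilla parametric fit is usually written as below, with N the parameter count, D the number of training tokens, and E, A, B, α, β fitted constants. Reading N as the student width m and D as the sample size is an assumption made here for illustration, not something stated in the thread.

$$
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
$$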

Main Theorem: to recover the top P ≤ P* = d^c directions, student width m = Θ(P*) and sample size poly(d, 1/a_{P*}, P) suffice.

Polynomial complexity with a single-stage algorithm! (6/10)

Prior works either assume P = O(1) (multi-index model) or require complexity exponential in κ=a_1/a_P.

But to get a smooth scaling law, we need to handle many tasks (P→∞) with varying strengths (κ→∞) (5/10)

- The cumulative loss can be decomposed into many distinct skills, each of which individually exhibits emergence.

- The juxtaposition of many learning curves at varying timescales leads to a smooth power law in the loss. (3/10)
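
A toy numerical sketch of this picture, not the paper's construction: assume skill p contributes p^(-2β) to the loss until it is learned, and that it is learned abruptly at step p^γ. The step-function emergence, the exponents `beta` and `gamma`, and the name `toy_loss` are all hypothetical choices for illustration. Under these assumptions the summed loss should decay roughly as t^(-(2β-1)/γ).

```python
import numpy as np

def toy_loss(steps, num_skills=100_000, beta=1.0, gamma=2.0):
    """Cumulative loss from many skills that each emerge abruptly.

    Assumptions (illustrative only): skill p adds p**(-2*beta) to the
    loss while unlearned, and is learned instantly at step p**gamma.
    """
    p = np.arange(1, num_skills + 1, dtype=float)
    weight = p ** (-2.0 * beta)       # loss contribution of each skill
    emergence_time = p ** gamma       # step at which each skill is learned
    steps = np.asarray(steps, dtype=float)
    # unlearned[i, j] is True if skill j is still unlearned at steps[i]
    unlearned = emergence_time[None, :] > steps[:, None]
    return (unlearned * weight[None, :]).sum(axis=1)

beta, gamma = 1.0, 2.0
steps = np.logspace(2, 6, 20)
loss = toy_loss(steps, beta=beta, gamma=gamma)

# Slope of log(loss) against log(steps), compared with the heuristic exponent
slope = np.polyfit(np.log(steps), np.log(loss), 1)[0]
predicted = -(2.0 * beta - 1.0) / gamma
print(f"fitted slope: {slope:.3f}, heuristic prediction: {predicted:.3f}")
```

Each individual curve here is a sharp step, yet the sum is close to a straight line on a log-log plot because the emergence times are spread across many scales.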

Yet “neural scaling laws” posit that increasing compute leads to a predictable power-law decay in the loss.

How do we reconcile these two phenomena? (2/10)

@jasondeanlee.bsky.social!

We prove a neural scaling law in the SGD learning of extensive width two-layer neural networks.

arxiv.org/abs/2504.19983

🧵below (1/10)
