Building Latent Scope to visualize unstructured data through the lens of ML

github.com/enjalot/latent-scope

I can load a dataset from Hugging Face (HF) like this:

import pandas as pd
from datasets import load_dataset

dataset = load_dataset("Marqo/marqo-ge-sample", split="google_shopping")
df = pd.DataFrame(dataset)

but I need to convert the images to bytes before I can save it with:

df.to_parquet("sample.parquet")
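Here is a minimal sketch of that conversion. It assumes the image column holds PIL-style objects with a `save(fp, format=...)` method, which is how Hugging Face `datasets` decodes image features. The helper names `image_to_bytes` / `images_to_bytes` and the PNG default are illustrative choices, not part of any library.

```python
import io
from typing import Any, List, Optional


def image_to_bytes(img: Any, fmt: str = "PNG") -> bytes:
    """Serialize one image to raw bytes.

    Assumes `img` has a PIL-style save(fp, format=...) method
    (e.g. PIL.Image.Image, as returned by Hugging Face `datasets`).
    """
    buf = io.BytesIO()
    img.save(buf, format=fmt)  # write the encoded image into memory
    return buf.getvalue()


def images_to_bytes(values: List[Any], fmt: str = "PNG") -> List[Optional[bytes]]:
    """Convert every image in a column to bytes, keeping missing values as None."""
    return [None if v is None else image_to_bytes(v, fmt) for v in values]
```

Assuming the column is called `image` (check your dataset's schema), usage would be `df["image"] = images_to_bytes(df["image"].tolist())` followed by `df.to_parquet("sample.parquet")`. Bytes columns serialize cleanly to Parquet, and a stored image can be decoded again with `PIL.Image.open(io.BytesIO(b))`.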

musing with @infowetrust.com

image from distill.pub/2017/aia/

register today!

hiddenstates.org

It's a one-day unconference gathering researchers, designers, prototypers and engineers interested in pushing the boundaries of AI interfaces, going below the API and working with the hidden states.

hiddenstates.org

When you embed new data, like the question for a RAG query, you can see where on the map it lands.
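If the map was built with the umap-learn library, a fitted `UMAP` object has a `transform` method that projects new embeddings. The sketch below is a simpler stand-in, not necessarily what Latent Scope does: it estimates where a new embedding lands by averaging the 2D positions of its k nearest existing neighbors in embedding space. The function name `place_on_map` and the default `k=3` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def place_on_map(
    query: Sequence[float],
    embeddings: List[Sequence[float]],
    coords: List[Tuple[float, float]],
    k: int = 3,
) -> Tuple[float, float]:
    """Estimate a 2D map position for a new embedding.

    Finds the k existing embeddings closest to `query` (Euclidean distance)
    and returns the mean of their 2D map coordinates.
    """
    if not embeddings or len(embeddings) != len(coords):
        raise ValueError("need one 2D coordinate per existing embedding")
    # Rank existing points by distance to the query in embedding space.
    nearest = sorted(range(len(embeddings)), key=lambda i: math.dist(query, embeddings[i]))[:k]
    # Average the 2D positions of those neighbors.
    x = sum(coords[i][0] for i in nearest) / len(nearest)
    y = sum(coords[i][1] for i in nearest) / len(nearest)
    return (x, y)
```

This keeps the new point inside the neighborhood it resembles most, which is the intuition behind seeing "where on the map it lands," though it can never place a point outside the hull of its neighbors the way a real projection can.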

As you add more points, a map starts to form, with clusters of similar data spread out before you.

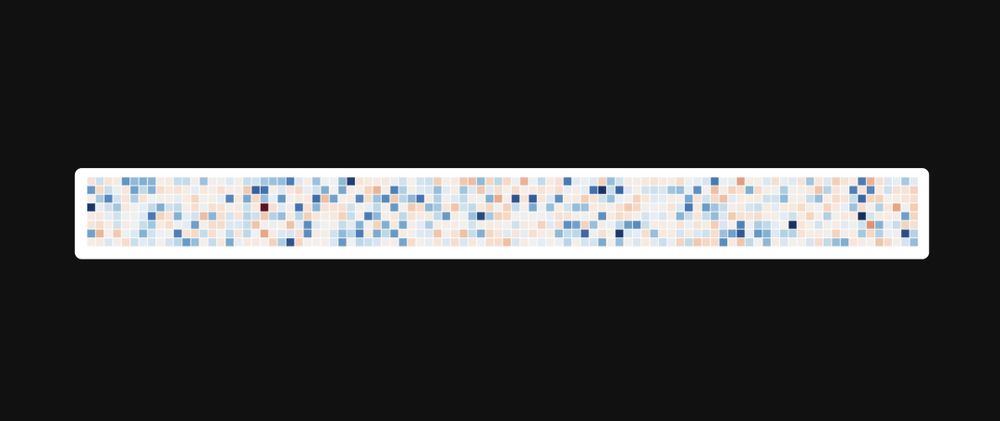

We can use UMAP (Uniform Manifold Approximation and Projection) to place similar embeddings close together in 2D space, so two passages with similar high-dimensional representations will show up near each other on the 2D map.
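UMAP itself usually comes from a library such as umap-learn (for example `umap.UMAP(n_components=2).fit_transform(X)`), so the sketch below does not reimplement it. Instead it checks the property described above: for each point, what fraction of its k nearest neighbors in the high-dimensional space are also among its k nearest neighbors in the 2D layout. The metric name `neighbor_preservation` and the use of Euclidean distance in both spaces are assumptions for illustration.

```python
import math
from typing import List, Sequence, Set


def _knn(points: List[Sequence[float]], i: int, k: int) -> Set[int]:
    """Indices of the k points nearest to points[i] (excluding itself)."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.dist(points[i], points[j]))
    return set(others[:k])


def neighbor_preservation(
    high_dim: List[Sequence[float]], low_dim: List[Sequence[float]], k: int = 5
) -> float:
    """Average fraction of high-dimensional k-nearest neighbors kept in 2D.

    1.0 means every point keeps all its neighbors; 0.0 means none are kept.
    """
    n = len(high_dim)
    if n != len(low_dim) or not 0 < k < n:
        raise ValueError("need matching point counts and 0 < k < number of points")
    total = 0.0
    for i in range(n):
        total += len(_knn(high_dim, i, k) & _knn(low_dim, i, k)) / k
    return total / n
```

A layout that keeps similar passages together scores near 1.0; a random scatter of the same points scores much lower.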

The first and most familiar tool is cosine similarity, which measures how similar two high-dimensional vectors are by the cosine of the angle between them.
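As a plain-Python sketch, cosine similarity is the dot product of two vectors divided by the product of their lengths. In practice you would typically use NumPy or your vector store's built-in version; the function name here is just illustrative.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|), ranging from -1 to 1."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)
```

Because it only looks at direction, `[1, 2]` and `[2, 4]` score 1.0 (same direction), orthogonal vectors score 0.0, and opposite vectors score -1.0.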

This representation has some special properties, namely that it "represents" the patterns the model has found in the data.

We can get a representation for any data (that our model can handle).

That list of numbers is an embedding, AKA a latent vector. For a given model it's always the same length (its dimensionality).
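To show the fixed-length property without pulling in a real model, here is a toy stand-in using the hashing trick. It is not a learned embedding and captures no meaning; it only demonstrates that any input, short or long, maps to a vector whose length is set by the model (`dim` here, a hypothetical parameter).

```python
import hashlib
from typing import List


def toy_embed(text: str, dim: int = 8) -> List[float]:
    """Map any text to a fixed-length vector by hashing its words into `dim` buckets."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # md5 gives a stable hash across runs (Python's built-in hash() is randomized per process).
        h = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16)
        vec[h % dim] += 1.0
    return vec
```

`toy_embed("hi")` and `toy_embed("a much longer sentence with many words")` both return 8 numbers. A real embedding model behaves the same way with respect to length, but its numbers come from learned weights.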

The basic idea is that you take your text and split it into chunks.
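A minimal chunking sketch: fixed-size windows of words with some overlap, so a sentence cut at a boundary still appears whole in one chunk. The window and overlap sizes are arbitrary assumptions; real pipelines often chunk by tokens, sentences, or paragraphs instead.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into chunks of up to `chunk_size` words,
    with `overlap` words shared between consecutive chunks."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # last window reached the end
            break
    return chunks
```

For example, `chunk_text("a b c d e f g", chunk_size=3, overlap=1)` yields `["a b c", "c d e", "e f g"]`. Each chunk is then embedded separately.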

The weights are crystallized patterns whose structure emerges from the crushing pressures of backpropagation.

By shining a piece of data through this lens you see the patterns diffracted in the hidden states.

Pretty geographically correlated: counties from the same state end up in similar clusters, or share clusters with nearby states.

Read more details here: enjalot.substack.com

micro.magnet.fsu.edu/primer/java/...

Even before that, I was behind the microscope after school, getting paid in computer parts for taking pictures of pond life (my first NVIDIA GPU in 2001!)

Throughout school I worked part-time making GIS web maps.

I've written a good bit about data visualization with d3.js and building community here:

medium.com/@enjalot

I'm a prototyper and Data Alchemist interested in using machine learning for data visualization.

I'm building github.com/enjalot/late... using the lessons learned from co-authoring these 4 distill.pub papers