📅 Thursday, Nov 6th | 11 AM - 12 PM EST

🎙 Speaker: Emmanouil Benetos ( @emmanouilb.bsky.social ) - Queen Mary University of London

📖 Topic: "Machine learning paradigms for music and audio understanding"

🔗 Details: (poonehmousavi.github.io/rg)

🕒 3–4 PM, 5th November

📍 TBC

We’re excited to welcome Dr. Masataka Goto, Senior Principal Researcher at the National Institute of Advanced Industrial Science and Technology (AIST), Japan.

Streaming dcase.community/workshop2025...

@c4dm.bsky.social @emmanouilb.bsky.social @preparedmindslab.bsky.social @elisabettaversace.bsky.social

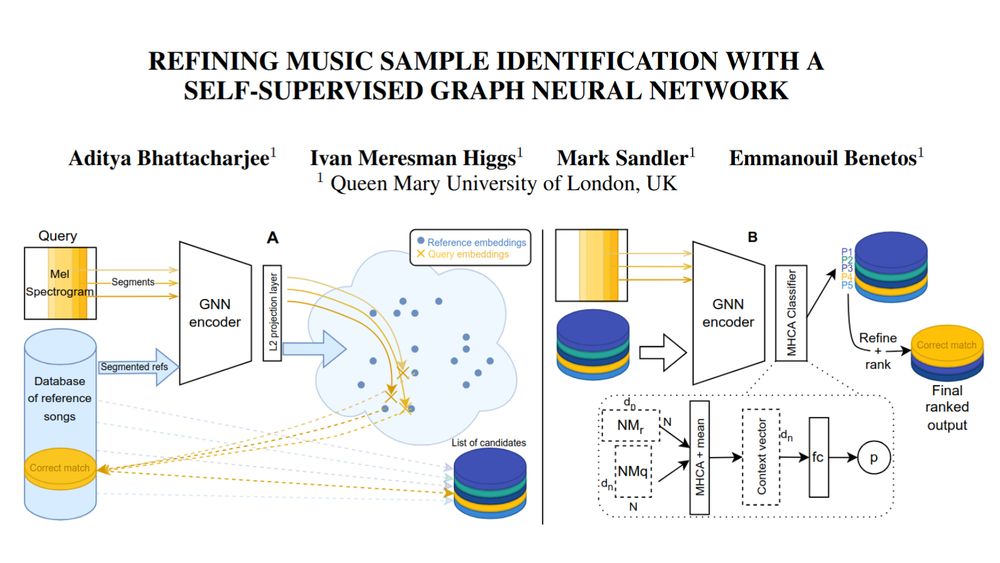

We propose an architecture that can detect music samples that have been reused in new compositions, even after pitch-shifting, time-stretching, and other transformations!🧵

ai4musicians.org/transcriptio...

#C4DM #MusicAI #AMTChallenge2025.

For a list of the articles, please refer to:

www.c4dm.eecs.qmul.ac.uk/news/2025-03...

More info at:

www.c4dm.eecs.qmul.ac.uk/news/2024-03...
