Neurosymbolic Machine Learning, Generative Models, commonsense reasoning

https://www.emilevankrieken.com/

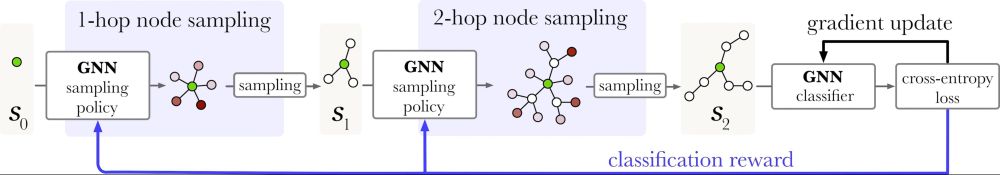

Make GNNs work on large graphs by learning an expressive and adaptive sampler 🚀

Excellent work led by Taraneh Younesian now in TMLR!

openreview.net/forum?id=QI0...

This was what it said just before the deadline, so people submitting to NeurIPS couldn't have known about the mandatory physical presence 🤔

Our loss function reconstructs the output dimensions separately, decomposing the problem!

Every unmasking step is an independent distribution… Neurosymbolic Diffusion Models (NeSyDMs) exploit this for efficient probabilistic reasoning while guaranteeing global dependencies 🚀

x.com/EmilevanKrie...

The concept extraction phase is completely unsupervised: No access to true concepts during training!

Read more 👇

- Better matching (I hope!)

- 100 -> 400 papers to bid on

@rohit-saxena.bsky.social @aryopg.bsky.social @neuralnoise.com

But let's make it more efficient than $3200 per query 🙃

@fchollet.bsky.social

He's organising a nice workshop today on the intersection of LLMs and linguistics. He just kicked it off with this work, done with (among others) @wzuidema.bsky.social, on using LLMs to improve our understanding of adjective order

Just right-click on a tensor in your variables view!
