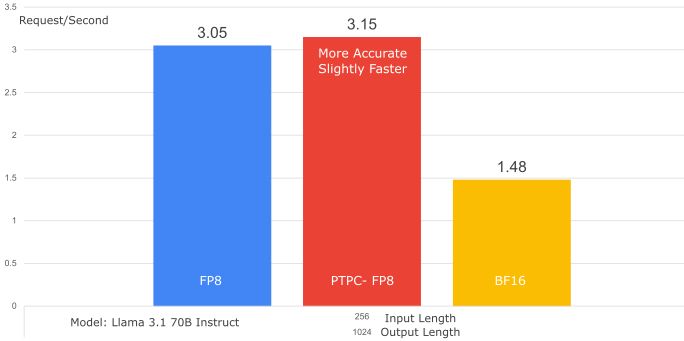

- Per-Token Activation Scaling: Each token gets its own scaling factor

- Per-Channel Weight Scaling: Each weight column (output channel) gets its own scaling factor

Delivers FP8 speed with accuracy closer to BF16 – the best FP8 option for ROCm! [2/2]
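
To make the two scaling schemes concrete, here is a minimal pure-Python sketch. It is not vLLM's or ROCm's kernel. The helper names (`fake_fp8`, `quantize_rows`, `fp8_matmul`) are hypothetical, the FP8 rounding is only crudely simulated, and the 448 maximum assumes the OCP E4M3 format (hardware variants may use a different range).

```python
import math

# Assumption: largest finite value of the OCP E4M3 format; other FP8 variants differ.
FP8_MAX = 448.0


def fake_fp8(x):
    """Crude FP8 stand-in: clamp, then keep 4 significant bits (ignores subnormals)."""
    if x == 0.0:
        return 0.0
    x = max(-FP8_MAX, min(FP8_MAX, x))
    mantissa, exponent = math.frexp(x)  # x = mantissa * 2**exponent, 0.5 <= |mantissa| < 1
    return math.ldexp(round(mantissa * 16) / 16, exponent)


def transpose(mat):
    return [list(col) for col in zip(*mat)]


def quantize_rows(mat):
    """Give every row its own scale so that the row's largest value maps to FP8_MAX."""
    scales = [(max(abs(v) for v in row) / FP8_MAX) or 1.0 for row in mat]
    quantized = [[fake_fp8(v / s) for v in row] for row, s in zip(mat, scales)]
    return quantized, scales


def fp8_matmul(x, w):
    """x: [tokens][in], w: [in][out]. Returns an approximation of x @ w."""
    xq, token_scales = quantize_rows(x)                # per-token activation scaling
    wq_t, chan_scales = quantize_rows(transpose(w))    # per-channel (column) weight scaling
    return [
        [
            sum(a * b for a, b in zip(x_row, w_col)) * s_tok * s_ch
            for w_col, s_ch in zip(wq_t, chan_scales)
        ]
        for x_row, s_tok in zip(xq, token_scales)
    ]
```

Because each token and each output channel is rescaled independently, one outlier token or one large weight column does not squeeze the rest of the tensor into a handful of FP8 values, which is the intuition behind the accuracy claim above.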

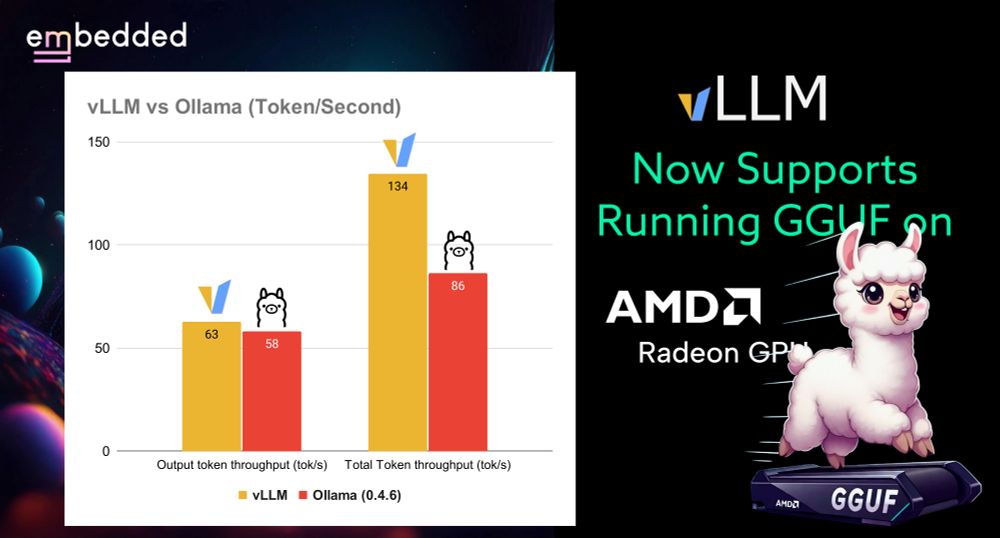

Check it out: embeddedllm.com/blog/vllm-no...

What's your experience with vLLM on AMD? Any features you want to see next?

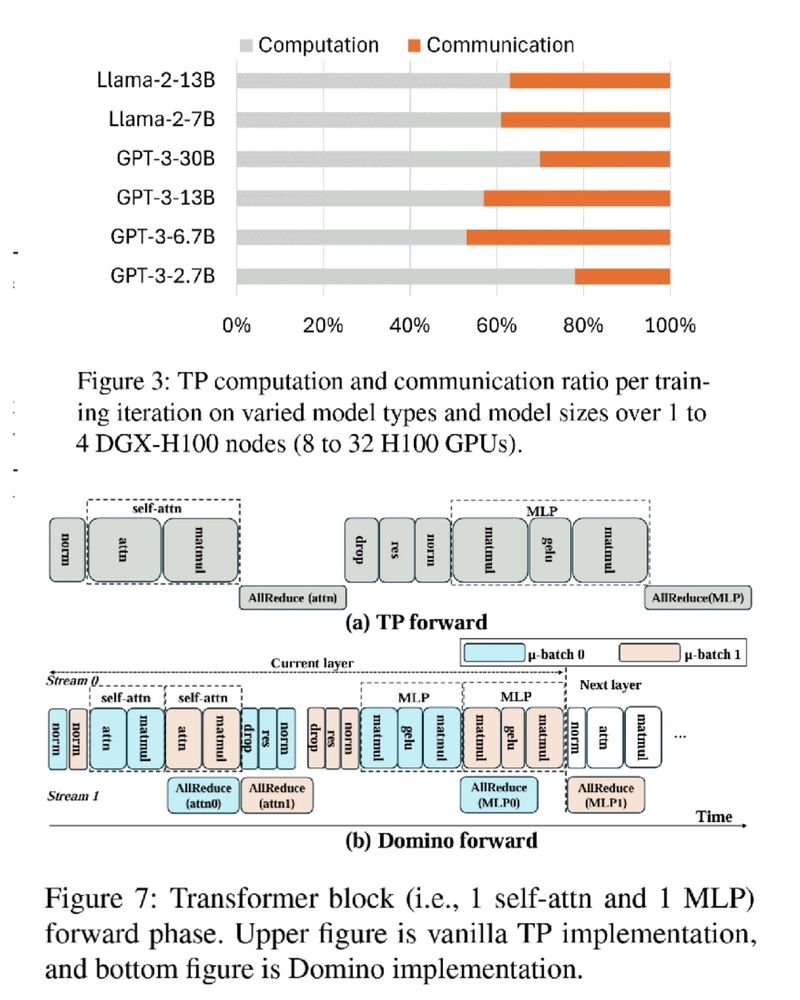

DeepSpeed Domino, with a new tensor parallelism engine, minimizes communication overhead for faster LLM training. 🚀

✅ Near-complete communication hiding

✅ Multi-node scalable solution

Blog: github.com/microsoft/De...

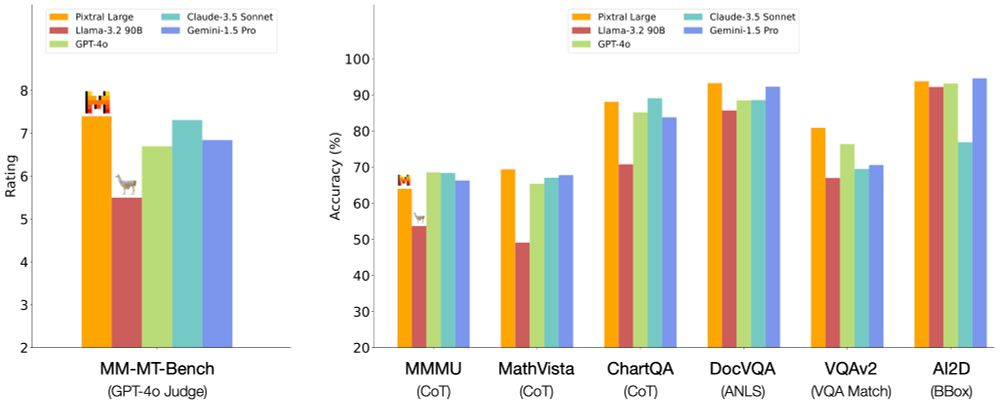

Run Pixtral Large with multiple input images from day 0 using vLLM.

Install vLLM:

pip install -U vllm

Run Pixtral Large:

vllm serve mistralai/Pixtral-Large-Instruct-2411 --tokenizer_mode mistral --limit_mm_per_prompt 'image=10' --tensor-parallel-size 8
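
Once the server is up, a multi-image request could look roughly like the sketch below. It assumes vLLM's OpenAI-compatible endpoint on the default `http://localhost:8000/v1`. The helper names (`build_request`, `send`) and any image URLs you pass in are hypothetical, and the 10-image cap simply mirrors the `image=10` limit from the serve command.

```python
import json
import urllib.request

MODEL = "mistralai/Pixtral-Large-Instruct-2411"
MAX_IMAGES = 10  # mirrors --limit_mm_per_prompt 'image=10' in the serve command


def build_request(prompt, image_urls, model=MODEL):
    """Build a chat-completions payload with one text part and several image parts."""
    if len(image_urls) > MAX_IMAGES:
        raise ValueError(f"server is configured for at most {MAX_IMAGES} images per prompt")
    content = [{"type": "text", "text": prompt}]
    content += [{"type": "image_url", "image_url": {"url": url}} for url in image_urls]
    return {"model": model, "messages": [{"role": "user", "content": content}]}


def send(payload, base_url="http://localhost:8000/v1"):
    """POST the payload to the server; base_url is an assumed default, adjust as needed."""
    request = urllib.request.Request(
        base_url + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `send(build_request("Compare these images.", urls))` with a list of reachable image URLs should return the usual chat-completions JSON, provided the server from the command above is running.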
