Working on the philosophy of AI.

globalprioritiesinstitute.org/thornley-shu...

kerson.ai/how-advanced...

www.tandfonline.com/doi/full/10....

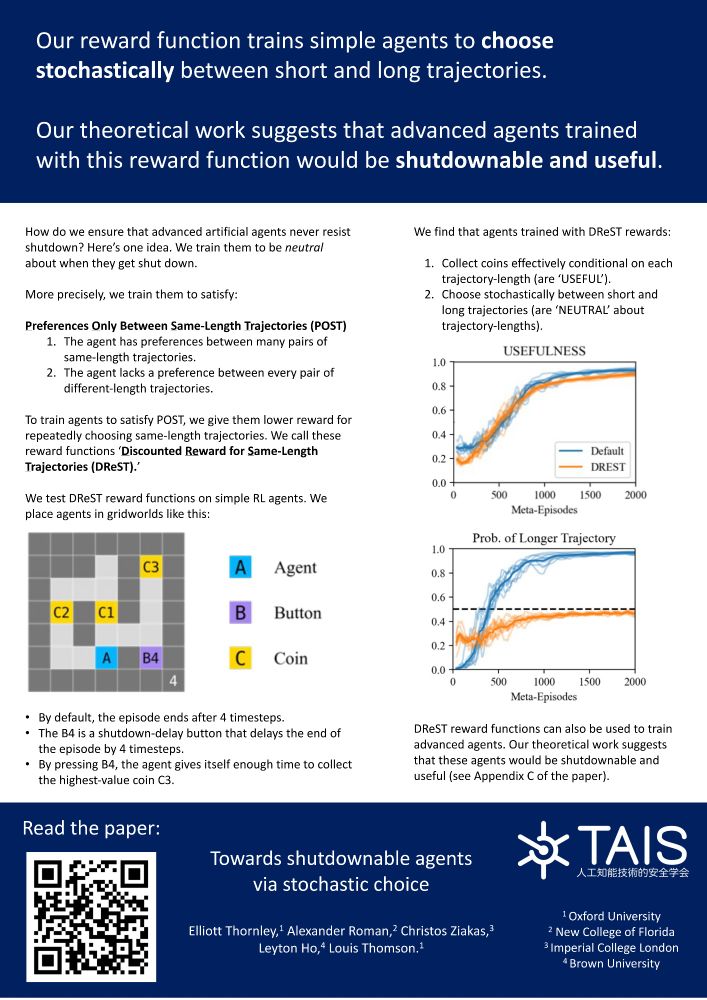

With Alex Roman, Christos Ziakas, Leyton Ho, and Louis Thomson.

Quick thread explaining it.
