1. Prompt sensitivity is HUGE! Performance varies dramatically with small changes (e.g., OLMo's accuracy on HellaSwag ranges from 1% to 99% simply by changing prompt elements like phrasing, enumerators, and answer order).
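To make "small changes" concrete, here is a minimal sketch of how prompt variants along those three dimensions (phrasing, enumerators, answer order) could be enumerated for one multiple-choice item. The templates, enumerator sets, example question, and the `prompt_variants` helper are all hypothetical, made up for illustration; they are not DOVE's actual prompts or code.

```python
from itertools import permutations, product

# Hypothetical prompt dimensions, chosen only for illustration.
PHRASINGS = [
    "Question: {q}\n{options}\nAnswer:",
    "Pick the best ending.\n{q}\n{options}\nYour choice:",
]
ENUMERATORS = [["A", "B", "C"], ["1", "2", "3"]]


def prompt_variants(question, answers):
    """Yield one prompt per combination of phrasing, enumerator style, and answer order."""
    for template, labels, order in product(
        PHRASINGS, ENUMERATORS, permutations(answers)
    ):
        options = "\n".join(
            f"{label}. {text}" for label, text in zip(labels, order)
        )
        yield template.format(q=question, options=options)


# Made-up example item: 2 phrasings x 2 enumerator styles x 6 answer orderings.
variants = list(
    prompt_variants(
        "She picked up the guitar and",
        ["started to play.", "ate it.", "flew away."],
    )
)
print(len(variants))  # 24
```

Even this toy setup yields 24 distinct prompts for a single question, which hints at why scoring a model on just one of them can be misleading.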

Talk to us about data you'd like to contribute or request evaluations you want to see added to 🕊️ DOVE!

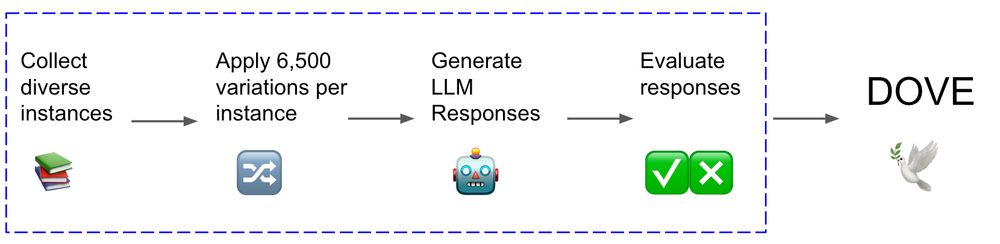

We bring you 🕊️ DOVE, a massive (250M!) collection of LLM outputs

on different prompts, domains, tokens, models...

Join our community effort to expand it with YOUR model predictions & become a co-author!
