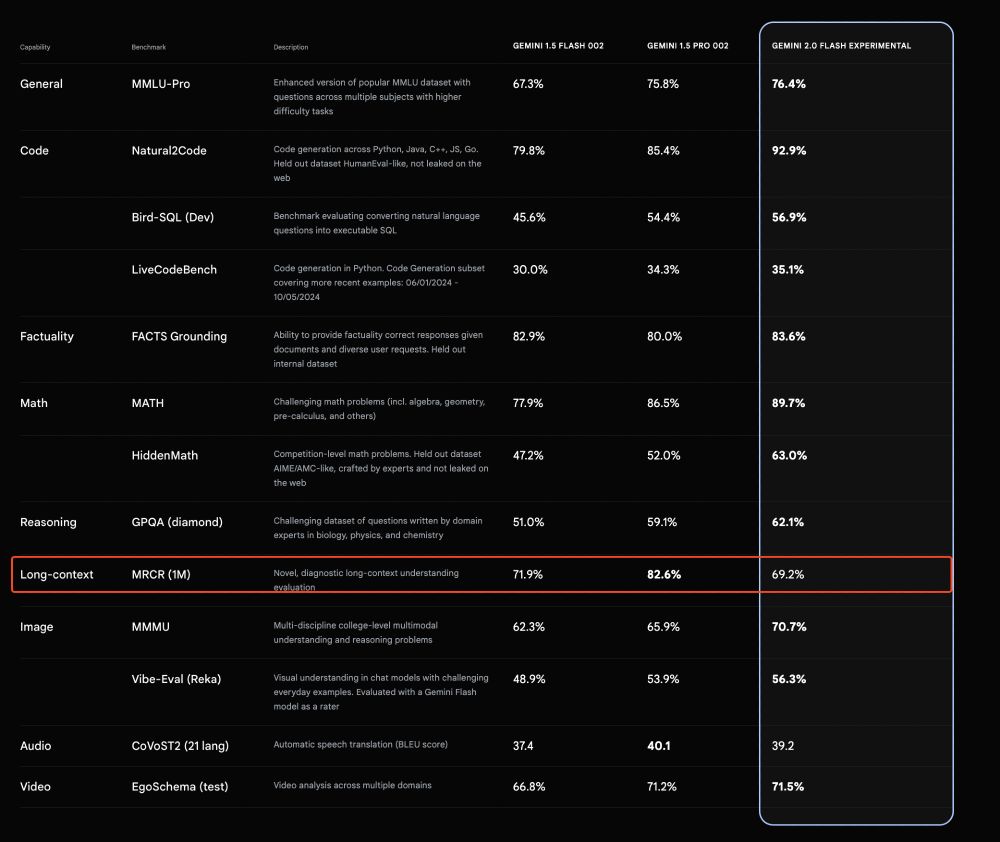

One odd thing is that the model seems to have lost some long-context ability compared to Flash 1.5. If any Google friends could share insights, I'd love to hear them!

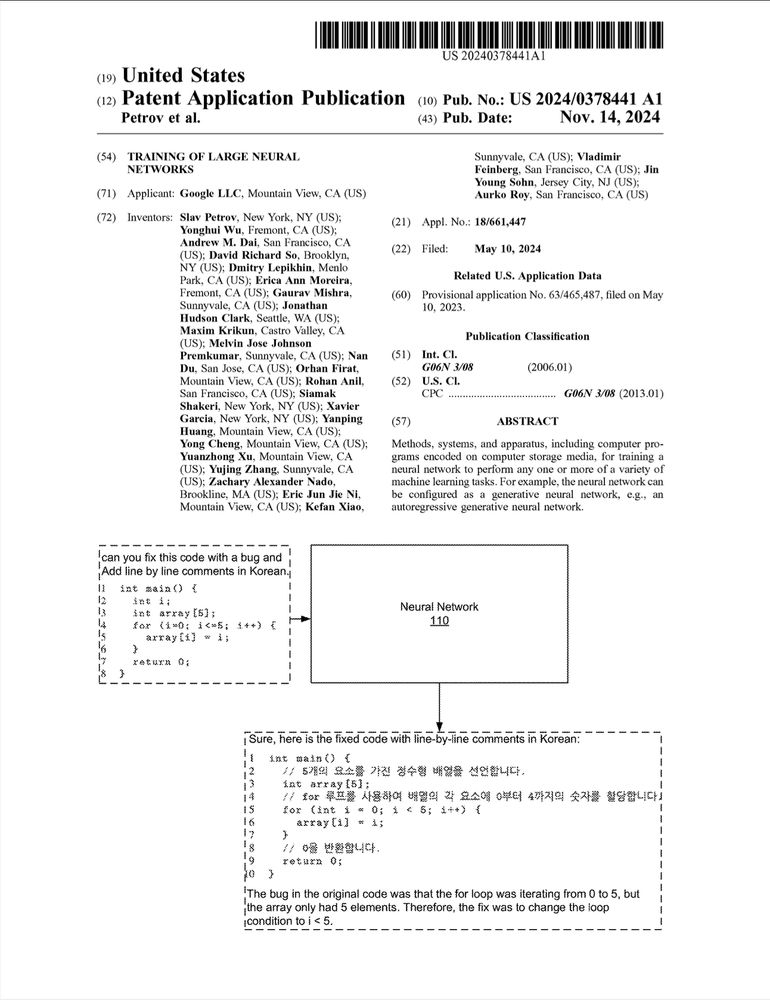

I don't know if this gives much information, but from a quick skim it seems that:

- For pre-training, they use not only the "causal language modeling task" but also "span corruption" and "prefix modeling". (ref [0805]-[0091])
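The post only names these three objectives and says nothing about how they are implemented. As a rough illustration, here is a minimal Python sketch of how training examples are commonly built for each one, assuming a T5-style formulation of span corruption and a standard prefix-LM attention pattern. The function names and the `<extra_id_N>` sentinel format are hypothetical, and this is not a claim about how Google actually does it.

```python
from typing import List, Tuple

Tokens = List[str]


def sentinel(k: int) -> str:
    # Hypothetical sentinel format, loosely modeled on T5-style "<extra_id_N>" tokens.
    return f"<extra_id_{k}>"


def causal_lm_example(tokens: Tokens) -> Tuple[Tokens, Tokens]:
    """Causal LM: position i sees tokens[:i + 1] and predicts tokens[i + 1]."""
    return tokens[:-1], tokens[1:]


def prefix_lm_example(tokens: Tokens, prefix_len: int) -> Tuple[Tokens, Tokens, List[int]]:
    """Prefix modeling: the first `prefix_len` tokens are context only.

    Returns (inputs, targets, loss_mask). loss_mask[i] is 1 only when
    targets[i] lies after the prefix. Prefix positions can see later prefix
    tokens, so predicting those would leak the answer; hence no loss there.
    """
    inputs, targets = tokens[:-1], tokens[1:]
    # targets[i] == tokens[i + 1], which is a suffix token iff i + 1 >= prefix_len.
    loss_mask = [1 if i + 1 >= prefix_len else 0 for i in range(len(targets))]
    return inputs, targets, loss_mask


def prefix_lm_attention(n: int, prefix_len: int) -> List[List[int]]:
    """allowed[i][j] == 1 if position i may attend to position j.

    Every position sees the whole prefix (bidirectional inside the prefix);
    positions after the prefix attend causally.
    """
    return [[1 if (j < prefix_len or j <= i) else 0 for j in range(n)] for i in range(n)]


def span_corruption_example(tokens: Tokens, spans: List[Tuple[int, int]]) -> Tuple[Tokens, Tokens]:
    """Span corruption: replace each half-open [start, end) span with a sentinel
    in the input; the target lists each sentinel followed by the tokens it hid.
    `spans` must be sorted and non-overlapping."""
    inputs: Tokens = []
    targets: Tokens = []
    cursor = 0
    for k, (start, end) in enumerate(spans):
        inputs.extend(tokens[cursor:start])
        inputs.append(sentinel(k))
        targets.append(sentinel(k))
        targets.extend(tokens[start:end])
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(sentinel(len(spans)))  # closing sentinel ends the target sequence
    return inputs, targets


if __name__ == "__main__":
    toks = ["The", "cat", "sat", "on", "the", "mat"]
    print(causal_lm_example(toks))
    print(prefix_lm_example(toks, prefix_len=3))
    print(prefix_lm_attention(5, prefix_len=3))
    print(span_corruption_example(toks, spans=[(1, 2), (4, 5)]))
```

The spans are passed in explicitly rather than sampled at random so the output is deterministic; a real pipeline would typically sample span positions and lengths (e.g. a fixed corruption rate), which is left out here.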

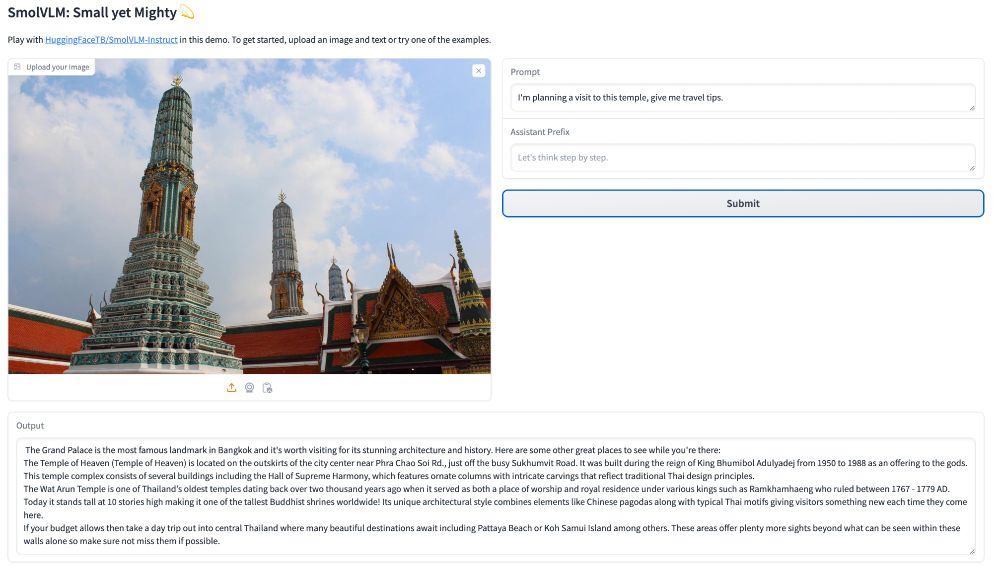

It uses a preliminary 16k context version of SmolLM2 to tackle long-context vision documents and higher-res images.

And yes, we’re cooking up versions with bigger context lengths. 👨‍🍳

Try it yourself here: huggingface.co/spaces/Huggi...

Fully open-source. We’ll release a blog post soon detailing how we trained it. I'm also super excited about all the demos that will come out in the next few days, and I'm especially looking forward to people testing it with entropix 🐸