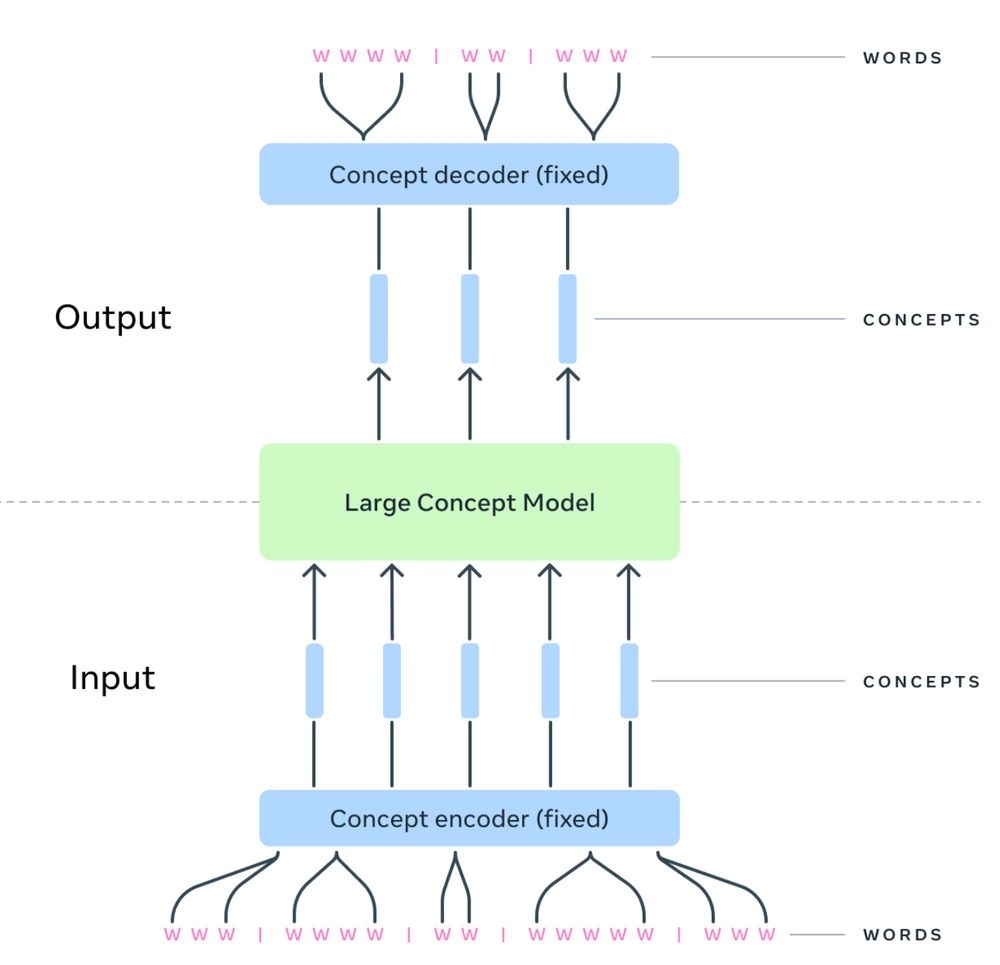

At FAIR (AI at Meta), we're committed to open research! The training code for our LCMs is freely available. I’m excited about the potential of concept-based language models and what new capabilities they can unlock. github.com/facebookrese...
