"We detected several successful and unsuccessful attempts at “reward hacking” by o3.

1/7

futureoflife.org/pro...

Please contact me if you'd like to make long or short video content; I would be happy to collaborate or give tips!

- algorithmic gains for models double every 8 months

- amount of compute used grows 10x every two years

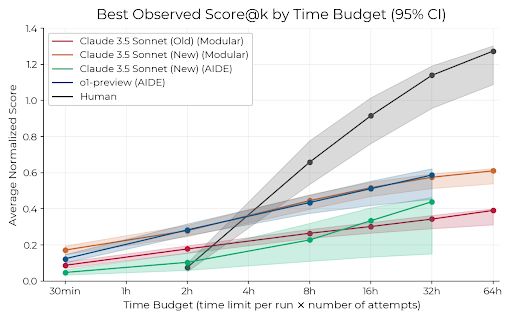

- the length of tasks agents can perform is doubling every 7 months

- 50.8% of global VC funds are going to AI-focused companies
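
To compare the three growth figures above on one scale, here is a minimal sketch that converts each into a per-year growth factor. It assumes smooth exponential growth at the stated rates, which the list itself does not claim; the function and variable names are illustrative only.

```python
def annual_factor_from_doubling(months_to_double: float) -> float:
    """Growth factor over 12 months for a quantity that doubles
    every `months_to_double` months (assumes steady exponential growth)."""
    return 2 ** (12 / months_to_double)


def annual_factor_from_multiple(multiple: float, years: float) -> float:
    """Growth factor over 1 year for a quantity that grows by
    `multiple` over `years` years (assumes steady exponential growth)."""
    return multiple ** (1 / years)


# Figures taken from the list above; names are hypothetical.
algorithmic = annual_factor_from_doubling(8)   # doubles every 8 months
compute = annual_factor_from_multiple(10, 2)   # 10x every two years
task_length = annual_factor_from_doubling(7)   # doubles every 7 months

for label, factor in [
    ("algorithmic gains", algorithmic),
    ("compute used", compute),
    ("agent task length", task_length),
]:
    print(f"{label}: about {factor:.2f}x per year")
```

Under that assumption, the three figures come out to roughly 2.8x, 3.2x, and 3.3x per year respectively, so they describe growth rates of similar magnitude.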

- Robustness: no malicious goals

- Misuse risk: human provides malicious goals

- Existential risk: AI provides malicious goals

www.nature.com/articles/d41...

arxiv.org/pdf/2402.08797

go.bsky.app/EkcDcjA

manifund.org/projects/240...

aisafetychina.substack.com