Former Research Associate at Imperial College London.

Bio/Clinical NLP, Food/Financial AI

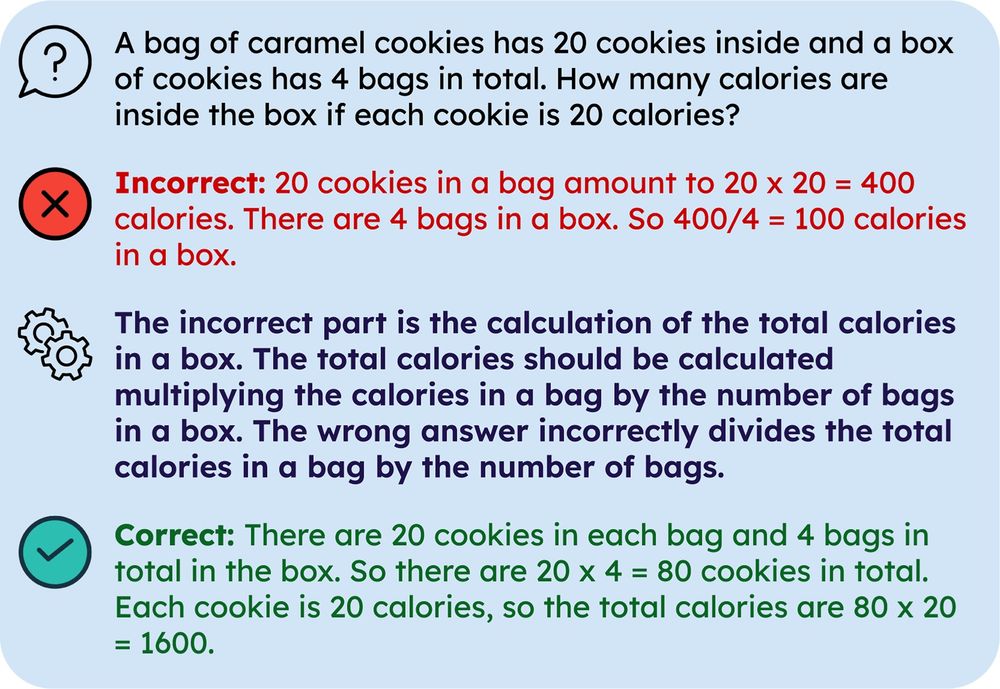

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help, in fact they hurt performance!

🧵

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help, in fact they hurt performance!

🧵