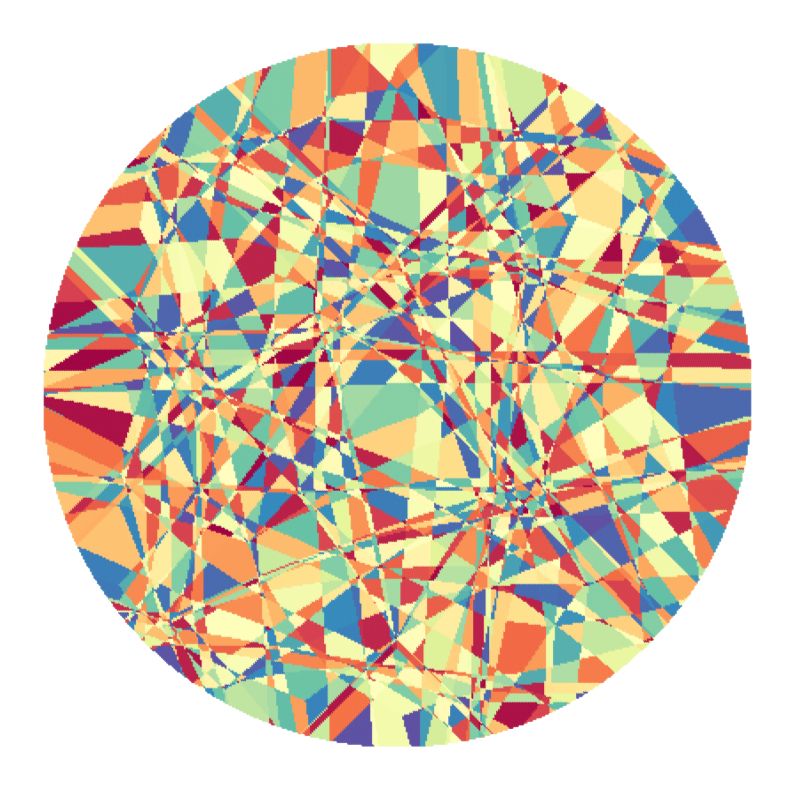

1. The approximation error is lower bounded by an expression that depends on the inverse square of the number of causal pieces. More pieces allow for less error (which does not mean better generalization, though)!
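To get a feel for the inverse-square scaling, here is a toy sketch. It is not the bound from the preprint: it only interpolates the assumed target f(x) = x² on [0, 1] with equal-width linear segments, which stand in for "pieces". The function name `max_interp_error` and the sampling resolution are made up for illustration.

```python
def max_interp_error(n_pieces, samples_per_piece=200):
    """Largest gap between f(x) = x**2 and its piecewise-linear
    interpolant on [0, 1], using n_pieces equal-width segments."""
    h = 1.0 / n_pieces
    worst = 0.0
    for i in range(n_pieces):
        left, right = i * h, (i + 1) * h
        for k in range(samples_per_piece + 1):
            t = k / samples_per_piece
            x = left + t * h
            # Value of the straight line joining the two segment endpoints.
            chord = (1 - t) * left**2 + t * right**2
            worst = max(worst, abs(chord - x**2))
    return worst

# Toy check: doubling the number of pieces should cut the error by about 4x.
errors = {n: max_interp_error(n) for n in (1, 2, 4, 8, 16)}
for n, err in errors.items():
    print(n, err, err * n**2)
```

In this toy setting the product `err * n**2` stays essentially constant (about 1/4), so the error shrinks like one over the squared number of pieces. How that carries over to causal pieces in actual networks is what the preprint addresses.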

That's all the differently coloured regions shown above - one colour 🟩 = 🧩 one piece!

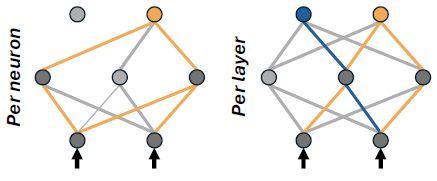

In our new preprint, @pc-pet.bsky.social and I introduce the concept of "Causal Pieces" to approach this question!
