We retrieve evidence from a patient’s record, visualize how it informs a prediction, and test it in a realistic setting. 👇 (1/6)

arxiv.org/abs/2402.10109

w/ @byron.bsky.social and @jwvdm.bsky.social

Can we craft arbitrary high-level features without training? 👇 (1/6)

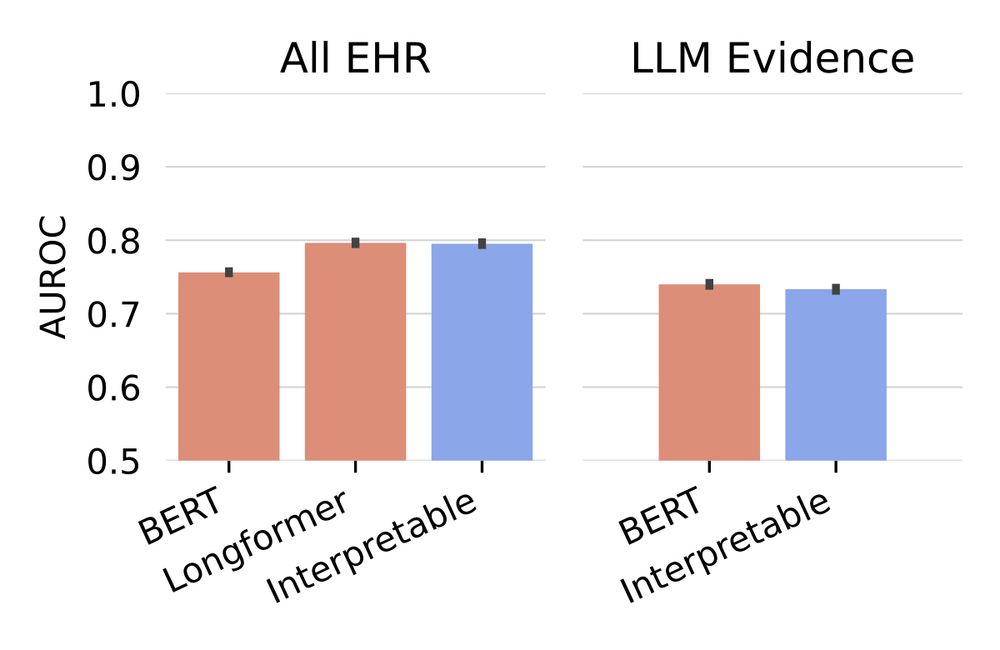

We have a doctor pose questions to an LLM, then train an interpretable model on the answers.

arxiv.org/abs/2302.12343

w/ @jwvdm.bsky.social and @byron.bsky.social
