Diego de las Casas

@dlsq.bsky.social

AI Scientist at Mistral AI.

Past: Google DeepMind.

🇧🇷 in 🇬🇧

Reposted by Diego de las Casas

Gotta wait until he double-crosses Indiana Jones to steal the Holy Grail, I'm afraid.

March 9, 2025 at 2:02 PM

check out more in our post:

mistral.ai/en/news/all-...

The all new le Chat: Your AI assistant for life and work | Mistral AI

Brand new features, iOS and Android apps, Pro, Team, and Enterprise tiers.

mistral.ai

February 6, 2025 at 6:08 PM

Have you tried our 8B model?

huggingface.co/mistralai/Mi...

mistralai/Ministral-8B-Instruct-2410 · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

January 31, 2025 at 1:46 PM

Mistral Small 3 is also available on many partner platforms:

- Ollama: ollama.com/library/mist...

- Kaggle: kaggle.com/models/mistr...

- Fireworks: fireworks.ai/models/firew...

- Together: together.ai/blog/mistral...

And many more soon!

mistral-small

Mistral Small 3 sets a new benchmark in the “small” Large Language Models category below 70B.

ollama.com

January 30, 2025 at 9:17 PM

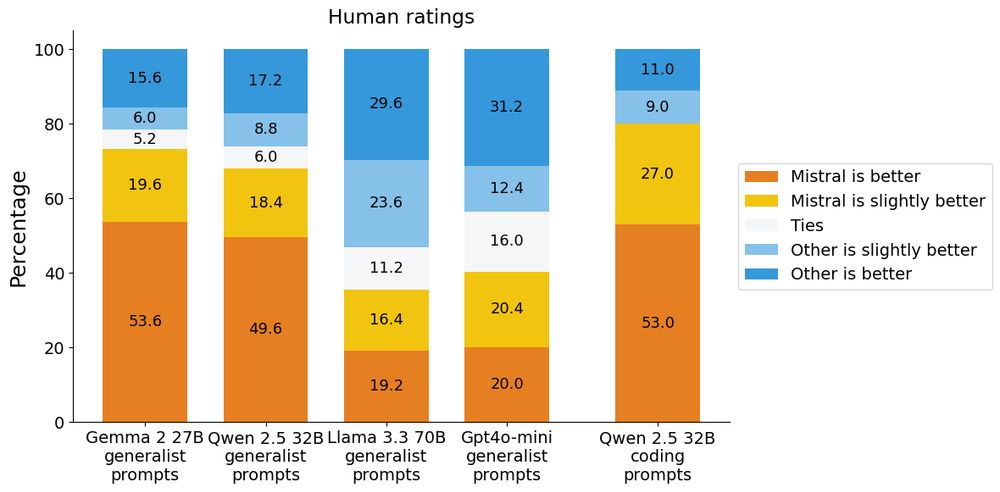

Performance of Mistral Small 3 Instruct model

huggingface.co/mistralai/Mi...

January 30, 2025 at 9:17 PM

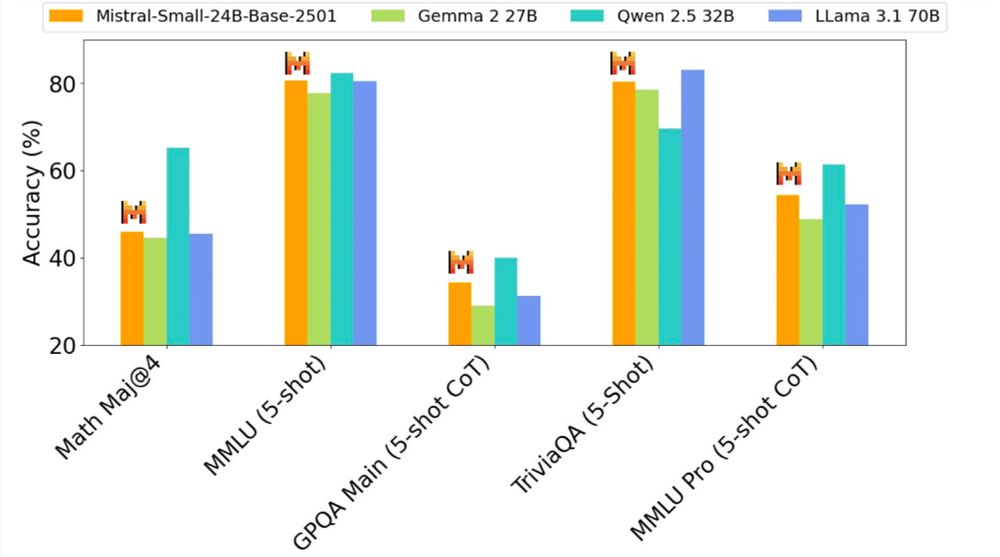

Mistral Small 3 Base model

huggingface.co/mistralai/Mi...

January 30, 2025 at 9:17 PM

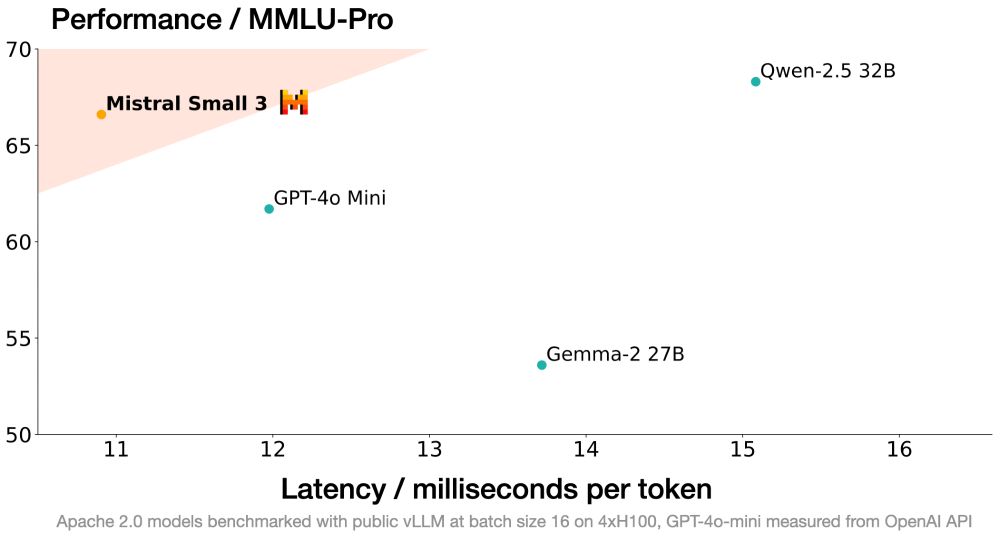

Mistral Small 3 architecture is optimised for latency while preserving high quality

January 30, 2025 at 9:17 PM

Reposted by Diego de las Casas

I know, but it's just an application of one of my favorite memes:

January 21, 2025 at 7:07 PM

Reposted by Diego de las Casas

In fact, statistical malpractice is the main driver of progress in machine learning. At some point, we need to come to terms with this.

November 22, 2024 at 2:40 PM

FSDP2 has a different policy for handling streams that is also worth a read:

github.com/pytorch/pyto...

[RFC] Per-Parameter-Sharding FSDP · Issue #114299 · pytorch/pytorch

Per-Parameter-Sharding FSDP Motivation As we looked toward next-generation training, we found limitations in our existing FSDP, mainly from the flat parameter construct. To address these, we propos...

github.com

November 23, 2024 at 10:49 AM

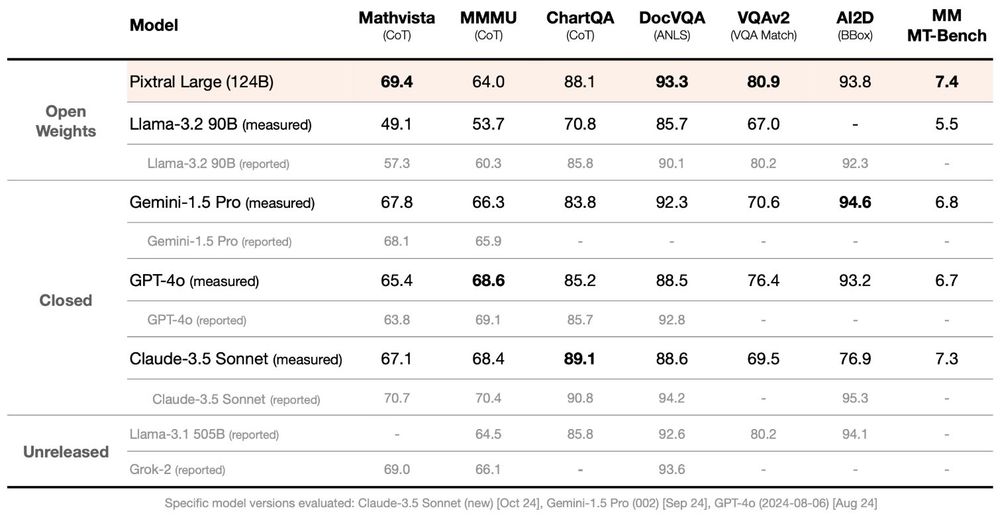

Pixtral Large:

- 123B decoder, 1B vision encoder, 128K sequence length

- Frontier multimodal model

- Maintains text performance of Mistral Large 2

HF weights: huggingface.co/mistralai/Pi...

Try it: chat.mistral.ai

Blog post: mistral.ai/news/pixtral...

November 18, 2024 at 5:57 PM
