TCRT5 is a rapid generator of target-conditioned CDR3b sequences. It leads the state of the art (SoTA) and yields the first AI-designed, self-tolerant binder to an out-of-distribution (OOD) non-viral epitope, with validation.

📑: www.nature.com/articles/s42...

🤗: huggingface.co/dkarthikeyan1

👨💻: github.com/pirl-unc/tcr_translate

>preferentially samples sequences with high biological pGen

>generates antigen-specific sequences to unseen epitopes

>generates sequences not seen during training
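The last claim, novelty, comes down to a set comparison between generated and training sequences. Below is a minimal sketch of how one might measure it, assuming both sets are plain amino-acid CDR3b strings. The function name and toy sequences are hypothetical illustrations, not taken from the TCRT5 codebase.

```python
def novelty_rate(generated, training):
    """Fraction of unique generated CDR3b sequences that never appear in training."""
    unique_generated = set(generated)  # count each distinct generated sequence once
    if not unique_generated:
        return 0.0
    novel = unique_generated - set(training)
    return len(novel) / len(unique_generated)


# Hypothetical toy sequences, for illustration only
training = ["CASSLGQAYEQYF", "CASSIRSSYEQYF"]
generated = ["CASSLGQAYEQYF", "CASSPGTGNTEAFF", "CASSPGTGNTEAFF"]
print(novelty_rate(generated, training))  # 0.5
```

Deduplicating before counting keeps a model that repeats one novel sequence many times from inflating the score.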

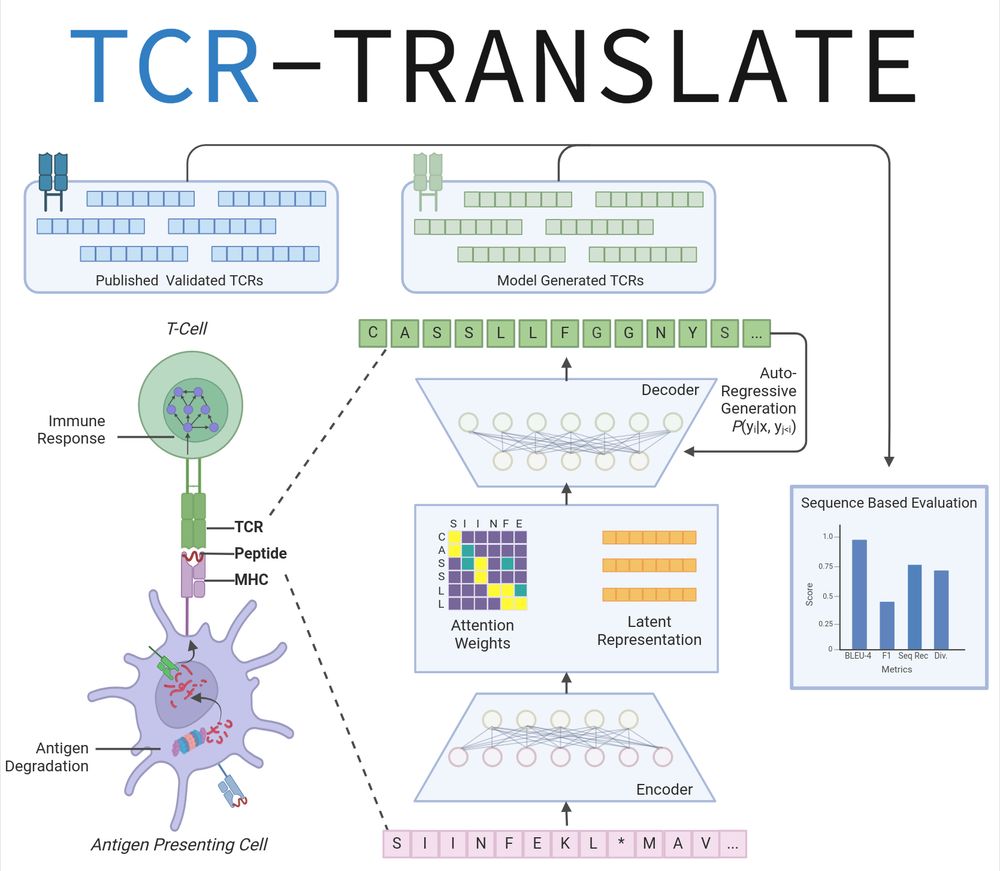

💻🧬TCR-TRANSLATE - A new framework for thinking about the TCR:pMHC specificity problem.

TLDR:

We pretrained LLMs on ~8M TCR & pMHC seqs

Finetuned on sparse pMHC->TCR pair data

Validated CDR3b sequences to unseen antigens

Performance far above random on IMMREP2023 "private" antigens
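Treating pMHC->TCR as translation means each pMHC acts as a source "sentence" that can map to many CDR3b targets. Evaluating on unseen antigens also requires that held-out epitopes never appear in training. Below is a minimal sketch of both steps, assuming records arrive as (peptide, HLA allele, CDR3b) tuples. The `peptide|allele` source encoding, the function names, and the toy records are hypothetical and not TCR-TRANSLATE's actual input format; the peptide/CDR3b pairings are illustrative, not binding data.

```python
from collections import defaultdict


def build_translation_pairs(records):
    """Group (peptide, allele, cdr3b) records into source -> list of target CDR3b."""
    pairs = defaultdict(list)
    for peptide, allele, cdr3b in records:
        source = f"{peptide}|{allele}"  # hypothetical source encoding
        pairs[source].append(cdr3b)  # one pMHC can map to many TCRs
    return dict(pairs)


def epitope_split(pairs, held_out_peptides):
    """Split so held-out peptides never appear in training (unseen epitopes)."""
    train, test = {}, {}
    for source, targets in pairs.items():
        peptide = source.split("|", 1)[0]
        (test if peptide in held_out_peptides else train)[source] = targets
    return train, test


# Hypothetical toy records, for illustration only
records = [
    ("GILGFVFTL", "HLA-A*02:01", "CASSIRSSYEQYF"),
    ("GILGFVFTL", "HLA-A*02:01", "CASSLGQAYEQYF"),
    ("NLVPMVATV", "HLA-A*02:01", "CASSPGTGNTEAFF"),
]
pairs = build_translation_pairs(records)
train, test = epitope_split(pairs, held_out_peptides={"NLVPMVATV"})
print(sorted(train), sorted(test))
```

Splitting by peptide rather than by individual pair prevents the same epitope from leaking into both sets, which is what makes "unseen antigens" a meaningful test.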
