@GaelVaroquaux: neural networks, tabular data, uncertainty, active learning, atomistic ML, learning theory.

https://dholzmueller.github.io

My solution is short (48 LOC) and relatively general-purpose – I used skrub to preprocess string and date columns, and pytabkit to create an ensemble of RealMLP and TabM models. Link below👇

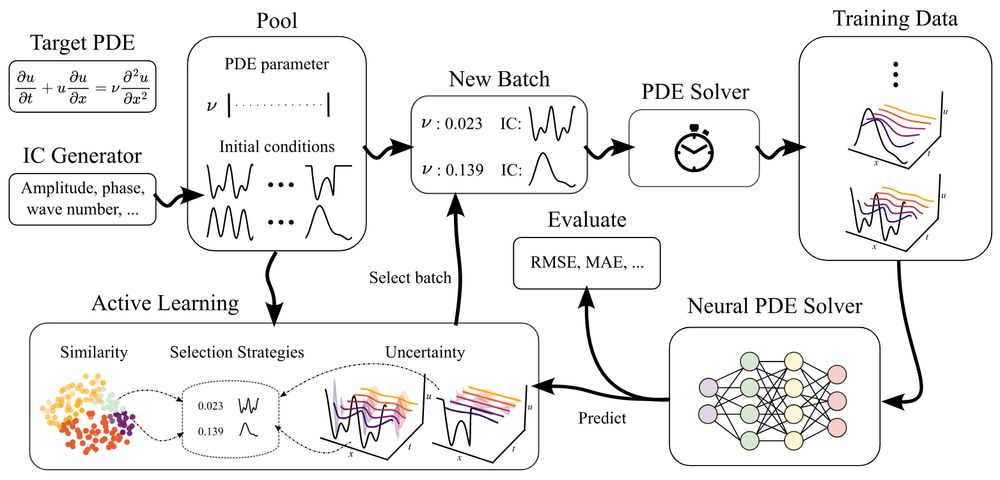

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures and many ablations - transferability, speed, etc.!

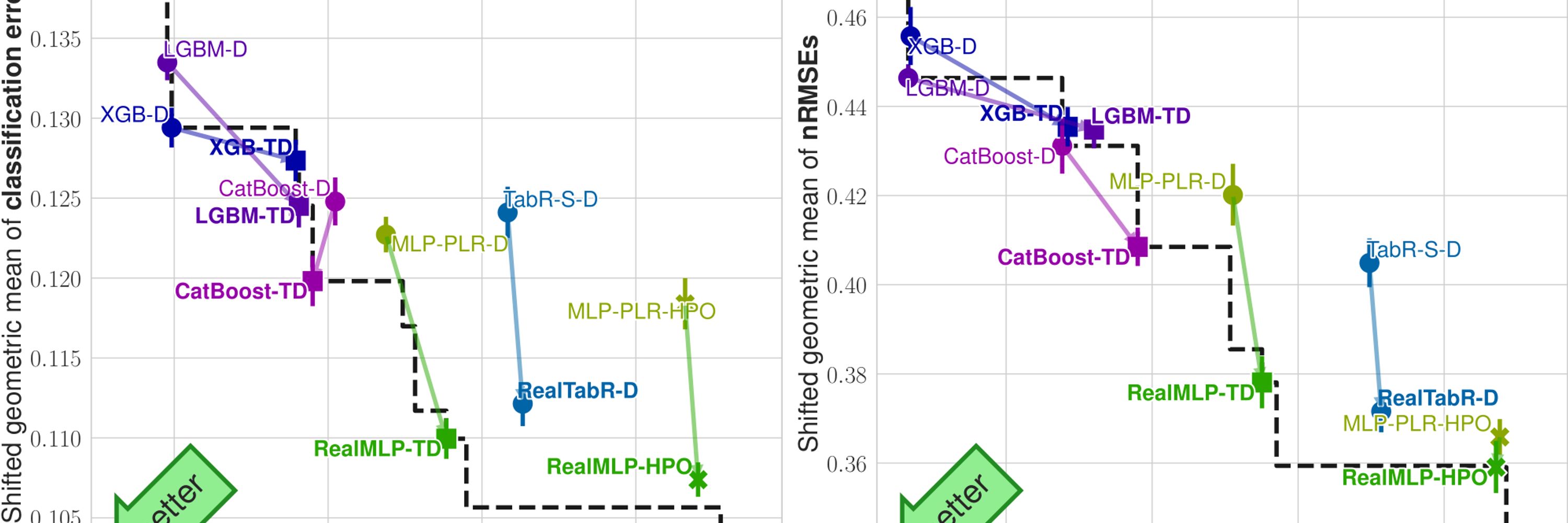

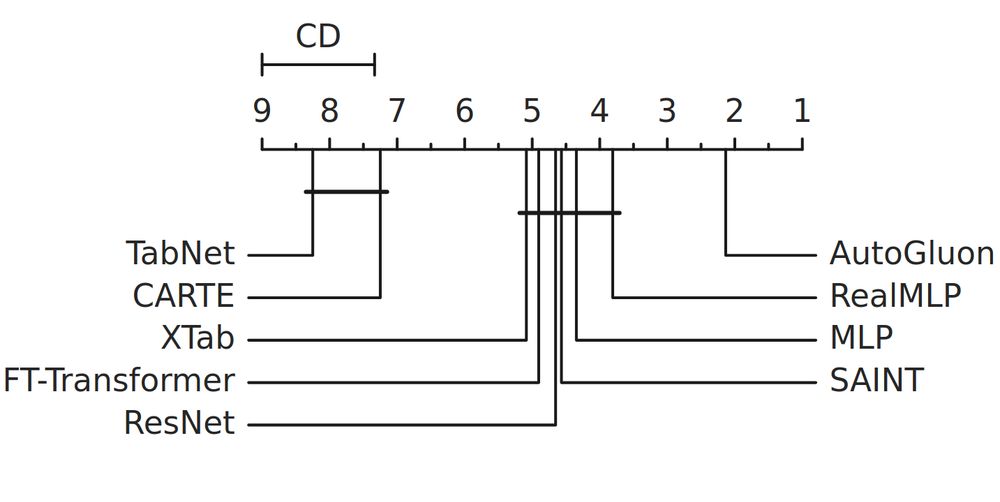

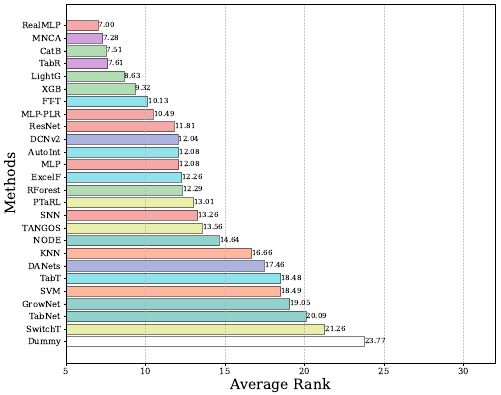

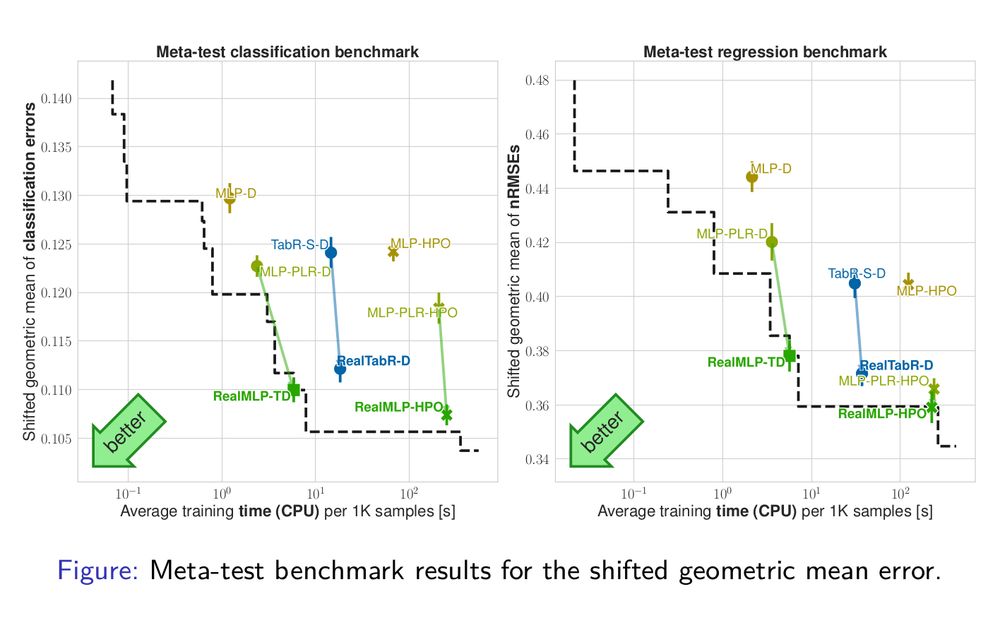

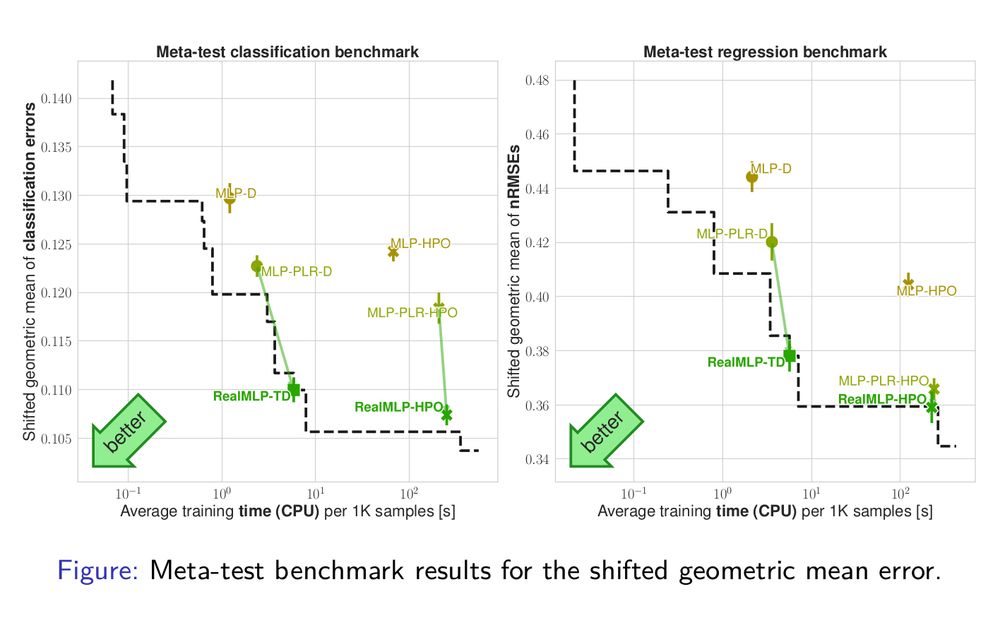

On a recent 300-dataset benchmark with many baselines, RealMLP takes a shared first place overall. 🔥

Importantly, RealMLP is also relatively CPU-friendly, unlike other SOTA DL models (including TabPFNv2 and TabM). 🧵 1/

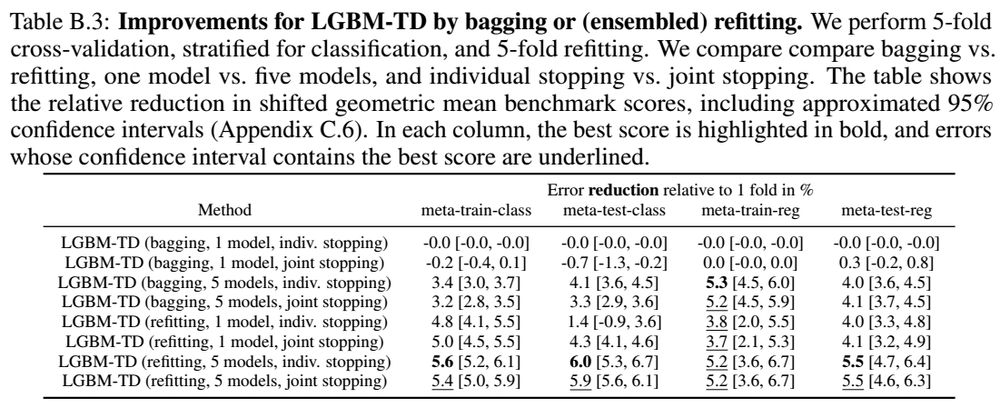

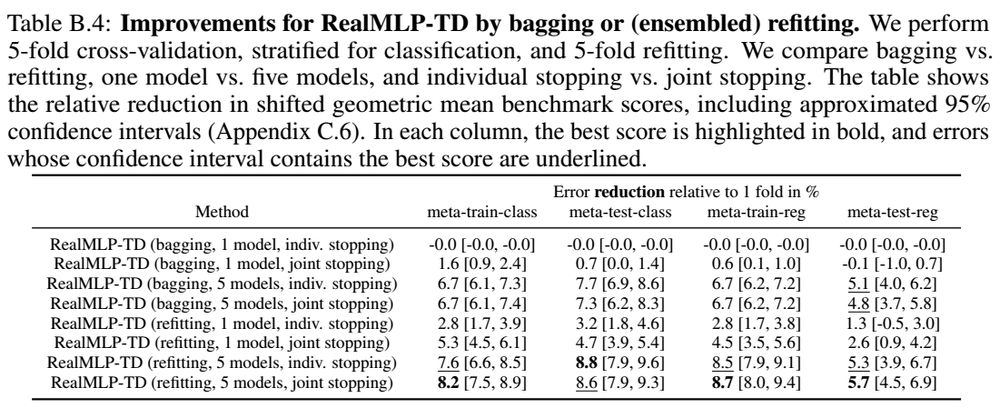

But: it’s slower, you don’t get validation scores for the refitted models, the result might change with more folds, and tuning the hyperparameters on the CV scores may favor bagging. 2/

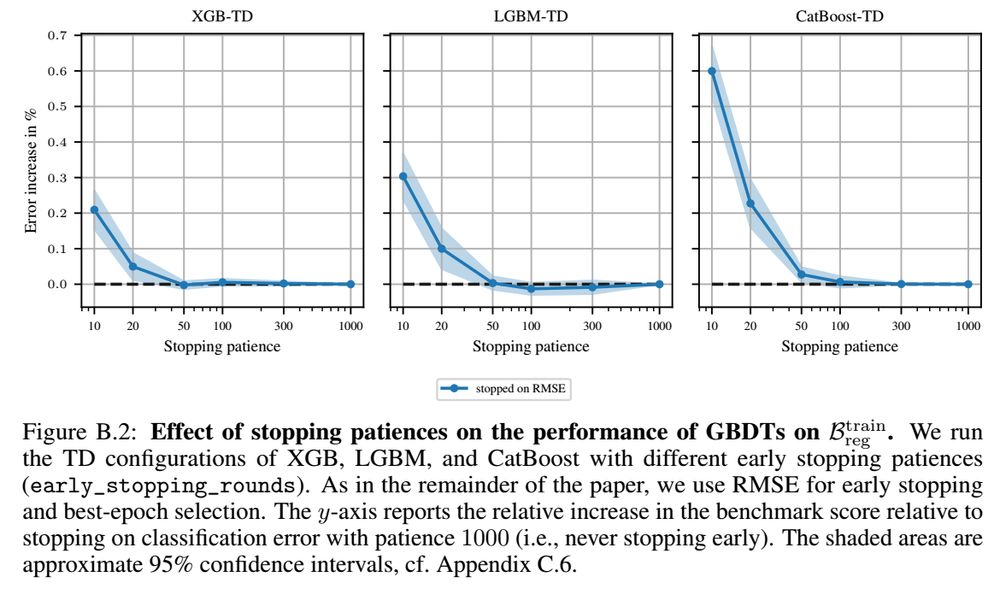

For regression with MSE we found little sensitivity to the patience. 3/

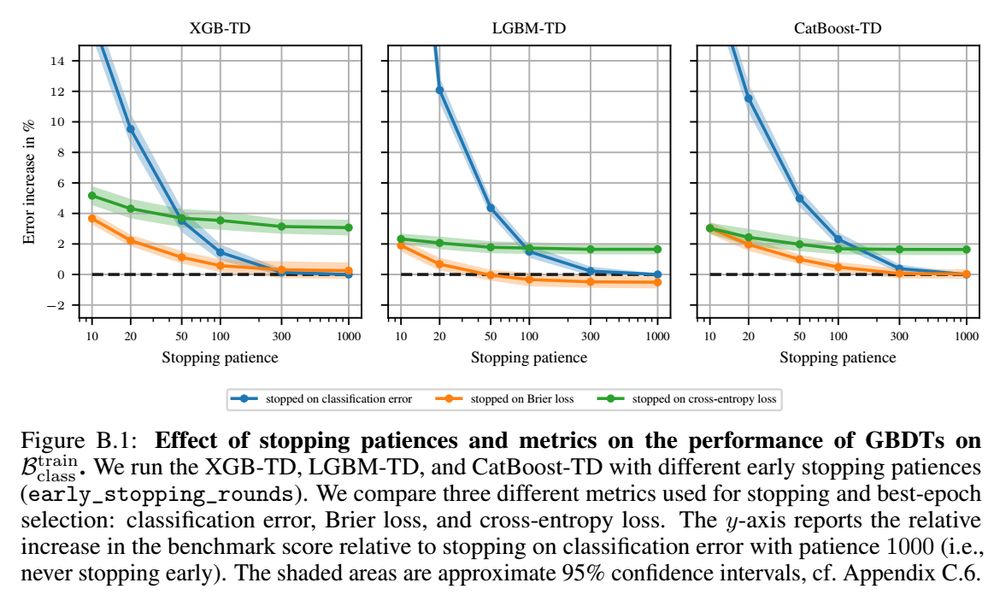

Brier loss yields similar test accuracy for high patience but is less sensitive to patience.

Cross-entropy (the train metric) is even less sensitive but not as good for test accuracy. 2/
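
To make the comparison concrete, here is a minimal sketch of the three validation metrics and a patience-based stopping rule. The function names and the toy loss history are made up for illustration and are not taken from the paper's code; the patience rule shown is one common variant, assumed here.

```python
import math


def classification_error(probs, labels):
    """Fraction of samples whose most probable class differs from the label."""
    wrong = sum(
        1 for p, y in zip(probs, labels)
        if max(range(len(p)), key=p.__getitem__) != y
    )
    return wrong / len(labels)


def brier_loss(probs, labels):
    """Mean squared distance between predicted probabilities and one-hot labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        total += sum((pk - (1.0 if k == y else 0.0)) ** 2 for k, pk in enumerate(p))
    return total / len(labels)


def cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-probability assigned to the true class."""
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probs, labels)) / len(labels)


def best_epoch_with_patience(val_losses, patience):
    """Index of the best epoch seen before stopping.

    Assumed rule: stop once `patience` epochs have passed without
    improving on the best validation loss so far.
    """
    best_idx, best = 0, float("inf")
    for i, loss in enumerate(val_losses):
        if loss < best:
            best, best_idx = loss, i
        elif i - best_idx >= patience:
            break
    return best_idx


# Toy validation curve (made-up numbers) with a temporary bump.
history = [0.5, 0.4, 0.45, 0.42, 0.38, 0.5]
early = best_epoch_with_patience(history, patience=2)
late = best_epoch_with_patience(history, patience=5)
```

On a bumpy validation curve like `history`, a small patience stops at an earlier and worse epoch than a large one. A noisier metric such as classification error tends to produce more such bumps than a smoother one such as Brier loss.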

It is more robust than a StandardScaler but preserves more distributional information than a QuantileTransformer. 9/
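
The transform itself is not spelled out in this post. As a rough sketch of one transform with these properties, the code below centers each column at its median, scales by the interquartile range, and then applies a smooth clipping function. The exact formula, the fallback for degenerate columns, and the clipping constant 3 are assumptions for illustration, not a statement of the library's implementation.

```python
import math
import statistics


def robust_scale_smooth_clip(values, clip=3.0):
    """Median/IQR scaling followed by a smooth clip to (-clip, clip).

    Sketch under assumptions: the fallback rules and the clip
    constant are illustrative choices.
    """
    med = statistics.median(values)
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    if iqr > 0:
        scale = 1.0 / iqr
    elif max(values) > min(values):
        # Quartiles coincide: fall back to the full range.
        scale = 2.0 / (max(values) - min(values))
    else:
        # Constant column: map everything to zero.
        scale = 0.0
    out = []
    for v in values:
        z = (v - med) * scale
        # Roughly the identity near zero, saturating at +/- clip for outliers.
        out.append(z / math.sqrt(1.0 + (z / clip) ** 2))
    return out


# One large outlier: it stays bounded, and the bulk keeps its relative spacing.
scaled = robust_scale_smooth_clip([1, 2, 3, 4, 100])
```

Because the median and quartiles ignore the outlier, the bulk of the column is scaled sensibly, which a mean/std scaler would not guarantee. Unlike a quantile transform, the spacing between typical values is kept rather than flattened to ranks.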

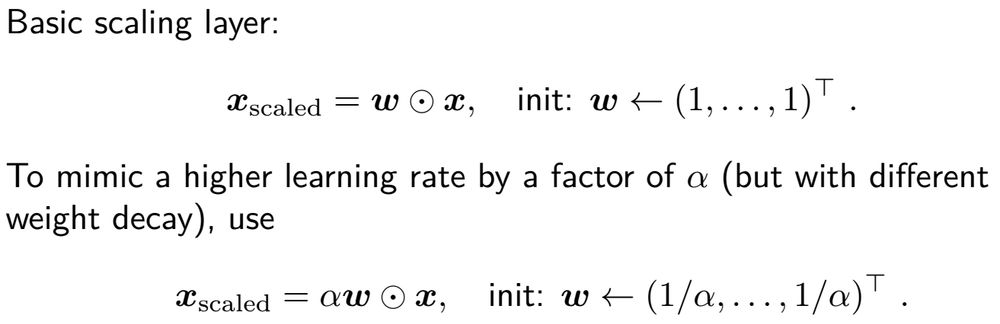

We add many things in different areas like architecture, preprocessing, training/hyperparameters, regularization, and initialization.

We provide multiple ablations, and I want to highlight some of the new things below. 8/

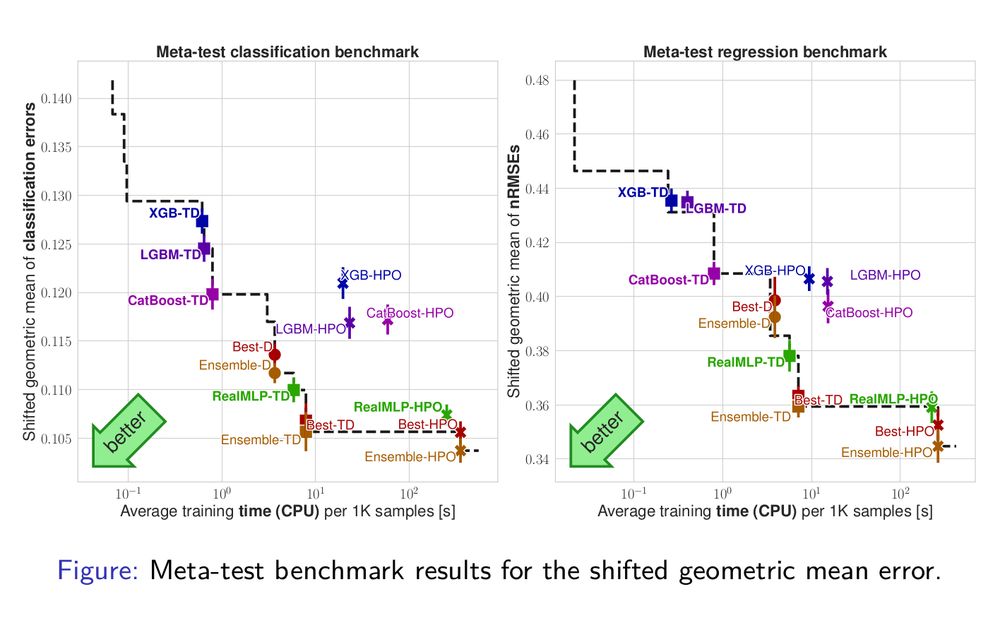

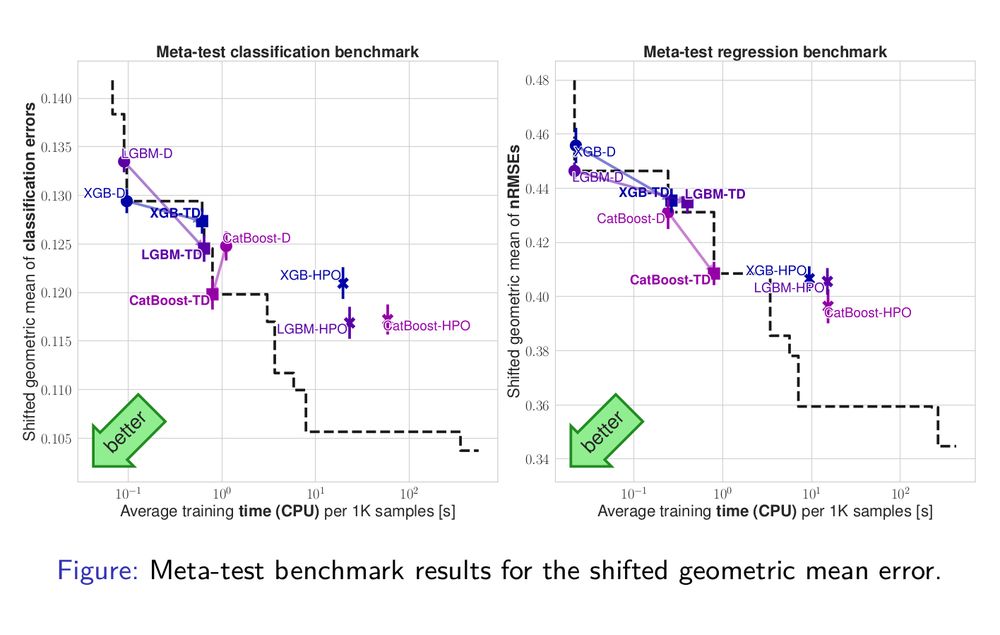

Taking the best TD model (Best-TD) on each dataset typically has a better time-accuracy trade-off than 50 steps of random search HPO. 7/
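
As a small illustration of the Best-TD idea, the sketch below picks, for each dataset, the model with the lowest validation error among a fixed set of models run with their tuned defaults. The model names, dataset names, and error values are hypothetical placeholders, and selecting by validation error is an assumption about how "best" is determined.

```python
def best_td(val_errors):
    """Name of the model with the lowest validation error on one dataset."""
    return min(val_errors, key=val_errors.get)


def best_td_per_dataset(val_errors_by_dataset):
    """Apply the per-dataset selection to every dataset."""
    return {name: best_td(errs) for name, errs in val_errors_by_dataset.items()}


# Hypothetical validation errors (made-up numbers) for three default-configured models.
val_errors_by_dataset = {
    "dataset_1": {"model_a": 0.21, "model_b": 0.19, "model_c": 0.25},
    "dataset_2": {"model_a": 0.08, "model_b": 0.11, "model_c": 0.09},
}
selection = best_td_per_dataset(val_errors_by_dataset)
```

This costs one training run per candidate model, compared with 50 runs for 50 steps of random search on a single model.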

The resulting RealTabR-D performs much better than the default parameters from the original paper. 5/

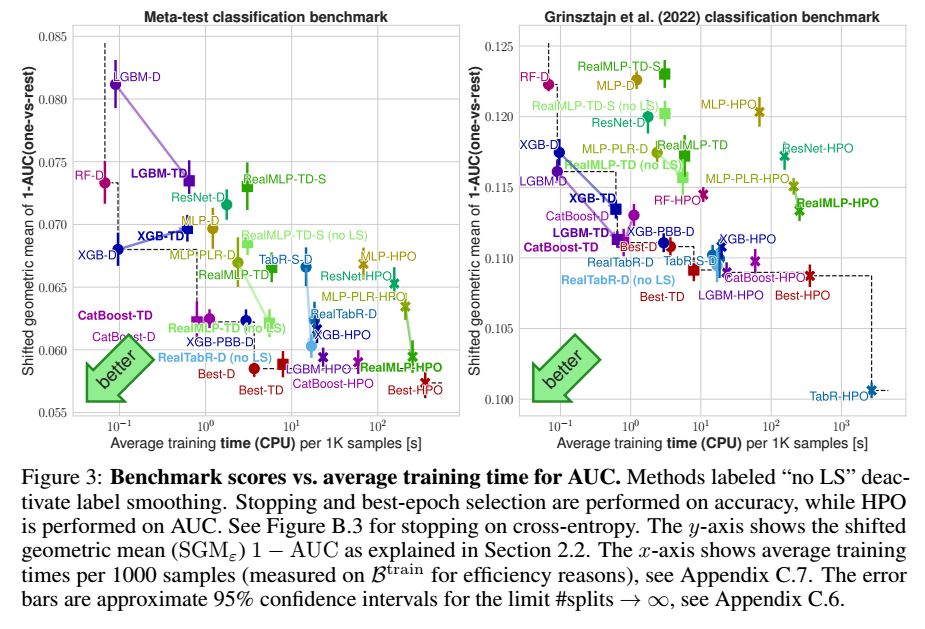

We tuned defaults and our “bag of tricks” only on meta-train. Still, RealMLP outperforms the MLP-PLR baseline with numerical embeddings on all benchmarks. 4/

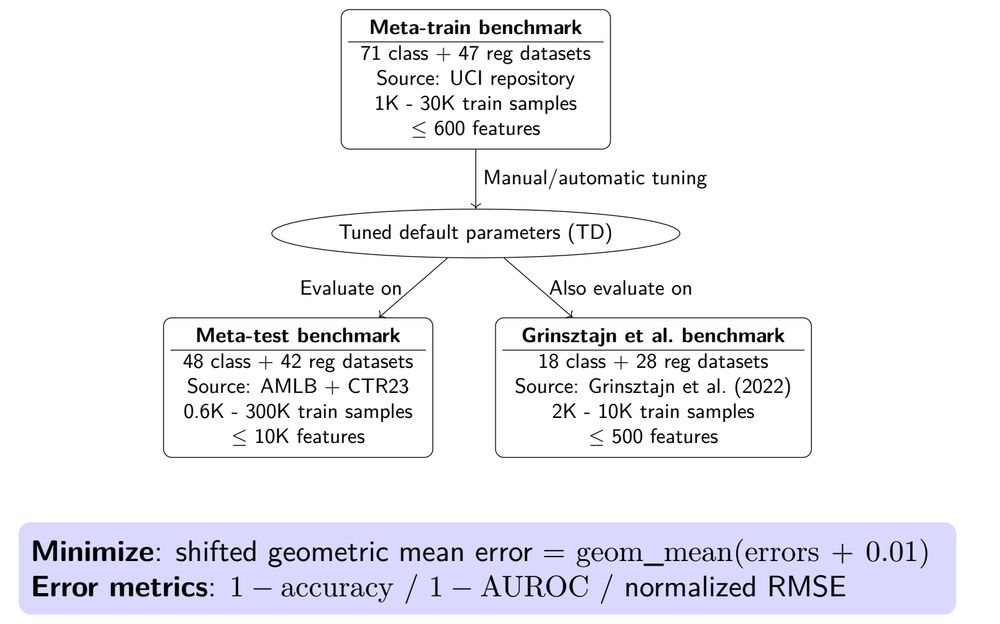

- a disjoint meta-test benchmark including large and high-dimensional datasets

- the smaller Grinsztajn et al. benchmark (with more baselines). 3/

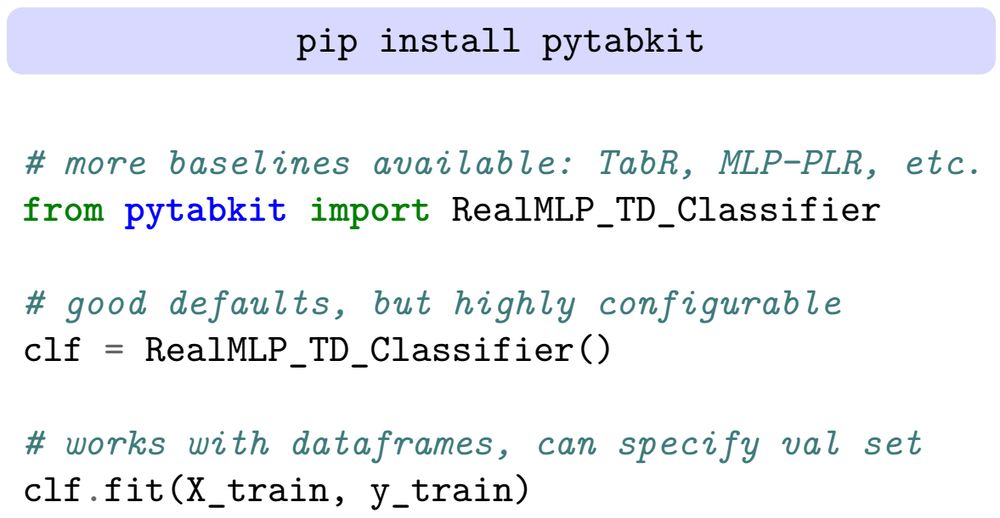

Paper: arxiv.org/abs/2407.04491

Code: github.com/dholzmueller...

Our library is pip-installable and contains easy-to-use and configurable scikit-learn interfaces (including baselines). 2/

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵
