Feel free to reach out with any questions ✌️

Thanks to all my co-authors! @prshootana.bsky.social @camuljak.bsky.social @chatruncata.bsky.social @mtutek.bsky.social

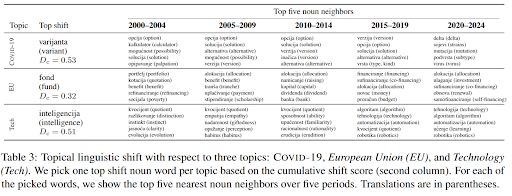

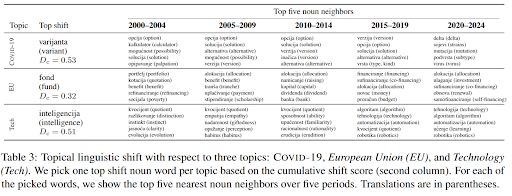

Semantic change is measured using the cumulative cosine distance between embeddings in neighboring periods.
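
For anyone who wants to try this, here is a minimal sketch of that measure. It assumes one embedding per period for a given word, ordered by time and already living in a shared vector space, and it takes cosine distance to be 1 minus cosine similarity. The function names `cosine_distance` and `cumulative_semantic_change` are made up for illustration and are not taken from our code.

```python
import math


def cosine_distance(u, v):
    """Cosine distance, taken here as 1 - cosine similarity (an assumption)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_u * norm_v)


def cumulative_semantic_change(period_embeddings):
    """Sum the cosine distances between embeddings of neighboring periods.

    period_embeddings: list of vectors, one per period, in chronological order.
    """
    neighbors = zip(period_embeddings, period_embeddings[1:])
    return sum(cosine_distance(a, b) for a, b in neighbors)


# Toy example: the word shifts between periods 1 and 2, then stays put.
toy = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
total_change = cumulative_semantic_change(toy)
```

Summing over neighboring periods, rather than comparing only the first and last period, means a word that drifts away and later drifts back still registers as having changed.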
