Computational Neuroscience PhD.

Was trying to understand the brain to help build AI, but it appears that's no longer necessary.

github: https://github.com/SelfishGene

It summarizes my thoughts about what is good now and what soon could be even better

open.substack.com/pub/davidben...

We propose a simple, perceptron-like neuron model, the calcitron, that has four sources of [Ca2+]... We demonstrate that by modulating the plasticity thresholds and calcium influx from each calcium source, we can reproduce a wide range of learning and plasticity protocols.
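
To make the quoted idea concrete, here is a rough Python sketch of a perceptron-like unit whose synaptic updates are gated by calcium thresholds. It is only an illustration under stated assumptions, not the paper's implementation: the names of the four sources (local, heterosynaptic, postsynaptic spike, supervisor), the coefficients `alpha`, `beta`, `gamma`, `delta`, the fixed-step update, and every numeric value are hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CalcitronSketch:
    # All field names and defaults are assumptions made for illustration.
    alpha: float        # calcium from the synapse's own presynaptic input
    beta: float         # calcium from activity at the other synapses
    gamma: float        # calcium from the neuron's own output spike
    delta: float        # calcium from an external supervisory signal
    theta_d: float      # calcium level at which depression starts
    theta_p: float      # calcium level at which potentiation starts
    bias: float = 0.5   # firing threshold of the perceptron-like unit
    eta: float = 0.1    # fixed weight step (a simplification)

    def output(self, w: List[float], x: List[int]) -> int:
        # Perceptron-style output: spike if the weighted sum exceeds the bias.
        return 1 if sum(wi * xi for wi, xi in zip(w, x)) > self.bias else 0

    def calcium(self, w: List[float], x: List[int], supervisor: int = 0) -> List[float]:
        # Per-synapse calcium is the sum of the four assumed sources.
        y = self.output(w, x)
        shared = self.beta * sum(x) + self.gamma * y + self.delta * supervisor
        return [self.alpha * xi + shared for xi in x]

    def update(self, w: List[float], x: List[int], supervisor: int = 0) -> List[float]:
        # Below theta_d: no change. Between thresholds: depress. At or above theta_p: potentiate.
        new_w = []
        for wi, ca in zip(w, self.calcium(w, x, supervisor)):
            if ca >= self.theta_p:
                wi += self.eta
            elif ca >= self.theta_d:
                wi -= self.eta
            new_w.append(wi)
        return new_w


# Hypothetical setting: local input plus a postsynaptic spike crosses theta_p,
# while a spike alone only reaches the depression range.
hebbian_like = CalcitronSketch(alpha=0.4, beta=0.0, gamma=0.7, delta=0.0,
                               theta_d=0.5, theta_p=1.0)
spiking = hebbian_like.update([1.0, 1.0], [1, 0])   # neuron fires
silent = hebbian_like.update([0.1, 0.1], [1, 0])    # neuron stays silent
```

With these made-up coefficients, the active synapse is strengthened and the inactive one weakened when the neuron fires, and nothing changes when it stays silent. Changing the coefficients and thresholds changes which rule the same update loop produces, which is the kind of modulation the quote describes.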

This would be a "vote with their feet" way to tell whether the "grad student Turing test" has been passed in practice

Mainly due to language models maturing into research assistants that, from an advisor's point of view, are simply better than an average PhD student

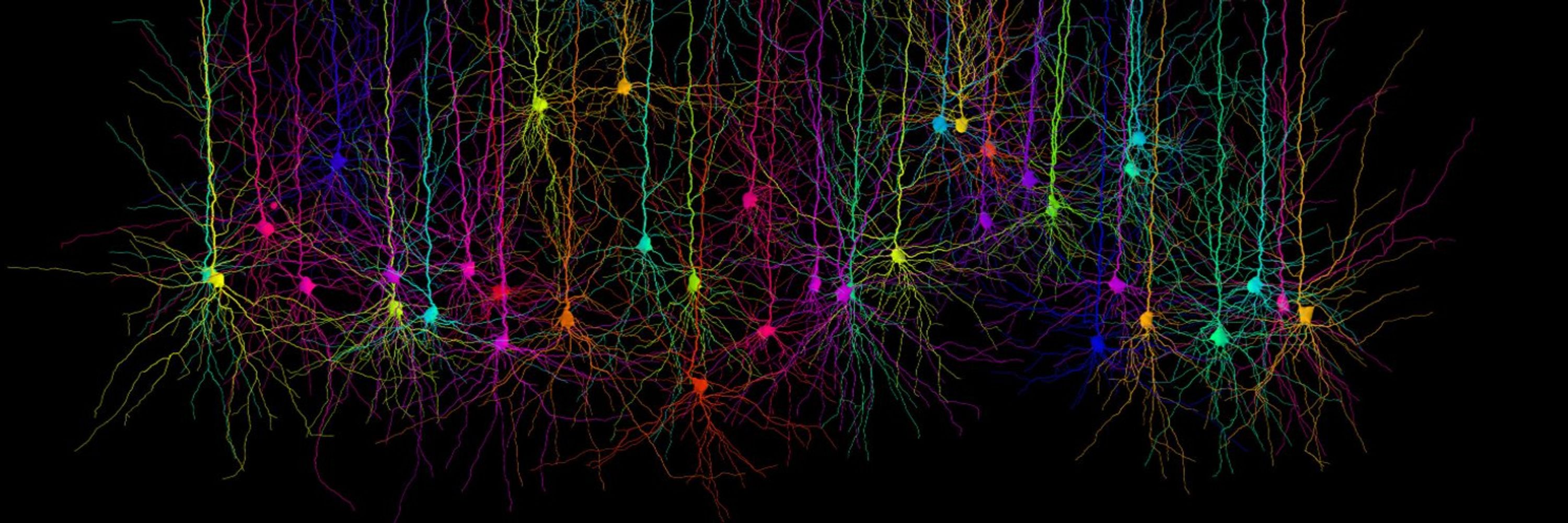

What makes human cortical neurons have such a complex I/O function as compared to rat neurons?

It turns out it's not just about their size

All details in the thread by Ido

bioRxiv: www.biorxiv.org/content/10.1...

Infinitely hardworking,

infinitely knowledgeable,

exceptionally technically competent,

instantly reads everything you send it and immediately starts working on it

Scientists have often accumulated decades of insights but have always lacked the grant funding and the enthusiastic, capable, hardworking students needed to pursue them. Those insights will soon be unlocked

It felt magical

Weirdly, ideas have increased in complexity, and these days they somehow always feel at least 1-3 months of hard work away

Is the source code for the 'discover' or 'following' feeds available somewhere? Is it tinkerable/tunable?

How do these things work here?

Worst changes:

1) suppression of link sharing

2) tiktokification of news feed (engagement over substance)

I have two posts there. It was not really possible to share them on Twitter, so maybe here?

Titles:

1) Why I can no longer pursue a career in academia (Sep 2024)

2) Obvious next steps in AI research (Sep 2023)

davidbeniaguev.substack.com
