University of Cambridge

https://sdq.github.io

👉 sdq.github.io/DxHF

Furui Tino

@oulasvirta.bsky.social @elassady.bsky.social

🔗 typoist.github.io

👨‍💻 Danqing Shi @danqingshi.bsky.social, Yujun Zhu, Francisco Erivaldo Fernandes Junior, Shumin Zhai, and Antti Oulasvirta @oulasvirta.bsky.social

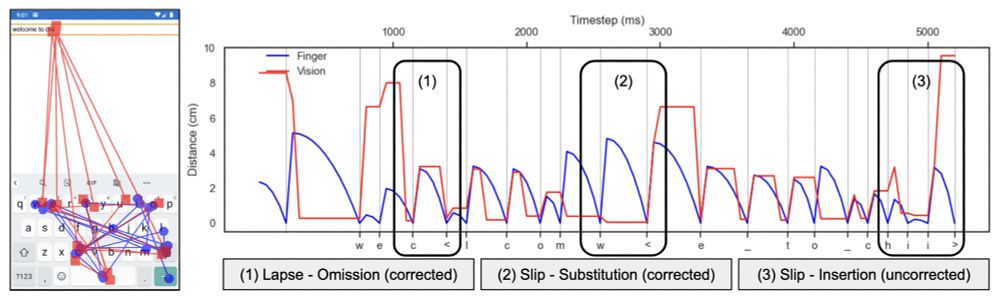

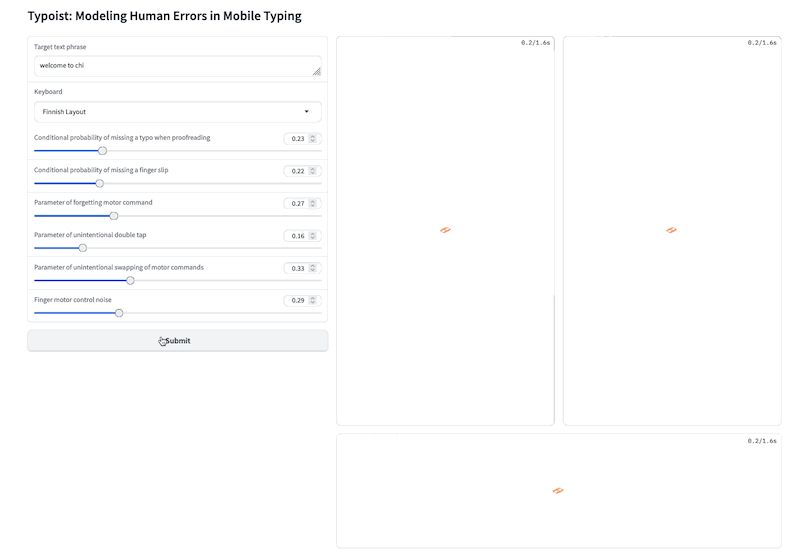

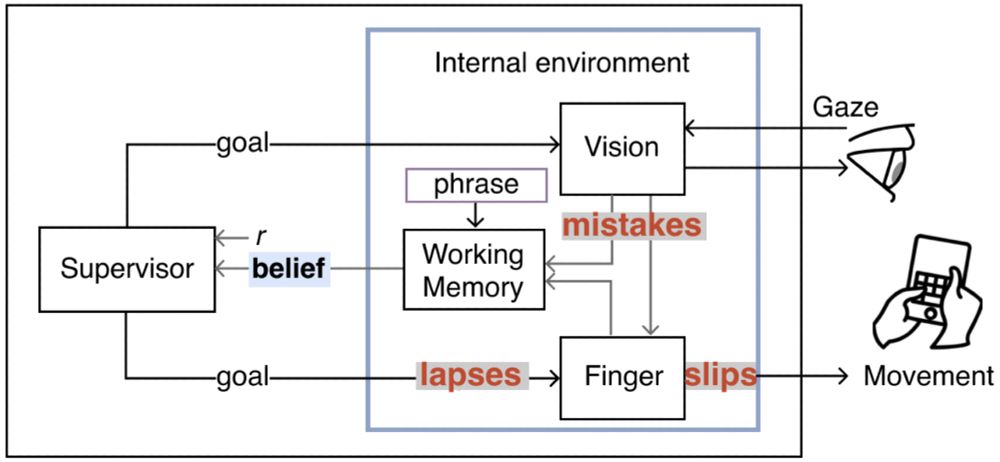

Typoist extends the computational rationality framework (crtypist.github.io) for touchscreen typing. It simulates eye & finger movements and predicts how users detect & correct errors.

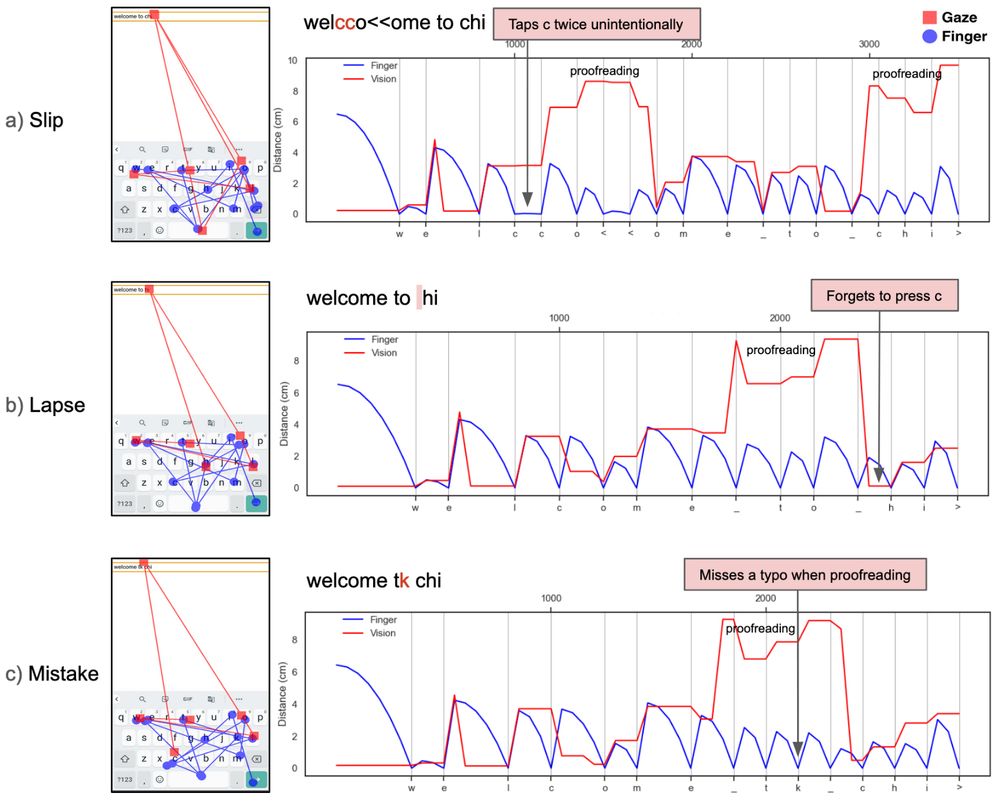

🔹Slips - motor execution deviates from the intended outcome;

🔹Lapses - memory failures;

🔹Mistakes - incorrect or partial knowledge.

Typoist captures them all!
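To make the three error types concrete, here is a toy Python sketch. It is not Typoist's code or method: the neighbour map, the function names, and the example strings are all hypothetical, chosen only to show how a slip, a lapse, and a mistake differ in where the error comes from.

```python
import random

# Hypothetical neighbour map for a few QWERTY keys (illustration only,
# not taken from Typoist).
NEIGHBOURS = {"a": "sq", "e": "wr", "o": "ip", "t": "ry", "h": "gj"}


def slip(text, i, rng):
    """Slip: the finger lands on a key next to the intended one."""
    options = NEIGHBOURS.get(text[i])
    if not options:
        return text  # no neighbour known for this key, leave text unchanged
    return text[:i] + rng.choice(options) + text[i + 1:]


def lapse(text, i):
    """Lapse: the typist forgets a character and leaves it out."""
    return text[:i] + text[i + 1:]


def mistake(text, wrong_spellings):
    """Mistake: the typist believes a wrong spelling is the right one."""
    return " ".join(wrong_spellings.get(word, word) for word in text.split(" "))


rng = random.Random(0)  # seeded so the slip is reproducible
print(slip("the cat", 0, rng))
print(lapse("the cat", 1))
print(mistake("the cat", {"cat": "kat"}))
```

In this sketch the slip changes what the finger does, the lapse drops something the typist meant to type, and the mistake faithfully types the wrong plan.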

Our #CHI2025 paper introduces Typoist, a computational model that simulates human errors across perception, motor, and memory. 📄 arxiv.org/abs/2502.03560

🔗 chart-reading.github.io

👨‍💻 Danqing Shi @danqingshi.bsky.social, Yao Wang, Yunpeng Bai, Andreas Bulling, and Antti Oulasvirta @oulasvirta.bsky.social

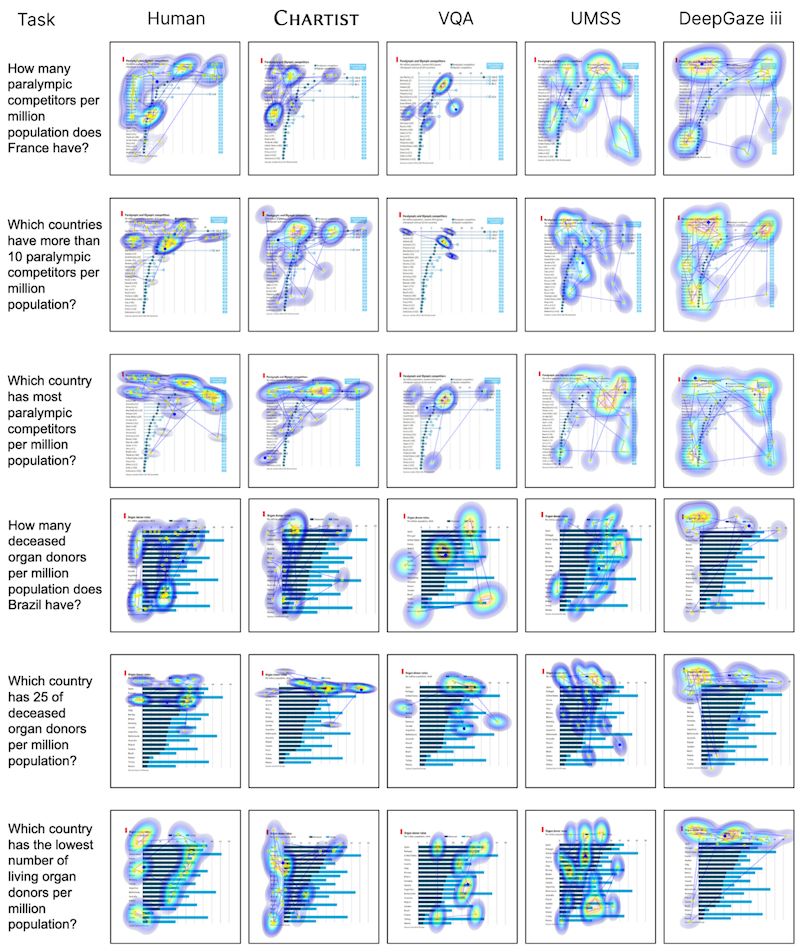

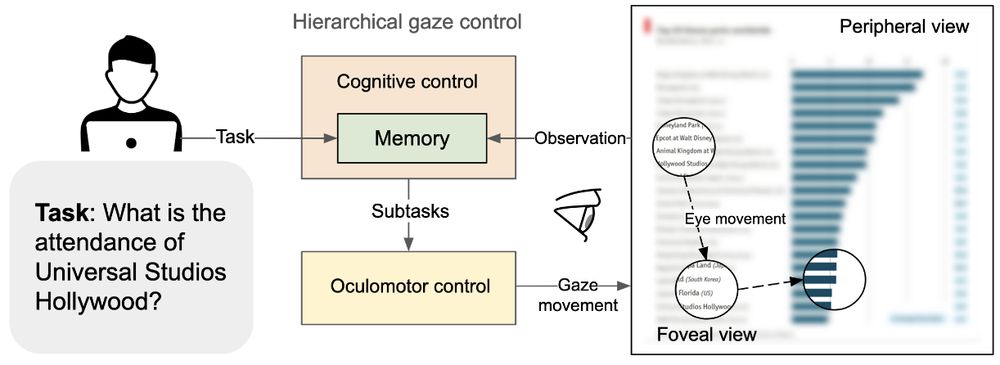

Chartist uses a hierarchical gaze control model with:

🔹A cognitive controller (powered by LLMs) to reason about the task-solving process;

🔹An oculomotor controller (trained via reinforcement learning) to simulate detailed gaze movements.
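The two-level structure can be sketched as a simple control loop. This is a minimal illustration, not Chartist's implementation: the LLM is replaced by a fixed rule, the learned policy by a capped straight-line move, and every name here (`Region`, `cognitive_controller`, `oculomotor_controller`, `simulate_scanpath`, `max_step`) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """A chart element the eyes can be sent to (hypothetical type)."""
    name: str
    x: float
    y: float


def cognitive_controller(task, regions, visited):
    """High level: pick the next region to inspect.

    Stand-in for LLM-based reasoning. This stub ignores `task` and simply
    returns the first region that has not been visited yet.
    """
    for region in regions:
        if region.name not in visited:
            return region
    return None  # nothing left to inspect


def oculomotor_controller(gaze, target, max_step=100.0):
    """Low level: emit fixations that move the gaze onto the target.

    Stand-in for an RL policy. This stub moves in a straight line,
    at most `max_step` units per fixation.
    """
    fixations = []
    x, y = gaze
    while (x, y) != (target.x, target.y):
        dx, dy = target.x - x, target.y - y
        dist = (dx * dx + dy * dy) ** 0.5
        if dist <= max_step:
            x, y = target.x, target.y  # close enough, land on the target
        else:
            x += dx / dist * max_step
            y += dy / dist * max_step
        fixations.append((x, y))
    return fixations


def simulate_scanpath(task, regions, start=(0.0, 0.0)):
    """Alternate between choosing a subgoal and moving the eyes to it."""
    gaze, visited, scanpath = start, set(), []
    while True:
        subgoal = cognitive_controller(task, regions, visited)
        if subgoal is None:
            return scanpath
        steps = oculomotor_controller(gaze, subgoal)
        scanpath.extend(steps)
        if steps:
            gaze = steps[-1]
        visited.add(subgoal.name)


regions = [Region("title", 300, 0), Region("y-axis", 300, 400)]
for fixation in simulate_scanpath("find the maximum", regions):
    print(fixation)
```

The point of the split is that the high level decides where to look next and why, while the low level decides how the eyes get there.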

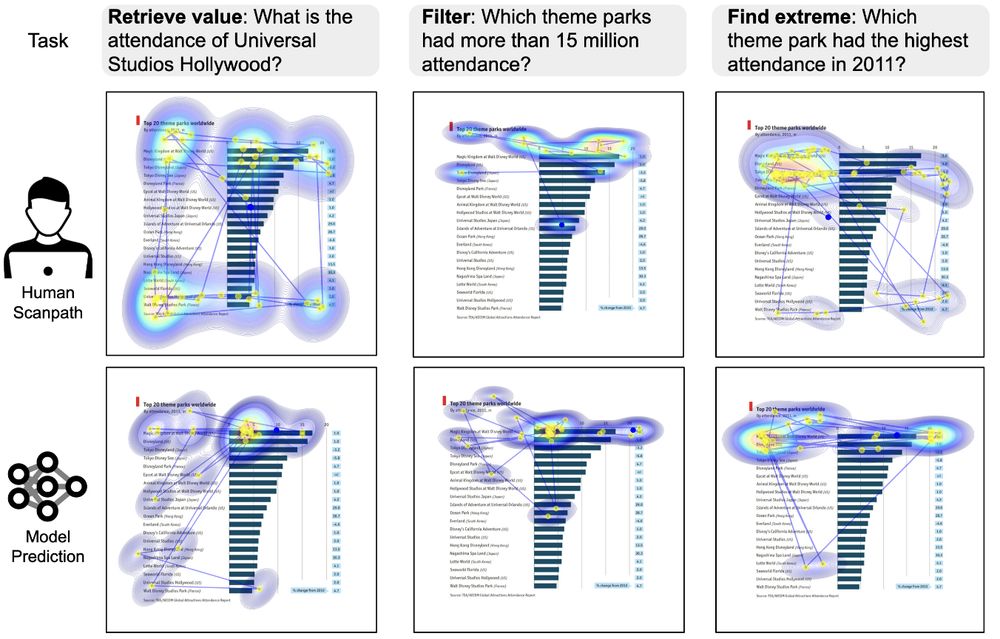

🧐 Want to find a specific value?

🔍 Need to filter relevant data points?

📈 Looking for extreme values?

Chartist predicts human-like eye movement, simulating how people move their gaze to address these tasks.

Our #CHI2025 paper introduces Chartist, the first model designed to simulate these task-driven eye movements. 📄 arxiv.org/abs/2502.03575
