Danny Wilf-Townsend

@dannywt.bsky.social

Associate Professor of Law at Georgetown Law, thinking, writing, and teaching about civil procedure, consumer protection, and AI.

Blog: https://www.wilftownsend.net/

Academic papers: https://papers.ssrn.com/sol3/cf_dev/AbsByAuth.cfm?per_id=2491047

Oh, also, from the parochial law professor standpoint (i.e., the most important standpoint) it makes "looking for hallucinations" a less reliable form of trying to monitor student AI use on exams or papers.

October 3, 2025 at 2:52 PM

...has gone up. Certainly not to the point where I would recommend relying on AI for legal advice (or to write your briefs), but the size of the change does seem notable for at least those (and probably other) reasons.

October 3, 2025 at 2:51 PM

...a few thoughts: (1) for practitioners using AI, I would think that fewer hallucinations makes it faster and cheaper to review/check/edit AI-generated outputs. And (2) for non-experts using AI, who aren't editing but just reading (or even relying on) answers, the quality of those answers...

October 3, 2025 at 2:51 PM

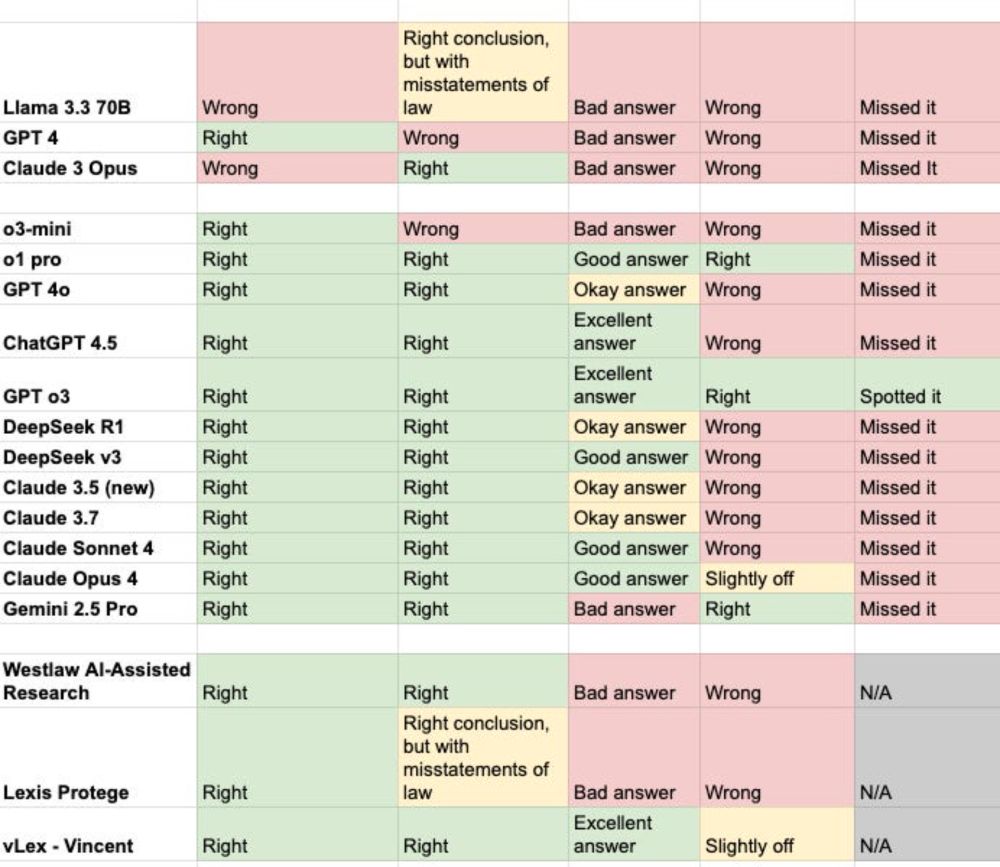

I wouldn't draw a big conclusion specifically from this exercise; but it is consistent with my experience that hallucinations in answering legal questions seem way down in general now compared to, e.g., a year ago. In terms of the implications of that broader fact (if it is a fact)...

October 3, 2025 at 2:51 PM

Overall, GPT-5-Pro was good enough to use for my (informal) approach here—it was both internally consistent and looked good in accuracy spot checks. Its grades show some models scoring in the A- to A range, consistent with what others have found, too.

October 1, 2025 at 1:58 PM

It turns out that some models are deeply inaccurate, and some are frequently inconsistent, but a few are reasonably consistent and accurate. And along the way, I learned that human graders are sometimes less consistent than we might hope.

October 1, 2025 at 1:58 PM

The goal here wasn't to use them to grade student work—something I would not recommend. It's instead to see if they can be used to automate the evaluation process of other language models: can we use LLMs to get a sense of different models' relative capacities on legal questions?

October 1, 2025 at 1:58 PM

The review of "The Deletion Remedy" also discusses Christina Lee's "Beyond Algorithmic Disgorgement," papers.ssrn.com/sol3/papers..... Christina is on the job market this year, and if I were on a hiring committee I would definitely be taking a look.

Beyond Algorithmic Disgorgement: Remedying Algorithmic Harms

AI regulations are popping up around the world, and they mostly involve ex-ante risk assessment and mitigating those risks. But even with careful risk assessmen

papers.ssrn.com

September 29, 2025 at 1:50 PM

Indicting based on sandwich type could lead to quite a pickle. Let's hope this jury's not on a roll.

August 28, 2025 at 1:57 PM

Me too! They must be targeting proceduralists. Probably due to our lax morals.

August 22, 2025 at 1:30 PM

And thank you to @wertwhile.bsky.social for the shoutout and discussion of my work!

July 17, 2025 at 5:02 PM

And I completely agree with what @wertwhile.bsky.social and @weisenthal.bsky.social say about OpenAI's o3 being the model to focus on—lots of people are forming impressions about AI capabilities based on older or less powerful tools, and aren't seeing the current level of capabilities as a result.

July 17, 2025 at 5:02 PM

Finally, the work of mine that is discussed a bit is this informal testing of AI models on legal questions. The most recent post is here: www.wilftownsend.net/p/testing-ge...

Testing generative AI on legal questions—May 2025 update

The latest round of my informal testing

www.wilftownsend.net

July 17, 2025 at 5:02 PM

On the issue of AI increasing numbers of lawsuits and a "Jevons paradox" for litigation, I would recommend @arbel.bsky.social's work here: papers.ssrn.com/sol3/papers....

and Henry Thompson has some interesting thoughts about these dynamics as well: henryathompson.com/s/Thompson-A...

Judicial Economy in the Age of AI

Individuals do not vindicate the majority of their legal claims because of access to justice barriers. This entrenched state of affairs is now facing a disrupti

papers.ssrn.com

July 17, 2025 at 5:02 PM

First, on using AI to predict damages / outcomes, check out work by David Freeman Engstrom and @gelbach.bsky.social, which discusses some areas where this is happening (ctrl+f for "Walmart" to find relevant sections):

scholarship.law.upenn.edu/penn_law_rev...

scholarship.law.upenn.edu/jcl/vol23/is...

Legal Tech, Civil Procedure, and the Future of Adversarialism

By David Freeman Engstrom and Jonah B. Gelbach, Published on 01/01/21

scholarship.law.upenn.edu

July 17, 2025 at 5:02 PM

What an interesting question — cool study.

June 26, 2025 at 1:58 PM