Adjunct lecturer @ Australian Institute for ML. @aimlofficial.bsky.social

Occasionally cycling across continents.

https://www.damienteney.info

- ReLUs: smooth functions

- Learned activations: sharp, axis-aligned decision boundaries, very similar to trees.

Optimizing the activation function doesn't make much difference. But when initialized from a ReLU, we rediscover smooth variants similar to GeLUs! Clearly a local optimum that researchers homed in on by trial and error over the years!

Do We Always Need the Simplicity Bias?

We take another step to understand why/when neural nets generalize so well. ⬇️🧵

Any advice to get more ML and fewer bunnies/politics in my Bluesky feed?

arxiv.org/abs/2403.02241

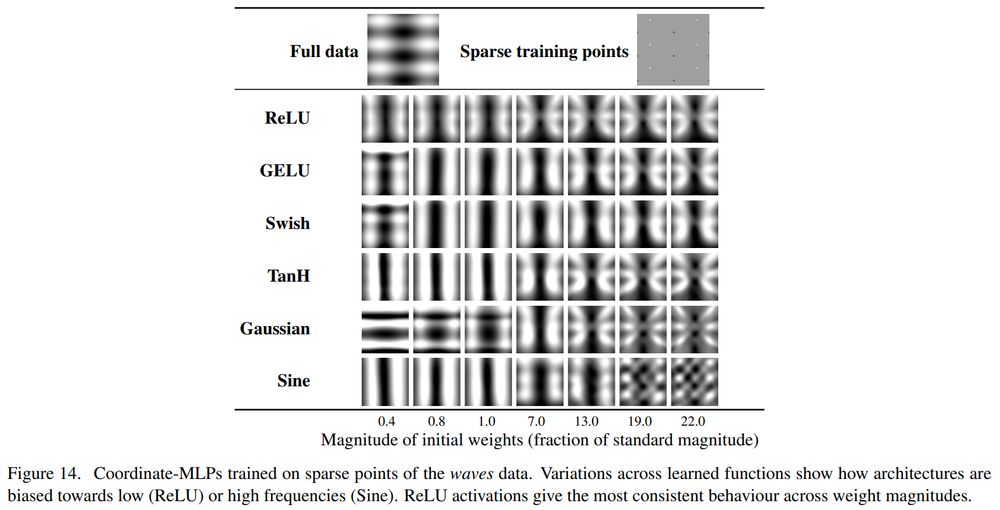

"Neural Redshift: Random Networks are not Random Functions"

(Fig. 14 from the appendix ⬇️)
