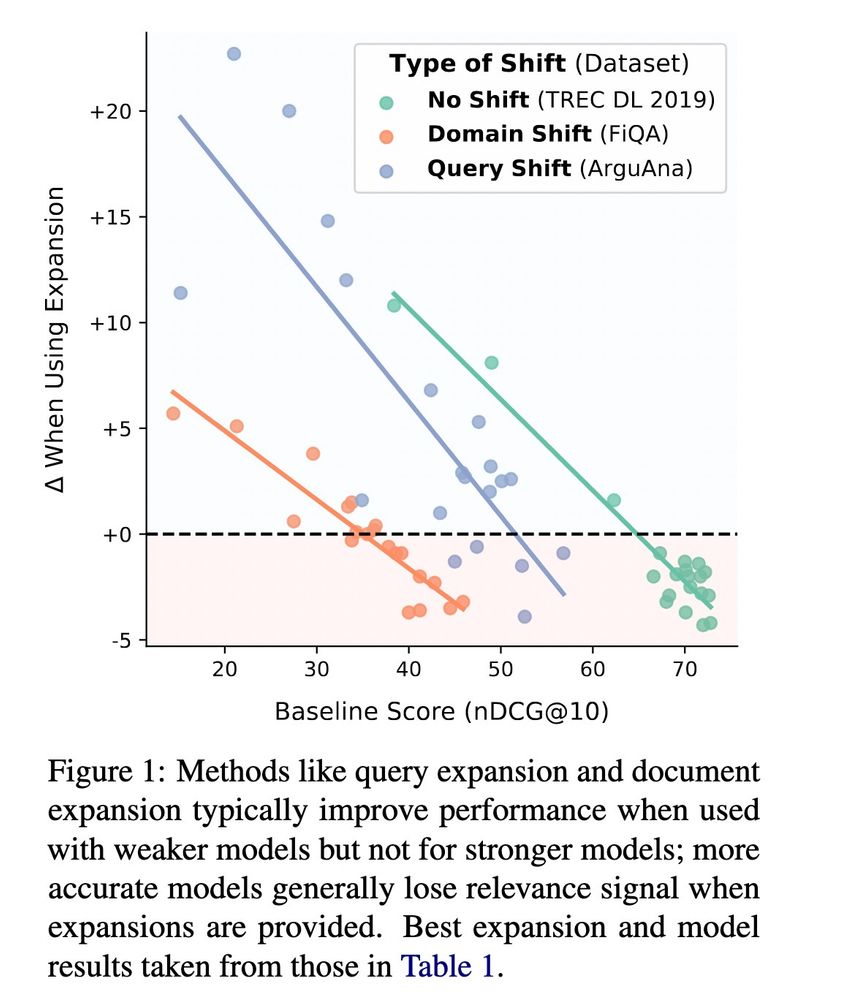

But do these approaches work for all IR models and for different types of distribution shifts? Turns out it's actually more 📉 🚨

📝 (arxiv soon): orionweller.github.io/assets/pdf/L...
