💻 Code: github.com/anyasims/sto...

A massive 🙏 to my incredible co-authors: Anya Sims, Thom Foster, @klarakaleb.bsky.social, Tuan-Duy H. Nguyen, Joseph Lee, @jfoerst.bsky.social, @yeewhye.bsky.social!

[8/8]

[7/]

COST-EFFECTIVE: StochasTok allows enhanced subword skills to be seamlessly 'retrofitted' into existing pretrained models - thus avoiding costly pretraining!

ENHANCED ROBUSTNESS: Improves resilience to alternative tokenizations! (see examples)

[6/]

LANGUAGE: As hoped, StochasTok unlocks language manipulation ability! (see task examples below)

MATH: Furthermore, StochasTok dramatically changes how models learn multi-digit addition, enabling grokking and even generalization to UNSEEN TOKENIZERS!🤯

[5/]

✅Computationally lightweight🪶

✅A simple dataset preprocessing step — No training loop or inference time changes required!🛠️

✅Compatible with ANY base tokenizer — Allows us to retrofit pretrained models!💰

✅Robust to hyperparameter choice!🔥

[4/]

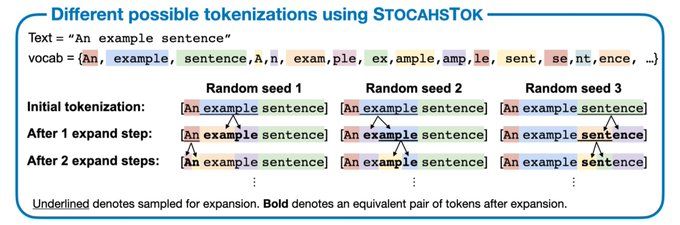

1️⃣ Simply tokenize text with ANY base tokenizer,

2️⃣ Then, stochastically split some of those tokens into equivalent token pairs.

That’s basically it! Repeat step 2 for the desired granularity.

[3/]
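
A minimal sketch of the two steps above, not the released implementation. It assumes a tiny hypothetical vocabulary (`VOCAB`) and hypothetical helper names (`build_splits`, `stochastic_split`, `num_attempts`); a real base tokenizer would supply the vocabulary and the initial token ids.

```python
import random

# Hypothetical toy vocabulary: token string -> token id (illustration only).
VOCAB = {
    "e": 0, "x": 1, "a": 2, "m": 3, "p": 4, "l": 5,
    "ex": 6, "am": 7, "pl": 8, "exam": 9, "ple": 10, "example": 11,
}
ID_TO_TOKEN = {tid: tok for tok, tid in VOCAB.items()}


def decode(token_ids):
    """Concatenate token strings back into text."""
    return "".join(ID_TO_TOKEN[t] for t in token_ids)


def build_splits(vocab):
    """For each token id, list every (left_id, right_id) pair in the
    vocabulary whose strings concatenate to that token."""
    splits = {}
    for tok, tid in vocab.items():
        pairs = [
            (vocab[tok[:i]], vocab[tok[i:]])
            for i in range(1, len(tok))
            if tok[:i] in vocab and tok[i:] in vocab
        ]
        if pairs:
            splits[tid] = pairs
    return splits


def stochastic_split(token_ids, splits, num_attempts, rng=None):
    """Step 2: pick a random position; if that token has an equivalent
    pair, replace it with one chosen at random. More attempts give a
    finer-grained tokenization of the same text."""
    rng = rng or random.Random()
    ids = list(token_ids)
    for _ in range(num_attempts):
        i = rng.randrange(len(ids))
        options = splits.get(ids[i])
        if options:  # tokens with no valid split are left unchanged
            ids[i:i + 1] = rng.choice(options)
    return ids


# Step 1 would come from the base tokenizer; here it is a single token.
base_ids = [VOCAB["example"]]
split_ids = stochastic_split(base_ids, build_splits(VOCAB), num_attempts=3)
print([ID_TO_TOKEN[t] for t in split_ids], "->", decode(split_ids))
```

Because every split replaces a token with a pair that spells the same string, the decoded text never changes; only the token boundaries the model sees do.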

🎉The solution: Allow LLMs to naturally 'see inside' tokens via alternative tokenizations!

[2/]
