•Master's in Theoretical Physics, UoM | UCLA 🪐

•Intern @zuckermanbrain.bsky.social | @SapienzaRoma | @CERN | @EPFL

https://linktr.ee/Clementine_Domine

At @gatsbyucl.bsky.social, my research explored how prior knowledge shapes neural representations.

I’m deeply grateful to my mentors, @saxelab.bsky.social and @caswell.bsky.social, my incredible collaborators, and everyone who supported me!

Continual learning, Reversal learning, Transfer learning, Fine-tuning (7/9)

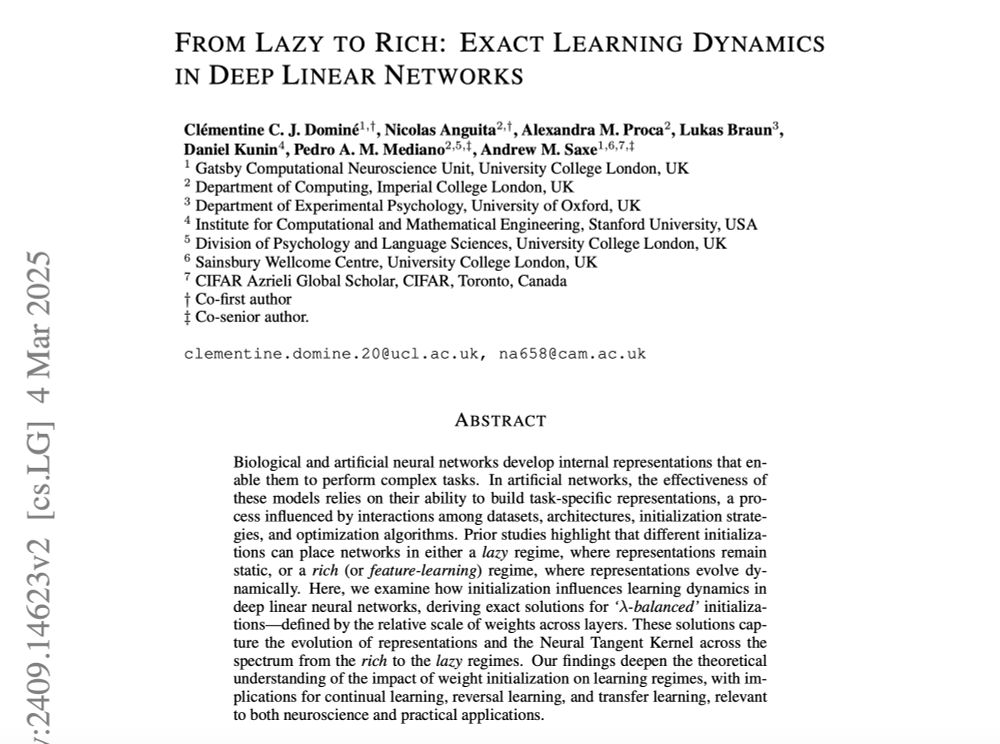

1️⃣ We derive exact solutions for gradient flow, representational similarity, and the finite-width Neural Tangent Kernel (NTK).

(5/9)
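
For intuition about the finite-width NTK, here is a small NumPy sketch for the simplest case, a bias-free two-layer linear network f(x) = W2 W1 x (an illustrative assumption; the paper's exact setting and notation may differ). For this model the kernel works out to Θ(x, x') = (xᵀx') W2 W2ᵀ + (W1 x)ᵀ(W1 x') I. It depends on the current weights, so it changes as the network learns. The code checks that formula against a Jacobian computed by finite differences; all function names are hypothetical.

```python
import numpy as np

def forward(W1, W2, x):
    """Two-layer linear network f(x) = W2 W1 x (no biases, no nonlinearity)."""
    return W2 @ (W1 @ x)

def ntk_closed_form(W1, W2, x, xp):
    """Finite-width NTK: Theta(x, x') = (x.x') W2 W2^T + ((W1 x).(W1 x')) I."""
    d_out = W2.shape[0]
    return (x @ xp) * (W2 @ W2.T) + ((W1 @ x) @ (W1 @ xp)) * np.eye(d_out)

def param_jacobian(W1, W2, x, eps=1e-6):
    """Central-difference Jacobian of f(x) w.r.t. every entry of W1 and W2.

    f is linear in each individual weight entry, so central differences
    are exact up to floating-point rounding.
    """
    theta = np.concatenate([W1.ravel(), W2.ravel()])

    def f(t):
        A = t[:W1.size].reshape(W1.shape)
        B = t[W1.size:].reshape(W2.shape)
        return forward(A, B, x)

    cols = []
    for p in range(theta.size):
        step = np.zeros_like(theta)
        step[p] = eps
        cols.append((f(theta + step) - f(theta - step)) / (2 * eps))
    return np.stack(cols, axis=1)  # shape (d_out, n_params)

rng = np.random.default_rng(0)
d_in, d_hidden, d_out = 3, 5, 2
W1 = rng.normal(size=(d_hidden, d_in))
W2 = rng.normal(size=(d_out, d_hidden))
x, xp = rng.normal(size=d_in), rng.normal(size=d_in)

# NTK as J(x) J(x')^T from the numerical Jacobian vs. the closed form
theta_fd = param_jacobian(W1, W2, x) @ param_jacobian(W1, W2, xp).T
theta_cf = ntk_closed_form(W1, W2, x, xp)
print(np.allclose(theta_fd, theta_cf, atol=1e-6))  # True
```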

Lazy learning – Representations remain mostly static

Rich/feature learning – Representations evolve dynamically

Our work investigates how initialization determines where a network falls on this spectrum. (3/9)
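
One standard fact makes the "relative" part of initialization concrete: for a two-layer linear network f(x) = W2 W1 x trained by gradient flow on a squared loss, the imbalance W1 W1ᵀ − W2ᵀ W2 is conserved, so it is fixed at initialization. The sketch below illustrates this under assumptions (a random linear teacher, full-batch gradient descent with a small step standing in for gradient flow, hypothetical helper names). With a finite step the imbalance is only approximately conserved.

```python
import numpy as np

def mse_loss(W1, W2, X, Y):
    """L = 1/(2n) * ||W2 W1 X - Y||_F^2 for a two-layer linear network."""
    return 0.5 * np.sum((W2 @ W1 @ X - Y) ** 2) / X.shape[1]

def gd_step(W1, W2, X, Y, lr):
    """One full-batch gradient descent step on mse_loss (both grads use old weights)."""
    G = (W2 @ W1 @ X - Y) @ X.T / X.shape[1]   # dL/d(W2 W1)
    return W1 - lr * W2.T @ G, W2 - lr * G @ W1.T

def imbalance(W1, W2):
    """W1 W1^T - W2^T W2: exactly conserved under gradient flow."""
    return W1 @ W1.T - W2.T @ W2

rng = np.random.default_rng(1)
d_in, d_hidden, d_out, n = 4, 8, 3, 50
X = rng.normal(size=(d_in, n))
Y = rng.normal(size=(d_out, d_in)) @ X           # random linear teacher (assumption)

# Deliberately unbalanced init: large first layer, small second layer.
W1 = 1.0 * rng.normal(size=(d_hidden, d_in))
W2 = 0.1 * rng.normal(size=(d_out, d_hidden))

D0, loss0 = imbalance(W1, W2), mse_loss(W1, W2, X, Y)
for _ in range(1000):
    W1, W2 = gd_step(W1, W2, X, Y, lr=1e-3)
loss1 = mse_loss(W1, W2, X, Y)

drift = np.linalg.norm(imbalance(W1, W2) - D0) / np.linalg.norm(D0)
print(f"loss {loss0:.3f} -> {loss1:.3f}, relative imbalance drift {drift:.1e}")
```

The loss drops while the imbalance barely moves, so whatever imbalance the initialization sets persists through training.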

arxiv.org/abs/2409.14623

A thread on how relative weight initialization shapes learning dynamics in deep networks. 🧵 (1/9)

We analyze Elastic Weight Consolidation (EWC) (Kirkpatrick et al., 2017) and show that specialization dynamics impact its effectiveness, revealing limitations in standard continual learning regularization.

(6/8)
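
For reference, EWC (Kirkpatrick et al., 2017) adds a quadratic penalty that anchors each weight to its value after the previous task A, weighted by a diagonal Fisher information estimate: L(θ) = L_B(θ) + Σᵢ (λ/2) Fᵢ (θᵢ − θ*_A,ᵢ)². Below is a minimal NumPy sketch of that penalty. The helper names and toy values are hypothetical, and the empirical Fisher (mean squared per-example gradients) is a common approximation, not necessarily what the paper uses.

```python
import numpy as np

def ewc_penalty(theta, theta_star, fisher_diag, lam):
    """EWC penalty: sum_i (lam / 2) * F_i * (theta_i - theta*_i)^2."""
    return 0.5 * lam * np.sum(fisher_diag * (theta - theta_star) ** 2)

def ewc_penalty_grad(theta, theta_star, fisher_diag, lam):
    """Gradient of the penalty w.r.t. theta: lam * F_i * (theta_i - theta*_i)."""
    return lam * fisher_diag * (theta - theta_star)

def empirical_fisher_diag(per_example_grads):
    """Diagonal empirical Fisher: mean of squared per-example gradients at theta*.

    per_example_grads has shape (n_examples, n_params).
    """
    return np.mean(per_example_grads ** 2, axis=0)

theta_star = np.array([1.0, -2.0, 0.5])   # weights after task A (toy values)
F = np.array([8.0, 0.25, 0.0])            # toy Fisher diagonal
theta = np.array([1.5, 0.0, 3.0])         # weights while training on task B
print(ewc_penalty(theta, theta_star, F, lam=1.0))  # 1.5
```

The third parameter has zero Fisher weight, so EWC lets it move freely; only parameters estimated as important for task A are anchored.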

1. In non-specialized networks, the relationship between task similarity and forgetting shifts, leading to a monotonic forgetting trend.

2. We identify initialization schemes that enhance specialization by increasing readout weight entropy and layer imbalance.

(5/8)
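
To make "readout weight entropy" tangible, here is a sketch using one plausible definition, assumed here for illustration and not taken from the paper: the Shannon entropy of the normalized magnitudes of a readout vector, pₖ = |vₖ| / Σⱼ |vⱼ|. High entropy means the output draws on many hidden units; low entropy means a few dominate. The function name is hypothetical.

```python
import numpy as np

def readout_entropy(v):
    """Shannon entropy (nats) of normalized readout magnitudes (assumed definition).

    p_k = |v_k| / sum_j |v_j|; v must have at least one nonzero entry.
    """
    p = np.abs(v) / np.sum(np.abs(v))
    p = p[p > 0]                          # treat 0 * log 0 as 0
    return float(np.sum(p * np.log(1.0 / p)))

print(readout_entropy(np.ones(4)))                    # ≈ 1.386 (= log 4, the maximum for 4 units)
print(readout_entropy(np.array([1.0, 0.0, 0.0, 0.0])))  # 0.0 (a single unit carries the readout)
```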

1. Analyzing specialization dynamics in deep linear networks (Saxe et al., 2013).

2. Extending to high-dimensional mean-field networks trained with SGD (Saad & Solla, 1995; Biehl & Schwarze, 1995). (4/8)
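
As background for the deep linear network analysis, the classic Saxe et al. (2013) result describes a single input-output mode of strength s (a singular value of the input-output correlation matrix, assuming whitened inputs) learned from a small balanced initialization a0: the mode follows a sigmoidal trajectory a(t) = s·e^(2st/τ) / (e^(2st/τ) − 1 + s/a0). The sketch integrates the scalar gradient-flow equations numerically and compares them with this formula. It illustrates the 2013 result only, not this paper's specialization analysis; helper names and parameter values are hypothetical.

```python
import numpy as np

def simulate_mode(s, a0, tau=1.0, dt=1e-4, T=4.0):
    """Euler-integrate gradient flow for a scalar two-layer linear net y = w2 * w1 * x.

    Per-mode loss (s - w1 w2)^2 / 2 gives
    tau dw1/dt = w2 (s - w1 w2),  tau dw2/dt = w1 (s - w1 w2).
    Balanced init: w1 = w2 = sqrt(a0).
    """
    w1 = w2 = float(np.sqrt(a0))
    n_steps = int(round(T / dt))
    a = np.empty(n_steps + 1)
    a[0] = w1 * w2
    for k in range(n_steps):
        err = s - w1 * w2
        w1, w2 = w1 + dt / tau * w2 * err, w2 + dt / tau * w1 * err
        a[k + 1] = w1 * w2
    t = np.linspace(0.0, n_steps * dt, n_steps + 1)
    return t, a

def saxe_closed_form(t, s, a0, tau=1.0):
    """Sigmoidal solution for a balanced mode (Saxe et al., 2013)."""
    e = np.exp(2 * s * t / tau)
    return s * e / (e - 1 + s / a0)

s, a0 = 3.0, 1e-3
t, a = simulate_mode(s, a0)
ref = saxe_closed_form(t, s, a0)
print(f"max |numerical - closed form| = {np.max(np.abs(a - ref)):.1e}")
```

Setting a = s/2 in the closed form gives a transition time of (τ/2s)·ln(s/a0 − 1), so stronger modes are learned earlier.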

Specialization isn’t guaranteed—it strongly depends on initialization. We show that weight imbalance and high weight entropy encourage specialization, influencing feature reuse and forgetting in continual learning.

(3/8)

openreview.net/forum?id=RQz...

We show how neural networks can build specialized or shared representations depending on their initialization, and that this has consequences for continual learning.

(1/8)
