https://cpsiff.github.io

Realistic isn't always best, but having accurate ground truth is definitely important, and it's a separate issue.

Few-view 3D reconstruction with high-resolution sensors: weihan1.github.io/transientang...

Handling specular / mirror surfaces:

arxiv.org/abs/2209.03336

Detecting human pose:

arxiv.org/abs/2110.114...

cpsiff.github.io/towards_3d_v...

And more on specific applications of these sensors in robotics, which use the histogram info (+ one in review, stay tuned):

cpsiff.github.io/using_a_dist...

cpsiff.github.io/unlocking_pr...

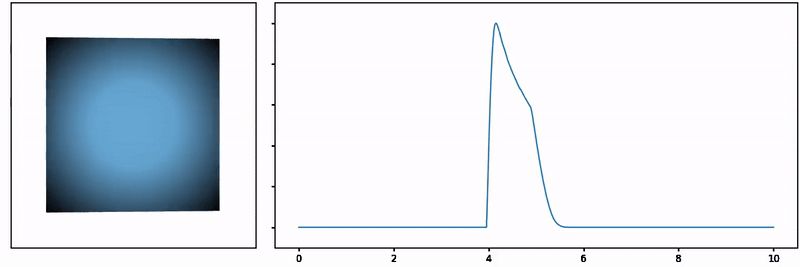

When the per-pixel FoV is wide, this histogram encodes rich information about the scene, as shown in this awesome animation by my labmate Sacha Jungerman (wisionlab.com/people/sacha...).
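
To make the wide-FoV point concrete, here is a rough toy sketch (not code from any of the linked papers). It bins the round-trip time of flight of every scene point inside one pixel's field of view into a histogram. The function name, the 100 ps bin width, the 128 bins, and the inverse-square intensity falloff are all illustrative assumptions, not properties of a specific sensor.

```python
# Toy model of a transient histogram. All names and constants here are
# illustrative assumptions, not taken from any particular sensor or paper.
C = 299_792_458.0  # speed of light in m/s


def transient_histogram(depths_m, bin_width_s=100e-12, num_bins=128):
    """Accumulate returns from every scene point inside one pixel's FoV.

    depths_m: positive distances (in meters) to the points the pixel sees.
    """
    hist = [0.0] * num_bins
    for d in depths_m:
        t = 2.0 * d / C              # round-trip time of flight in seconds
        b = int(t / bin_width_s)     # index of the time bin the return lands in
        if 0 <= b < num_bins:
            hist[b] += 1.0 / (d * d)  # assumed inverse-square intensity falloff
    return hist


# A narrow FoV sees a single depth; a wide FoV mixes several depths.
narrow = transient_histogram([1.0])
wide = transient_histogram([0.5, 1.0, 1.5])
```

In this toy model, `narrow` has a single nonzero bin while `wide` has three, one per depth in view. That is the sense in which a wide per-pixel FoV packs more scene structure into the histogram.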

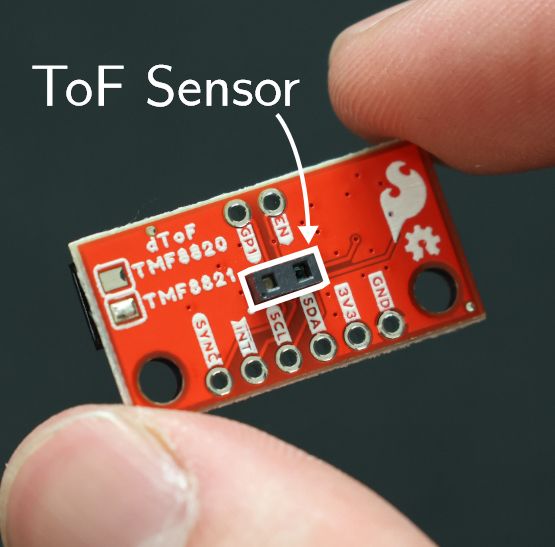

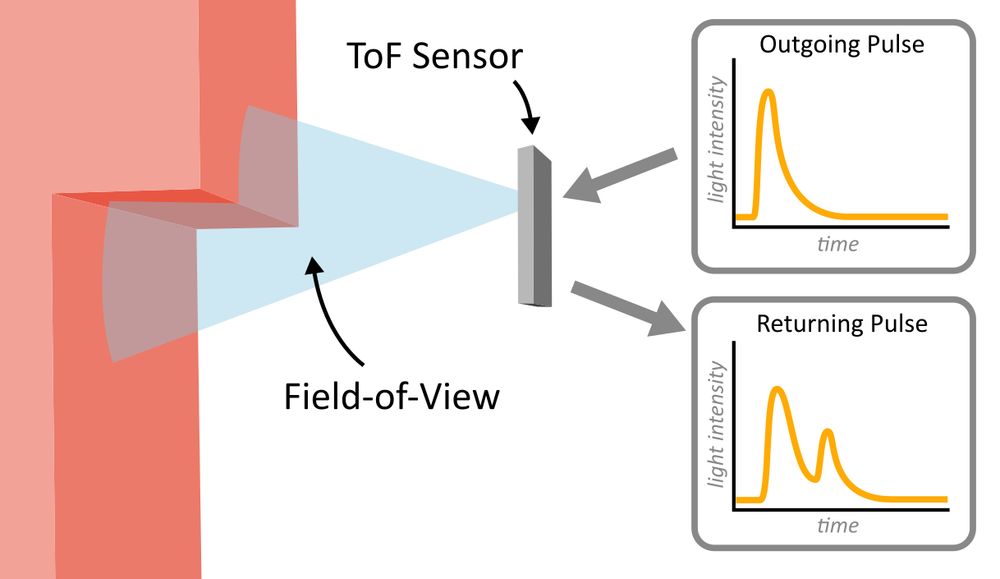

Recently, a new class of these sensors has emerged that measures the intensity of returning light over very short (pico-to-nanosecond) timescales.
