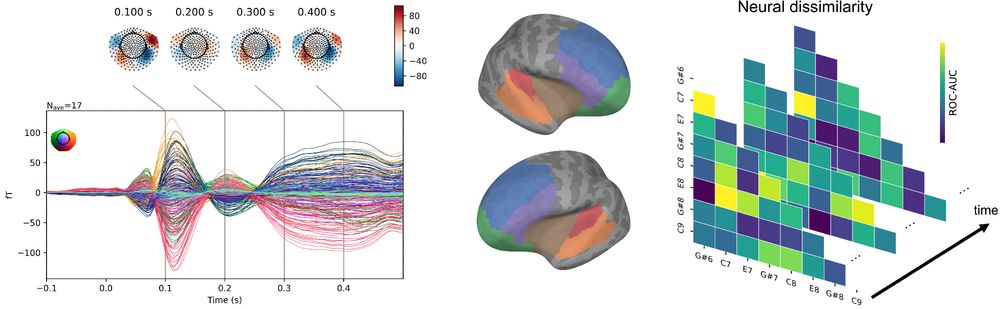

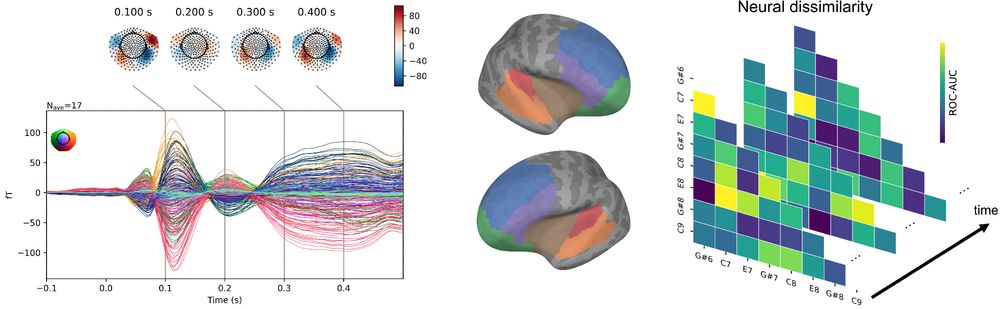

We explored an alternative: training a DNN on spectrotemporal modulation (#STM) features—an approach inspired by how the human auditory cortex processes sound.

5/n
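For readers unfamiliar with STM features: one common way to build a spectrotemporal modulation representation is to take the 2D Fourier transform of a log-magnitude spectrogram, giving a "modulation power spectrum" over temporal modulation rate (Hz) and spectral modulation scale. The sketch assumes that construction, a linear-frequency STFT (many STM pipelines use a log-frequency or cochlear filterbank instead), and the hypothetical helper names `stft_magnitude` and `stm_features`. It is an illustration only, not the feature pipeline used in this work.

```python
import numpy as np

def stft_magnitude(signal, n_fft=256, hop=64):
    """Hann-windowed STFT magnitude, shape (n_fft // 2 + 1, n_frames)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    # Each column is one windowed frame of the signal.
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)],
        axis=1,
    )
    return np.abs(np.fft.rfft(frames, axis=0))

def stm_features(signal, sr, n_fft=256, hop=64):
    """Modulation power spectrum: |2D FFT of the log spectrogram|^2 (illustrative sketch)."""
    spec = stft_magnitude(signal, n_fft, hop)
    log_spec = np.log(spec + 1e-8)   # small offset avoids log(0)
    log_spec -= log_spec.mean()      # remove the global DC level
    mps = np.abs(np.fft.fftshift(np.fft.fft2(log_spec))) ** 2
    # Rows: spectral modulation in cycles/Hz (bin spacing sr / n_fft Hz).
    spectral_mod = np.fft.fftshift(np.fft.fftfreq(log_spec.shape[0], d=sr / n_fft))
    # Columns: temporal modulation in Hz (frame spacing hop / sr seconds).
    temporal_mod = np.fft.fftshift(np.fft.fftfreq(log_spec.shape[1], d=hop / sr))
    return mps, spectral_mod, temporal_mod

# Usage: a 440 Hz tone amplitude-modulated at 4 Hz, 1 s at 8 kHz.
sr = 8000
t = np.arange(sr) / sr
x = (1 + 0.5 * np.sin(2 * np.pi * 4 * t)) * np.sin(2 * np.pi * 440 * t)
mps, spectral_mod, temporal_mod = stm_features(x, sr)
```

A DNN trained "on STM features" would take `mps` (or a pooled or cropped version of it) as input instead of the raw waveform or spectrogram; how the features were actually parameterized here is not stated in the thread.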

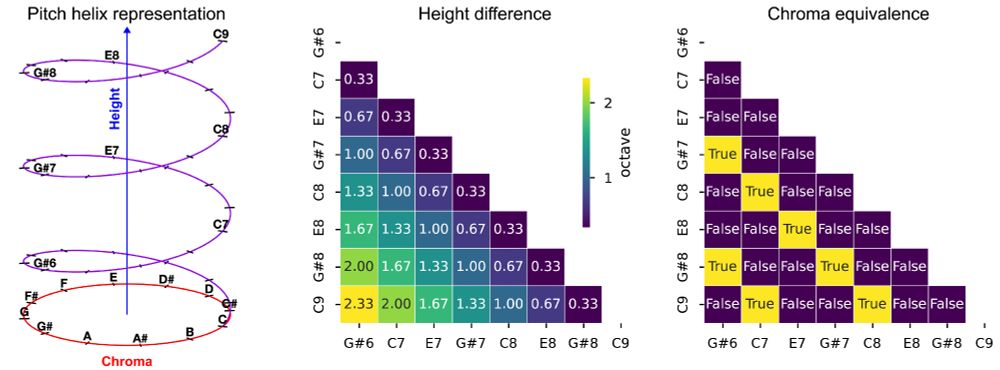

4/n

3/n