Can Demircan

@candemircan.bsky.social

PhD student in Munich, working on machine learning and cognitive science

Reposted by Can Demircan

In the first paper, @candemircan.bsky.social and @tankred-saanum.bsky.social use sparse autoencoders to show that LLMs can implement temporal difference learning in context. This is joint work with Akshay Jagadish and @marcelbinz.bsky.social.

arxiv.org/abs/2410.01280

Sparse Autoencoders Reveal Temporal Difference Learning in Large Language Models

In-context learning, the ability to adapt based on a few examples in the input prompt, is a ubiquitous feature of large language models (LLMs). However, as LLMs' in-context learning abilities continue...

January 22, 2025 at 8:09 PM

Many thanks to @tankred-saanum.bsky.social @marcelbinz.bsky.social @mgarvert.bsky.social @ericschulz.bsky.social and Doeller lab

Check the paper here: arxiv.org/abs/2306.09377

Reach out if you're at NeurIPS and wanna talk about representational alignment, mechanistic interpretability, or CogSci!

Evaluating alignment between humans and neural network representations in image-based learning tasks

Humans represent scenes and objects in rich feature spaces, carrying information that allows us to generalise about category memberships and abstract functions with few examples. What determines wheth...

December 10, 2024 at 3:39 PM

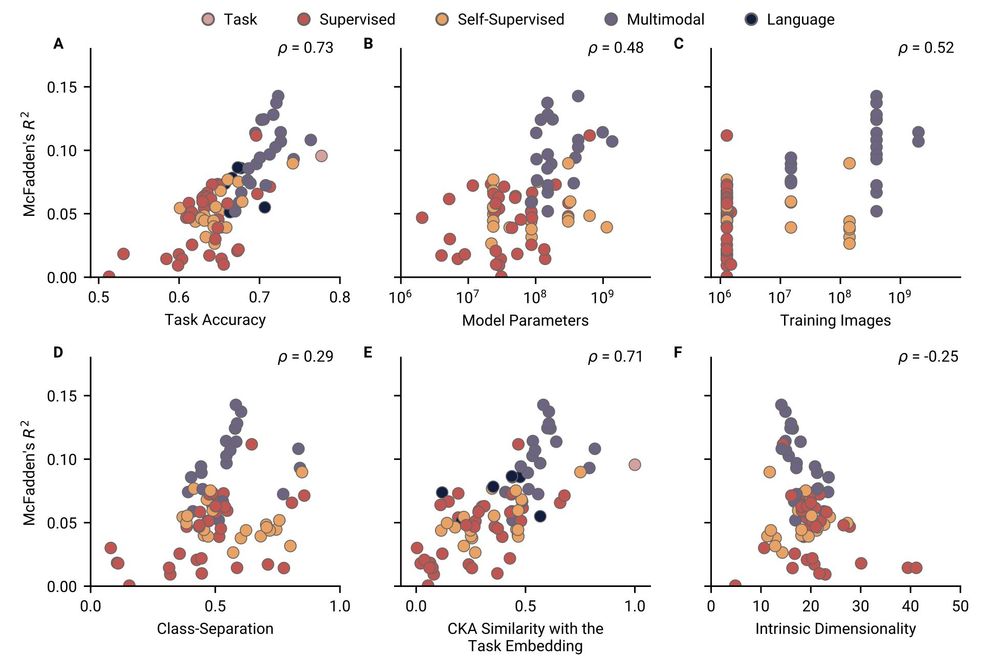

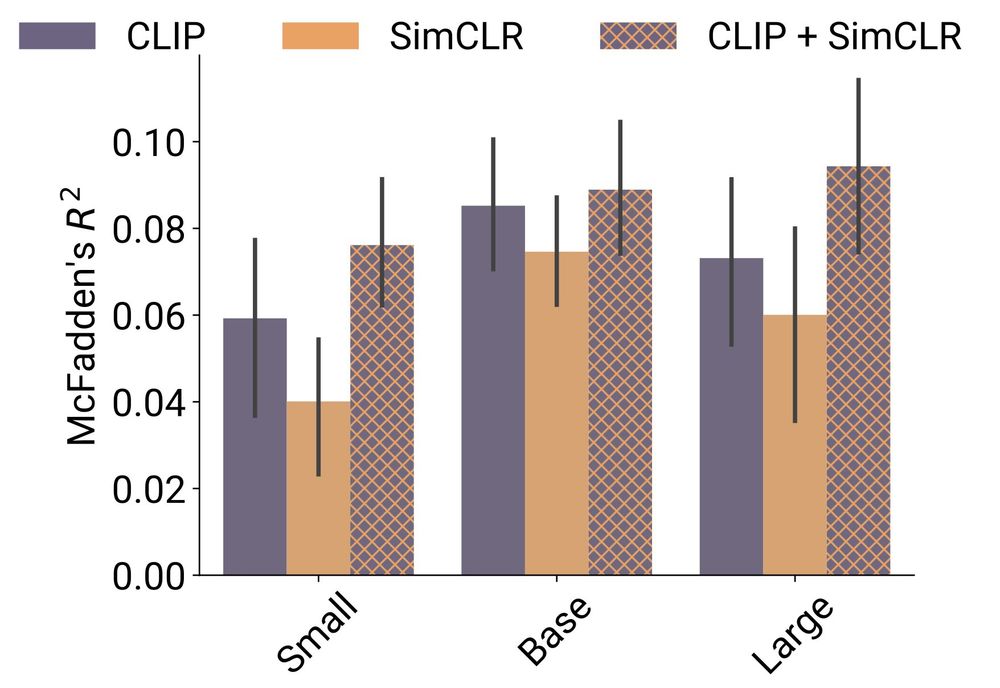

Lastly, we found that previously established alignment methods do not consistently yield better results compared to non-aligned baselines.

December 10, 2024 at 3:39 PM

Several other factors were important for alignment, such as model size, how separated class representations were, and intrinsic dimensionality.

December 10, 2024 at 3:39 PM

In additional analyses, we found that this cannot be fully attributed to pretraining data size.

December 10, 2024 at 3:39 PM

CLIP-style models predicted human choices the best across the tasks, suggesting multimodal pretraining is important for representational alignment.

December 10, 2024 at 3:39 PM

We tested humans on reward and category learning tasks using naturalistic images, where the underlying functions were generated using the THINGS embedding.

December 10, 2024 at 3:39 PM

tried messaging you, but the app says you cannot be messaged

December 5, 2024 at 4:46 PM
