Whether or not you’re closely following capabilities/safety progress, it’s an incredibly useful resource: a rigorous, concise, & well-evidenced summary of developments!

(1/10)

Yes, LLMs can now pass the Turing test, but don’t confuse this with AGI, which is a long way off.

arxiv.org/abs/2503.23674

GPT-4.5 (when prompted to adopt a humanlike persona) was judged to be the human 73% of the time, suggesting it passes the Turing test (🧵)

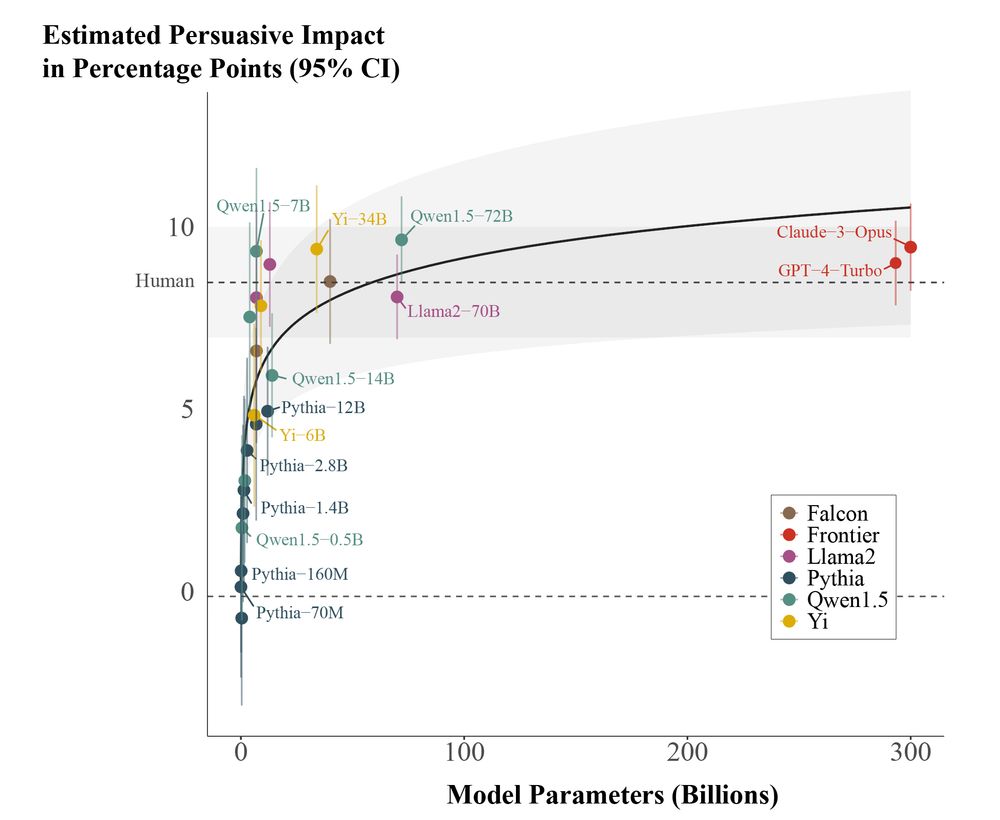

In a large pre-registered experiment (n=25,982), we find evidence that scaling the size of LLMs yields sharply diminishing persuasive returns for static political messages.

🧵:

We cover domestication syndrome, plasticity-led evolution, soft inheritance, animal traditions, how culture shapes evolution, and more.

Kensy also does a wonderful production job, turning me into a coherent speaker! Thank you!

A conversation w/ @kevinlala.bsky.social about his new (co-authored) book, ‘Evolution Evolving’!

Ideas about evolution have changed a lot in recent decades. An emerging view—synthesized by Lala et al.—puts developmental processes front and center.

Listen: disi.org/the-developm...

camrobjones.substack.com/p/notes-from...

See if you can tell the difference between a human and an AI here: turingtest.live

arxiv.org/abs/2412.17128

You can play now! (For the next hour, and then every day from 8-9am and 3-4am ET).

It uses a 3-player format where you talk to a human and an LLM simultaneously and have to decide which is which.

See if you can tell the difference between a human and an AI!
