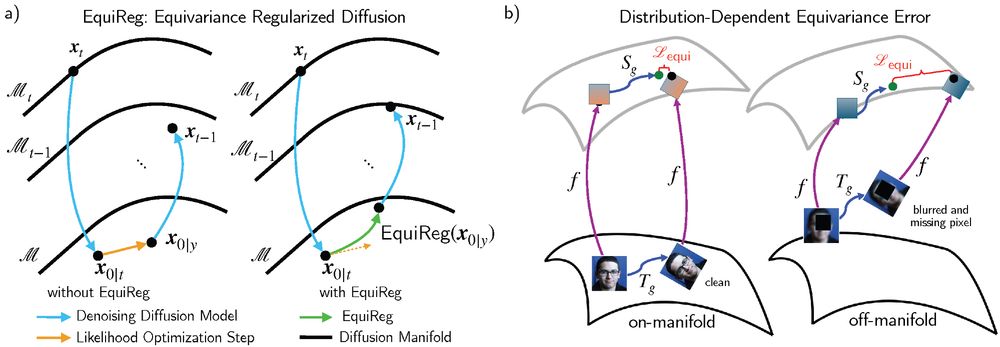

a) Training-induced: equivariance emerges in encoder-decoder architectures trained with symmetry-preserving augmentations;

b) Data-inherent: MPE arises from inherent data symmetries, commonly observed in physical systems. 9/
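To make "equivariance" concrete: a map f is equivariant to a transformation g if f(g·x) = g·f(x). Below is a minimal sketch (not the authors' code) that measures how far a map is from equivariance. The cyclic-shift symmetry and the two toy maps are hypothetical stand-ins, not the models or data from this work.

```python
import numpy as np

def equivariance_error(f, g_in, g_out, x):
    """Relative error ||f(g_in(x)) - g_out(f(x))|| / ||g_out(f(x))||.

    An exactly equivariant map gives ~0; larger values mean the symmetry
    is only approximately respected.
    """
    lhs = f(g_in(x))   # transform the input, then apply the map
    rhs = g_out(f(x))  # apply the map, then transform the output
    return np.linalg.norm(lhs - rhs) / (np.linalg.norm(rhs) + 1e-12)

def shift(x, k=3):
    # Hypothetical symmetry: cyclic shift of a 1-D signal by k samples.
    return np.roll(x, k)

def circular_conv(x, kernel=np.array([0.25, 0.5, 0.25])):
    # Circular convolution via FFT: shift-equivariant by construction.
    padded = np.zeros_like(x)
    padded[: kernel.size] = kernel
    return np.real(np.fft.ifft(np.fft.fft(x) * np.fft.fft(padded)))

def position_weighted(x):
    # Scales each sample by a position-dependent weight: NOT shift-equivariant.
    return x * np.linspace(0.0, 1.0, x.size)

rng = np.random.default_rng(0)
x = rng.standard_normal(64)
print(equivariance_error(circular_conv, shift, shift, x))      # close to 0
print(equivariance_error(position_weighted, shift, shift, x))  # clearly > 0
```

A learned encoder-decoder can be probed the same way by passing it as `f`; an error near zero on held-out inputs suggests the symmetry was picked up, whether from augmentations or from the data itself.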

@aditijc.bsky.social, Rayhan Zirvi, Abbas Mammadov, @jiacheny.bsky.social, Chuwei Wang, @anima-anandkumar.bsky.social 1/

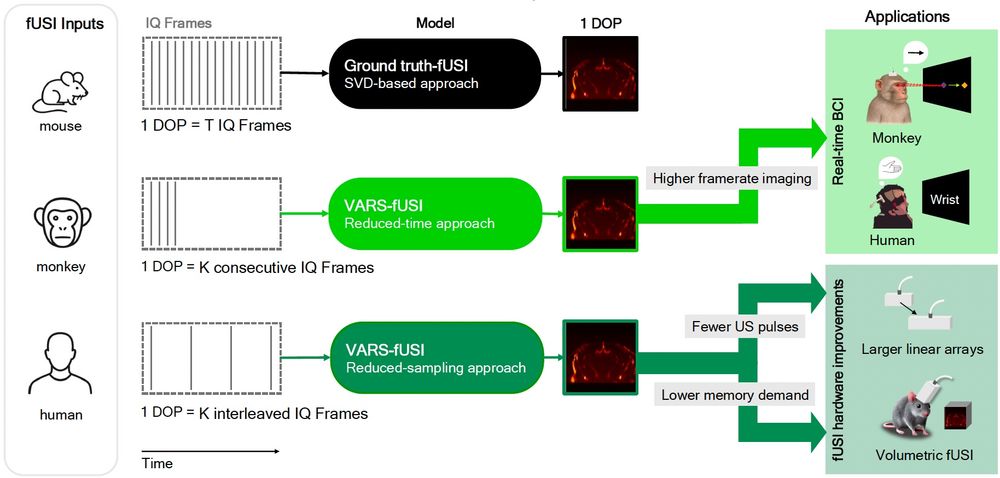

- It respects the complex-valued nature of the data,

- It treats the temporal aspect of the data as a continuous function using neural operators,

- It captures decomposed spatially local and temporally global features. 8/

VARS-fUSI offers a training pipeline, a fine-tuning procedure, and an accelerated, low-latency online imaging system. You can use VARS-fUSI to reduce the acquisition duration or rate. 7/

We applied VARS-fUSI to brain images collected from mouse, monkey, and human. Our method achieves state-of-the-art performance and shows superior generalization to unseen sessions in new animals, and even across species. 6/

The first deep learning fUSI method to allow for different sampling durations and rates during training and inference. biorxiv.org/content/10.1... 1/

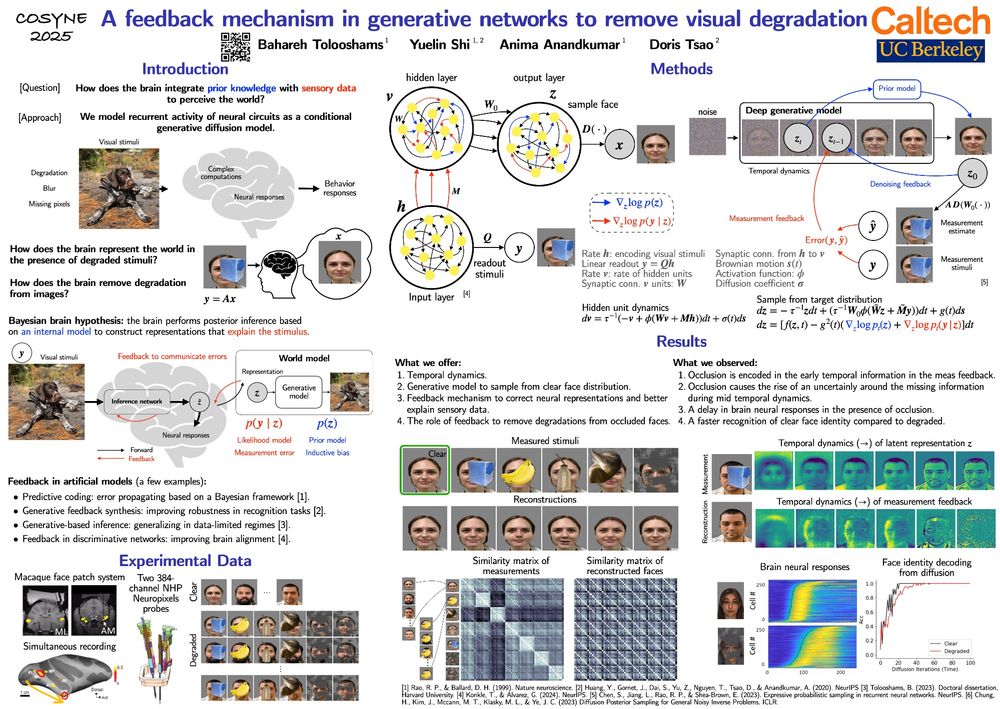

Come check out our poster [1-090] at #cosyne2025:

"A feedback mechanism in generative networks to remove visual degradation," joint work with Yuelin Shi, @anima-anandkumar.bsky.social, and Doris Tsao. 1/2