- Mega Cache looks nice, unclear whether those modules get cached by legacy mechanisms too

- foreach map looks good for optimizers

- trainable biases means you can now train T5 on flex

- prologue fusion is hype

- context parallel brings ring attention

pytorch.org/blog/pytorch...

highlights:

- flex attention: better compilation of blockmask creation, better support for dynamic shapes

- cuDNN SDPA: fixes for memory layout

- CUDA 12.6

- python 3.13

- MaskedTensor memory leak fix

mostly I'm looking forward to better compilation of flex block mask creation, and better support for flex attention on dynamic shapes.

there are also fixes for memory layout in cuDNN SDPA.

dev-discuss.pytorch.org/t/pytorch-re...
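
for anyone who hasn't used flex attention: a block mask records, for each tile of the attention matrix, whether the tile can be skipped, computed without masking, or needs the per-element mask. below is a rough pure-Python sketch of that idea, not PyTorch's implementation. `classify_blocks` and `causal` are made-up names for illustration, and the real `create_block_mask` works on tensors. the naive version evaluates the mask once per (query, key) pair, which hints at why compiling this step matters.

```python
from typing import Callable, List


def causal(q_idx: int, kv_idx: int) -> bool:
    """Example mask function: a query attends to itself and earlier keys."""
    return q_idx >= kv_idx


def classify_blocks(
    mask_mod: Callable[[int, int], bool],
    q_len: int,
    kv_len: int,
    block_size: int,
) -> List[List[str]]:
    """Label every (query block, key block) tile as full, partial or empty."""
    grid = []
    for q0 in range(0, q_len, block_size):
        row = []
        for k0 in range(0, kv_len, block_size):
            # Evaluate the mask for every position inside this tile.
            hits = [
                mask_mod(q, k)
                for q in range(q0, min(q0 + block_size, q_len))
                for k in range(k0, min(k0 + block_size, kv_len))
            ]
            if all(hits):
                row.append("full")      # no per-element masking needed
            elif any(hits):
                row.append("partial")   # needs the per-element mask
            else:
                row.append("empty")     # tile can be skipped entirely
        grid.append(row)
    return grid


grid = classify_blocks(causal, q_len=8, kv_len=8, block_size=4)
for row in grid:
    print(row)
```

with a causal mask, tiles above the diagonal come out empty and tiles below it come out full, so only the diagonal tiles need element-wise masking.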

heat rises. "the top is cool enough to drink" implies "everything below it is colder".

drinking from the bottom lets us access safe temperatures earlier and before the whole cup cools.

github.com/npm/cli/issu...

pip install huggingface_hub[hf_transfer]

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download

then you realize it was 111 secs

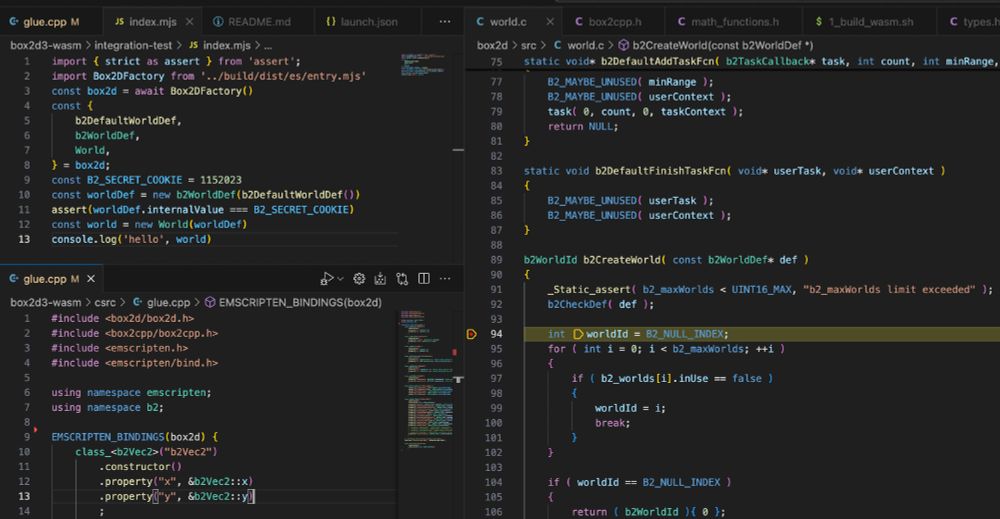

so we don't have to click terminate a hundred times

github.com/microsoft/vs...

github.com/facebookrese...

github.com/NVlabs/edm2

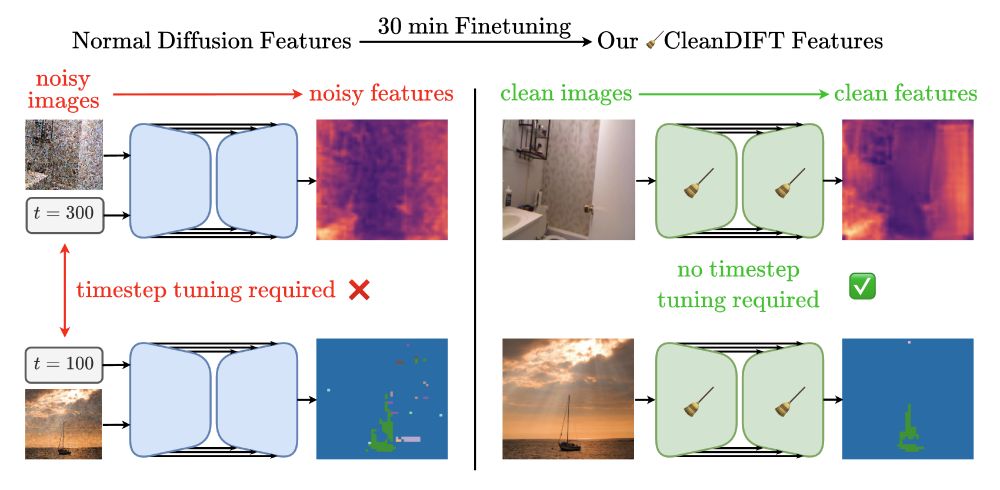

Yes, it is - but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇

I measure suites of shapes, log recompiles, and check which shapes require warmup.

per-operation compile is competitive with whole-model compile.

some operations do better with the compiler disabled.

dynamic shapes are often slow.
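
a minimal sketch of that kind of measurement, using only the standard library. the names `measure`, `needs_warmup` and `fake_compiled_op` are hypothetical, and the fake op simulates a recompile with a sleep the first time it sees a shape. in real use `fn` would build an input of the given shape, call the compiled operation, and synchronize the device before the timer stops.

```python
import time
from typing import Callable, Dict, Iterable, Tuple

Shape = Tuple[int, ...]


def measure(
    fn: Callable[[Shape], object],
    shapes: Iterable[Shape],
    repeats: int = 3,
) -> Dict[Shape, Dict[str, float]]:
    """Time the first call and the best repeat call of fn for each shape."""
    results = {}
    for shape in shapes:
        start = time.perf_counter()
        fn(shape)
        first = time.perf_counter() - start

        steady = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            fn(shape)
            steady = min(steady, time.perf_counter() - start)

        results[shape] = {"first": first, "steady": steady}
    return results


def needs_warmup(timing: Dict[str, float], ratio: float = 5.0) -> bool:
    """Flag shapes whose first call is much slower than later calls."""
    return timing["first"] > ratio * timing["steady"]


_seen = set()


def fake_compiled_op(shape: Shape) -> int:
    """Stand-in for a compiled op: slow the first time a shape appears."""
    if shape not in _seen:
        _seen.add(shape)
        time.sleep(0.02)  # pretend this is a recompile
    return sum(shape)


report = measure(fake_compiled_op, [(8, 64), (16, 64), (32, 64)])
for shape, timing in report.items():
    print(shape, "needs warmup:", needs_warmup(timing))
```

running the same suite against an uncompiled version of the operation gives the baseline for deciding where the compiler should stay off.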

Our answer: They’re two sides of the same coin. We wrote a blog post to show how diffusion models and Gaussian flow matching are equivalent. That’s great: It means you can use them interchangeably.
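
a small numeric check of what "interchangeable" can mean in practice. it assumes one common convention that the post itself does not state: the noisy sample is z_t = (1 - t) * x + t * eps, with data x at t = 0 and noise eps at t = 1, so the flow matching target velocity is eps - x. under that assumption a noise prediction converts to a velocity by first recovering the implied data estimate. `velocity_from_eps` is a hypothetical helper name.

```python
import random


def velocity_from_eps(z_t: float, eps_hat: float, t: float) -> float:
    """Convert a noise prediction into a flow matching velocity.

    Assumes z_t = (1 - t) * x + t * eps, so the target velocity is eps - x.
    Not defined at t = 1, where z_t carries no information about x.
    """
    x_hat = (z_t - t * eps_hat) / (1.0 - t)  # data estimate implied by eps_hat
    return eps_hat - x_hat


random.seed(0)
x = random.gauss(0.0, 1.0)    # a "data" sample
eps = random.gauss(0.0, 1.0)  # a noise sample
t = 0.3
z_t = (1.0 - t) * x + t * eps

# With a perfect noise prediction the converted velocity equals eps - x.
u = velocity_from_eps(z_t, eps_hat=eps, t=t)
print(abs(u - (eps - x)) < 1e-9)
```

simplifying the same algebra gives u = (eps_hat - z_t) / (1 - t), so under this convention the two parameterizations differ only by a time-dependent rescaling and shift.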

www.youtube.com/watch?v=tuDA...
