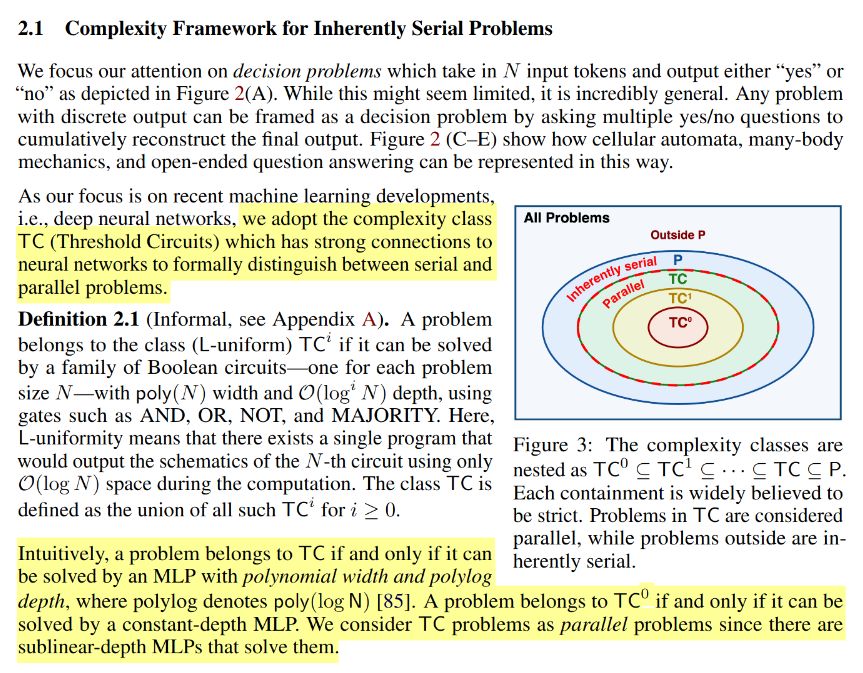

- not all solutions require serial computation, even for problems outside of TC0

- approximations can get pretty close, and oftentimes we don't expect anything much better than an approximation

Note that there's an interesting operating mode attached to being able to self-assess: generate multiple options, then pick the best one (a kind of self-Monte Carlo?)
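The generate-then-pick mode can be sketched as best-of-n sampling. This is only a toy illustration, not anything taken from the paper: `best_of_n`, `noisy_guess`, and `self_score` are hypothetical names, the "model" is a random guesser for sqrt(2), and the self-assessment checks a guess by squaring it, so it never needs to know the answer.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def best_of_n(generate: Callable[[], T], score: Callable[[T], float], n: int) -> T:
    """Draw n independent candidates and keep the one the scorer rates highest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)


# Hypothetical stand-ins for a model and its self-assessment.
rng = random.Random(0)  # seeded so the sketch is reproducible


def noisy_guess() -> float:
    """Toy 'generator': a random guess for sqrt(2) somewhere in [1, 2]."""
    return rng.uniform(1.0, 2.0)


def self_score(x: float) -> float:
    """Toy 'self-assessment': verifying a guess is easier than producing it."""
    return -abs(x * x - 2.0)


best = best_of_n(noisy_guess, self_score, n=32)
```

The candidates are independent, so they can be generated in parallel; the only serial step is the final comparison. The scheme only helps to the extent that the scorer is more reliable than a single draw from the generator.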

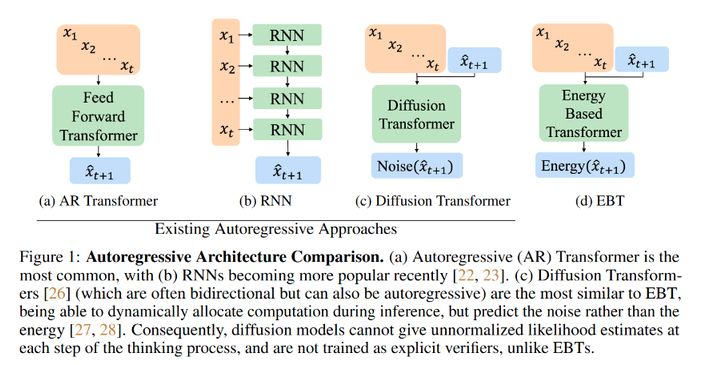

The paper runs pretty deep beyond the initial handwave, which is nice and intuitive (the model essentially predicts a step, not the final distribution)
