cowleygroup.cshl.edu

One paper that molded my research direction was "Sequential optimal design of neurophysiology experiments" by Lewi, Butera, and Paninski (2009).

The key idea is to let the model speak for itself by choosing its own stimuli in closed-loop experiments!
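The sketch below illustrates the closed-loop idea with a minimal infomax loop. Lewi et al. work with generalized linear models and a Gaussian approximation to the posterior. This sketch swaps in a simpler linear-Gaussian neuron model (an assumption) so that the Bayesian update is exact and the most informative stimulus is simply the candidate with the largest posterior predictive variance xᵀΣx. The function names, candidate set, and simulated neuron are all hypothetical.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(m, v):
    return [dot(row, v) for row in m]

def predictive_variance(cov, x):
    # x^T Sigma x: how uncertain the model is about the response to stimulus x.
    return dot(x, matvec(cov, x))

def choose_stimulus(cov, candidates):
    # Linear-Gaussian case: information gain = 0.5 * log(1 + x^T Sigma x / noise_var),
    # which grows with x^T Sigma x, so infomax picks the max-variance candidate.
    return max(candidates, key=lambda x: predictive_variance(cov, x))

def update_posterior(mean, cov, x, y, noise_var):
    # Exact rank-one Bayesian update after observing response y to stimulus x.
    sx = matvec(cov, x)
    denom = noise_var + dot(x, sx)
    err = y - dot(x, mean)
    new_mean = [m + s * err / denom for m, s in zip(mean, sx)]
    new_cov = [[cov[i][j] - sx[i] * sx[j] / denom for j in range(len(sx))]
               for i in range(len(sx))]
    return new_mean, new_cov

def run_closed_loop(true_w, candidates, n_trials, noise_var=0.01, seed=0):
    rng = random.Random(seed)
    d = len(true_w)
    mean = [0.0] * d
    cov = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]  # prior N(0, I)
    for _ in range(n_trials):
        x = choose_stimulus(cov, candidates)                       # model picks the stimulus
        y = dot(true_w, x) + rng.gauss(0.0, noise_var ** 0.5)      # simulated neuron responds
        mean, cov = update_posterior(mean, cov, x, y, noise_var)   # model updates its beliefs
    return mean, cov
```

In this linear-Gaussian toy, the posterior covariance does not depend on the observed responses, so the stimulus sequence could be planned in advance. With a GLM, the covariance does depend on the responses, which is what makes the design genuinely sequential.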

Overall, our main hypothesis is that the DNN models we use in computational neuroscience are needlessly large. We should strive for DNN models that are predictive *and* explainable.

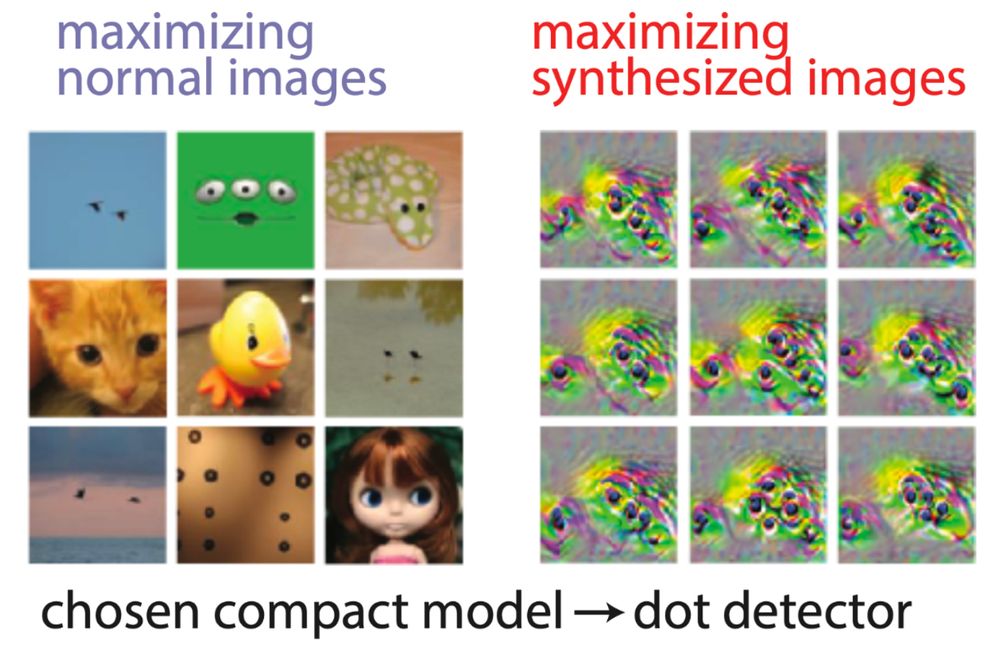

We found a simple mechanism for dot size selectivity:

Detect the four corners of the dot and inhibit dots with large edges.

200+ compact models to go!
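The mechanism can be illustrated with a hand-built toy. This is hypothetical and does not reproduce the compact model's actual filters. A square dot always has four corners, but its edge length grows with its size. Corner-driven excitation minus edge-driven inhibition, followed by rectification, therefore yields a response that falls off as the dot grows. The square dots, the weights `w_corner` and `w_edge`, and the grid size are assumptions chosen for illustration.

```python
def make_dot(grid, size):
    # Binary grid x grid image with a size x size square "dot" near the center.
    start = (grid - size) // 2
    return [[1 if start <= r < start + size and start <= c < start + size else 0
             for c in range(grid)] for r in range(grid)]

def on(img, r, c):
    # Pixels outside the image count as "off".
    return 0 <= r < len(img) and 0 <= c < len(img[0]) and img[r][c] == 1

def count_corners_and_edges(img):
    corners = edges = 0
    for r in range(len(img)):
        for c in range(len(img[0])):
            if not on(img, r, c):
                continue
            vert = [on(img, r - 1, c), on(img, r + 1, c)]
            horiz = [on(img, r, c - 1), on(img, r, c + 1)]
            if sum(vert) == 1 and sum(horiz) == 1:
                corners += 1  # one vertical + one horizontal neighbor: an L-shaped corner
            if not all(vert + horiz):
                edges += 1    # at least one "off" neighbor: a boundary pixel
    return corners, edges

def dot_response(img, w_corner=1.0, w_edge=0.25):
    corners, edges = count_corners_and_edges(img)
    # Excitation from corners, inhibition proportional to edge length, then rectification.
    return max(0.0, w_corner * corners - w_edge * edges)
```

With these weights, the toy responds with 3.0, 2.0, 1.0, and 0.0 to 2-, 3-, 4-, and 6-pixel dots. A single-pixel dot has no L-shaped corners here and also gives 0.0.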

We put this to the test with my favorite compact model, a dot detector. How does it work?

Across all models, we found a common motif: The compact models share similar filters in early layers but then heavily specialize via a consolidation step.
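One hypothetical way to quantify "similar filters in early layers" is to match each filter of one model to its most similar filter in another model, using cosine similarity of the flattened weights. The best-match scores are then averaged per layer. Under the motif described above, that average would be high for early layers and would drop after the consolidation step. The function names and the choice of cosine similarity are assumptions.

```python
import math

def cosine(a, b):
    # Cosine similarity between two flattened filters.
    num = sum(x * y for x, y in zip(a, b))
    return num / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def best_match_similarity(filters_a, filters_b):
    # For each filter in model A, its highest cosine similarity to any filter in model B.
    return [max(cosine(fa, fb) for fb in filters_b) for fa in filters_a]

def layer_similarity(filters_a, filters_b):
    # Average best-match score: near 1 if model B contains close copies of A's filters.
    scores = best_match_similarity(filters_a, filters_b)
    return sum(scores) / len(scores)
```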

We used the compact models to identify adversarial images: small perturbations to the input that lead to large changes in V4 responses.

These adversarial images worked on V4 neurons!
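The section does not say how the adversarial images were found. A common recipe, swapped in here as an assumption, is the fast gradient sign method (FGSM). It nudges every pixel by ±eps in the direction of the gradient of the model's response. For a locally linear model, this is the largest change achievable under an L∞ budget of eps. The toy model and its weights are hypothetical. The gradient is estimated by finite differences, so the sketch needs no autodiff library.

```python
def toy_model(x, w=(0.8, -0.5, 0.3, 0.1), b=0.2):
    # Hypothetical stand-in for a compact model: one rectified linear unit.
    return max(0.0, sum(wi * xi for wi, xi in zip(w, x)) + b)

def numerical_grad(f, x, h=1e-5):
    # Central finite differences, one input dimension ("pixel") at a time.
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        grad.append((f(xp) - f(xm)) / (2 * h))
    return grad

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(f, x, eps):
    # Fast gradient sign method: move each pixel by +eps or -eps, whichever
    # increases the response (flip the sign of eps to decrease it instead).
    g = numerical_grad(f, x)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]
```

Starting from x = [0.5, 0.5, 0.5, 0.5] with eps = 0.05, the toy response rises by eps times the sum of the absolute weights (0.05 × 1.7 = 0.085). No other ±eps pattern does better.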

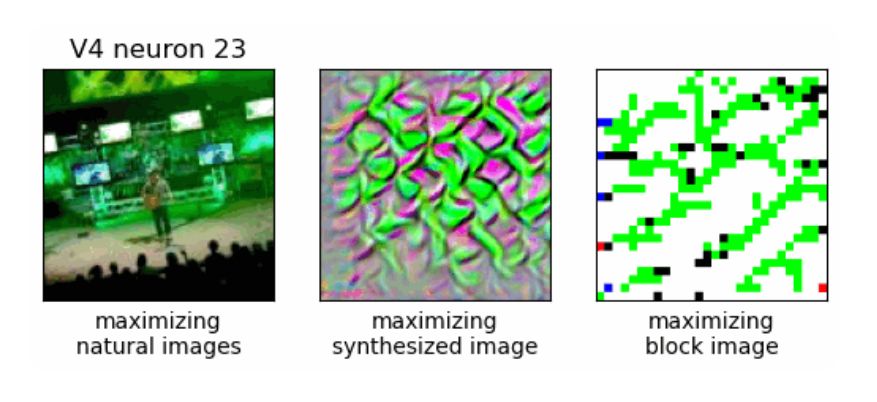

1. We trained compact models on previous sessions.

2. Identified each model's maximizing images.

3. Recorded V4 responses to these images on a future session.

These 'preferred stimuli' did yield larger responses (red dots) than those to natural images (black dots).
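Step 2 can be sketched as activation maximization. This means gradient ascent on the input image, with each step projected back onto the unit sphere so the image's overall contrast stays fixed. The unit-norm constraint, step size, starting image, and rectified-linear toy model are all assumptions. For that toy model, the constrained optimum is known in closed form (w/‖w‖), which makes the result easy to check.

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def maximize_response(grad_fn, dim, steps=200, lr=0.1, start=None):
    # Projected gradient ascent: step uphill on the model's response, then
    # rescale to unit norm so the image's overall contrast stays fixed.
    x = normalize(start if start is not None else [1.0] * dim)
    for _ in range(steps):
        g = grad_fn(x)
        x = normalize([xi + lr * gi for xi, gi in zip(x, g)])
    return x

# Hypothetical compact model: response = relu(w . x).
w = [3.0, -1.0, 2.0]

def toy_grad(x):
    # Gradient of relu(w . x) with respect to the image x.
    active = sum(wi * xi for wi, xi in zip(w, x)) > 0
    return list(w) if active else [0.0] * len(w)

preferred = maximize_response(toy_grad, dim=3)
```

A starting image with zero response would get stuck, because the rectified model has no gradient there. Real pipelines typically start from noise or from a natural image that already drives the model.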

For example, we found what appears to be a palm-tree-detecting V4 neuron: its maximizing natural and synthesized images looked like palm trees.

To make sure this was real, we ran causal tests.

We can display *all* the convolutional weights of a compact model in one figure!

We relied on two more ML tricks, knowledge distillation and pruning, to compress the deep ensemble model into compact models.
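Below is a minimal sketch of both tricks under simplifying assumptions. The "teacher" is the average of a hypothetical ensemble of linear models. The student is fit to the teacher's outputs on random inputs, since distillation needs only the teacher's predictions, not new neural data. Pruning then keeps only the largest-magnitude weights. In practice, the student would be a much smaller DNN, and pruning would typically remove whole filters with retraining in between. The paper's exact procedure may differ.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical ensemble members (linear models with 4 input weights each).
ensemble = [[1.0, 0.1, -0.5, 0.02], [0.8, -0.1, -0.7, -0.02], [1.2, 0.0, -0.6, 0.0]]

def teacher(x):
    # Deep-ensemble stand-in: average prediction of the members.
    return sum(dot(m, x) for m in ensemble) / len(ensemble)

def distill(teacher, dim, steps=3000, lr=0.1, seed=0):
    # Fit a linear student to the teacher's outputs on random inputs with SGD.
    rng = random.Random(seed)
    w = [0.0] * dim
    for _ in range(steps):
        x = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        err = dot(w, x) - teacher(x)
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

def magnitude_prune(w, keep):
    # Keep the `keep` largest-magnitude weights and zero out the rest.
    ranked = sorted(range(len(w)), key=lambda i: abs(w[i]), reverse=True)
    kept = set(ranked[:keep])
    return [wi if i in kept else 0.0 for i, wi in enumerate(w)]

student = distill(teacher, dim=4)
compact = magnitude_prune(student, keep=2)
```

Here the student converges to the ensemble's average weights, and pruning keeps only the two weights that matter.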

We first obtained a highly predictive, data-driven DNN by using every ML trick in the book (transfer learning, ensemble learning, active learning, etc.), and we collected lots of responses: 45 sessions, ~75k unique images.
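The section names ensemble learning and active learning without details. One common pairing, assumed here, is to predict with the ensemble average. The next images to show are then chosen by how much the ensemble members disagree (query by committee). The members below are hypothetical stand-ins that map a scalar "image" to a response; real members would be DNNs taking images.

```python
import statistics

def ensemble_predict(members, x):
    # Ensemble prediction: the average of the members' predictions.
    return statistics.fmean(m(x) for m in members)

def disagreement(members, x):
    # Spread (population variance) of the members' predictions for the same input.
    return statistics.pvariance([m(x) for m in members])

def pick_most_uncertain(members, candidates, k):
    # Query by committee: the k candidate images the ensemble disagrees on most.
    return sorted(candidates, key=lambda x: disagreement(members, x), reverse=True)[:k]

# Hypothetical members: each maps a scalar "image" to a predicted response.
members = [lambda x, a=a: a * x for a in (1.0, 2.0, -1.0)]
```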

Compact deep neural network models of visual cortex

B. Cowley, P. Stan, J. Pillow*, M. Smith*

tiny.cc/dmxhvz

Task-driven DNN models predict neural responses well but have millions of parameters, which makes them next to impossible to explain. Do they need to be so large?

The LC (lobula columnar) neurons read out from multiple optic lobe neuron types, and downstream neuron types tend to read out from multiple LCs.

(from the newly released FlyWire connectome)

→ Almost every neural channel encoded multiple visual features.

→ Multiple neural channels drove the same behavior.

In the end, our model suggests that the optic glomeruli form a distributed population code.

The model *never* had access to neural activity. Even so, the model’s predicted activity matched well with real recorded responses!

(top plot: real LC11 neuron responses)

(bottom plot: model LC11 responses)
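The section does not state how the match was scored. The sketch below assumes a Pearson correlation between the recorded and model traces, sampled at the same time points. Because the model never saw neural activity, its units are arbitrary, and correlation ignores offsets and positive rescaling. The function name is hypothetical.

```python
import math

def pearson_r(a, b):
    # Pearson correlation: unchanged by adding an offset or by positive scaling,
    # so the model's arbitrary units do not affect the score.
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)
```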

We then devised "knockout training", silencing model units during training similar to how we silenced the real neurons.
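A deliberately tiny, hypothetical setup shows why knockout training helps. Two redundant hidden units carry the same signal, and the "behavior" is a linear readout of them. Trained on intact data alone, gradient descent cannot tell the units apart and splits the credit between them. Adding trials in which each unit is silenced (mask entry 0), mirroring the silenced flies, pins down each unit's true contribution. The linear readout, the data, and all names are assumptions, not the paper's model.

```python
import random

def predict(v, h, mask):
    # Linear readout of hidden units h; mask[i] == 0 silences unit i (a knockout).
    return sum(vi * hi * mi for vi, hi, mi in zip(v, h, mask))

def train_readout(data, n_units, lr=0.05, epochs=200, seed=0):
    # SGD on squared error. Each sample carries the knockout mask of the condition
    # in which its behavior was recorded, so silenced units get no gradient.
    rng = random.Random(seed)
    data = list(data)
    v = [0.0] * n_units
    for _ in range(epochs):
        rng.shuffle(data)
        for h, mask, y in data:
            err = predict(v, h, mask) - y
            v = [vi - lr * err * hi * mi for vi, hi, mi in zip(v, h, mask)]
    return v

def make_data(true_v, masks, stimuli):
    # Two redundant hidden units that both copy the stimulus value.
    return [((s, s), m, predict(true_v, (s, s), m)) for s in stimuli for m in masks]

stimuli = [-1.0, -0.5, 0.5, 1.0]
true_v = (2.0, 0.0)  # hypothetical ground truth: only unit 0 drives behavior
intact_only = make_data(true_v, [(1, 1)], stimuli)
with_knockouts = make_data(true_v, [(1, 1), (0, 1), (1, 0)], stimuli)
```

The readout trained on intact data converges to about (1, 1). Knockout training recovers the ground truth (2, 0).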

For the model’s input, we reconstructed the male’s visual scene as he chases the female.

(sorry, no wings—they were beyond my animation skills!)
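One ingredient of such a reconstruction is where the female appears on the male's retina and how large she looks. The sketch below makes several simplifying assumptions: a 2D arena, a heading angle in radians, and a female treated as a segment of fixed length facing the male. Her azimuth is her bearing relative to his heading. Her angular size is 2·atan(L / 2d) for length L at distance d. The function name is hypothetical.

```python
import math

def female_on_retina(male_xy, male_heading, female_xy, female_length):
    # Returns (azimuth, angular_size) in degrees of the female as seen by the male.
    # Positive azimuth = counterclockwise (to the male's left) of his heading.
    dx = female_xy[0] - male_xy[0]
    dy = female_xy[1] - male_xy[1]
    distance = math.hypot(dx, dy)
    # Bearing relative to the male's heading, wrapped to [-180, 180).
    azimuth = math.degrees(math.atan2(dy, dx) - male_heading)
    azimuth = (azimuth + 180.0) % 360.0 - 180.0
    # Angle subtended by a segment of length L centered at distance d: 2 * atan(L / (2d)).
    angular_size = math.degrees(2.0 * math.atan(female_length / (2.0 * distance)))
    return azimuth, angular_size
```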

→ 2x the silencing data, now including the LC31 neuron type

→ more LC neural recordings

→ the FlyWire connectome

→ knockout training simulations

Enjoy!

tinyurl.com/5n7t6tpv
