UChicago/Argonne/Globus - UofIllinois alum. materials, chemistry, physics. Opinions are my own.🤖🔬

1. Search literature (currently stubbed)

2. Enumerate papers, extract contacts

3. Send email w/ data drop location

4. Parse data

Does anyone want to help productionize this?
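For anyone curious what the four steps could look like in code, here is a minimal sketch, not the actual project. Everything in it is an assumption for illustration: the function names, the `Paper` class, the canned example papers, and the `example.org` addresses are hypothetical. Step 1 stays stubbed, and step 3 only drafts messages rather than sending them.

```python
import csv
import io
import re
from dataclasses import dataclass, field

# Simple email pattern; good enough for a sketch, not a full RFC 5322 parser.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


@dataclass
class Paper:  # hypothetical container, not from the original project
    title: str
    text: str
    contacts: list = field(default_factory=list)


def search_literature(query):
    """Step 1 (stubbed): return canned papers instead of querying a real index."""
    return [
        Paper("Example paper A", "Corresponding author: a.author@example.org"),
        Paper("Example paper B", "Contact b.author@example.org or c.author@example.org"),
    ]


def extract_contacts(papers):
    """Step 2: enumerate papers and pull unique email addresses from their text."""
    for paper in papers:
        paper.contacts = sorted(set(EMAIL_RE.findall(paper.text)))
    return papers


def draft_emails(papers, drop_url):
    """Step 3: build one draft per contact with the data drop location (no sending)."""
    return [
        {
            "to": address,
            "subject": f"Data request: {paper.title}",
            "body": f"Please upload your data here: {drop_url}",
        }
        for paper in papers
        for address in paper.contacts
    ]


def parse_data(csv_text):
    """Step 4: parse dropped data, assumed here to be CSV, into a list of dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))
```

A real version would swap the stub for an actual literature search, send through a mail service, and handle whatever formats people actually drop.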

What did I learn?

1. Gemini 3 feels clearly ahead in terms of front-end design. Less AI slop, and more focus.

See the projects here and come join our community: llmhackathon.github.io

Access Details: github.com/facebookrese...

We'll share our findings soon, but from what I've heard already, prepare to be amazed.

Read the paper: arxiv.org/abs/2508.18489

⚛️ Chemistry/Materials (MLIPs in Garden, gaps w/ Globus Compute)

🧬 Bioinformatics (phylogenetics across ALCF+NERSC)

📂 Filesystem monitoring (Icicle + Octopus)

Paper: arxiv.org/abs/2508.18489

+ Move data effortlessly across systems

+ Launch simulations on Exascale HPC systems

+ Run AI models via Garden & Galaxy

All via user or agent intent/language to make the next breakthroughs in energy, materials, and chemistry.

Models are made available through TorchSim, significantly boosting performance.

🔗 github.com/Radical-AI/t...

On cloud: models are available through credits from @Modal (thanks @bernhardsson) so researchers can try MLIPs without spinning up infra.

SevenNet and more.

We’ve created initial benchmarks for task performance, cloud cost, and HPC compute cost for each model.

See the MLIP Garden here: thegardens.ai#/use-cases/m...

Today, we release the MLIP Garden v0.1.

What you can do now:

- Experiment ~instantly

- Scale deployments on experimental NSF and Dept of Energy systems

This is your opportunity to rally a team to help solve big problems!

Register here for the link: llmhackathon.github.io

🤗 Join the preeminent community for LLM and multimodal model applications in materials science and chemistry.

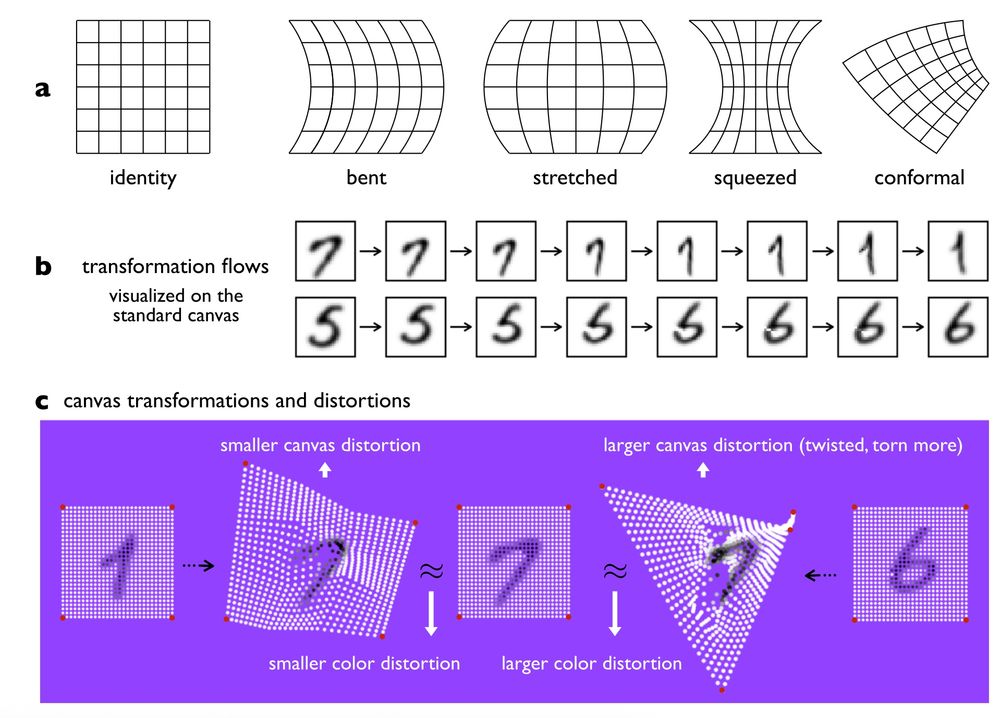

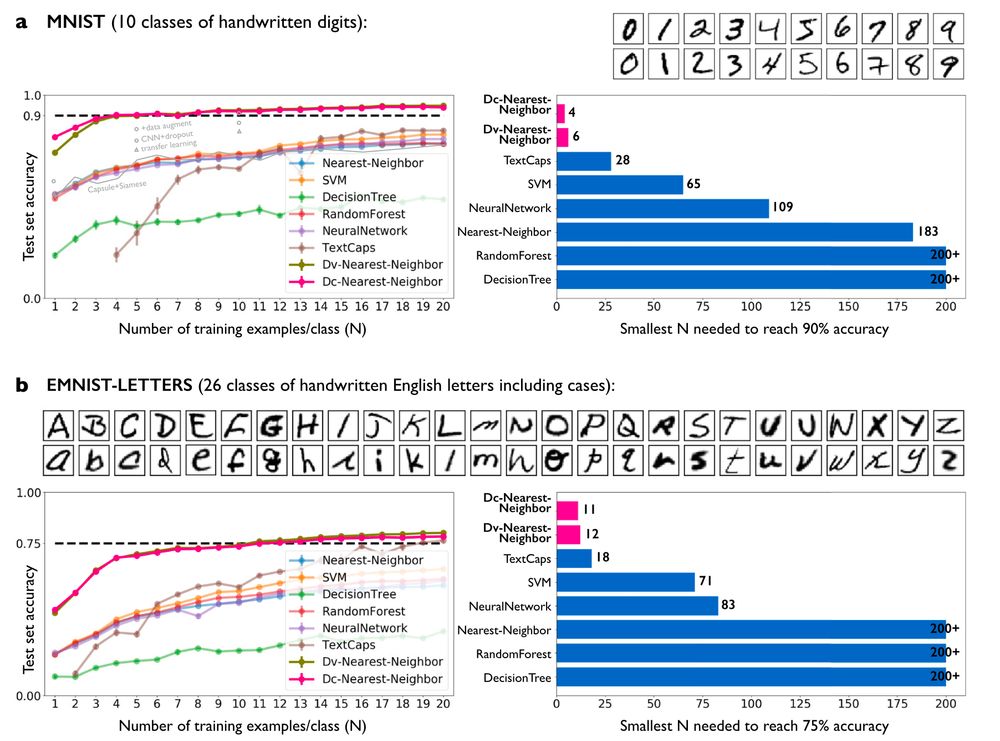

Using this approach, they achieve near-human performance on character/doodle recognition without transfer learning and with one or few examples.

Last year, 34 teams submitted inspiring and open examples. This year, we are expecting amazing applications with higher-powered models and agentic frameworks. Your imagination is the only limit.

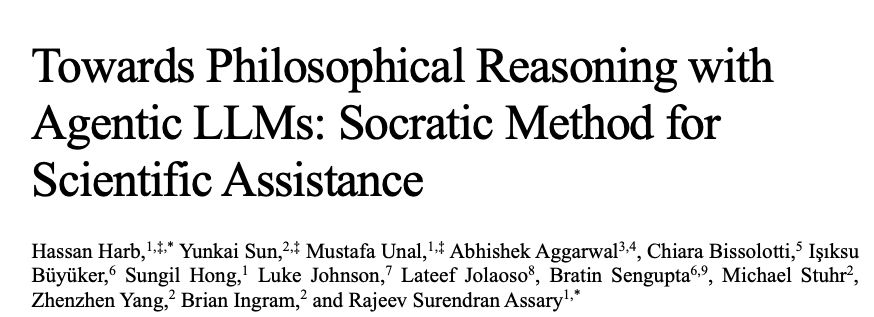

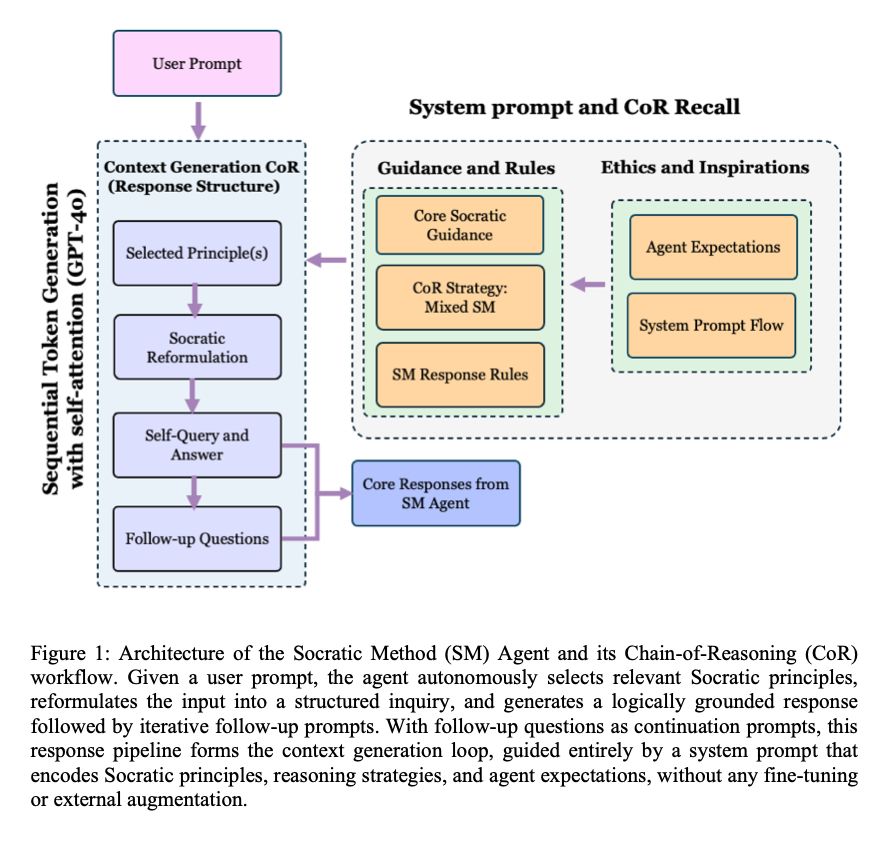

Wonderful work by the team at Argonne, UIC, and NU including Hassan Harb, Rajeev Assary, Brian Ingram and others.

Paper link: doi.org/10.26434/che...

Prompt: github.com/HassanHarb92...

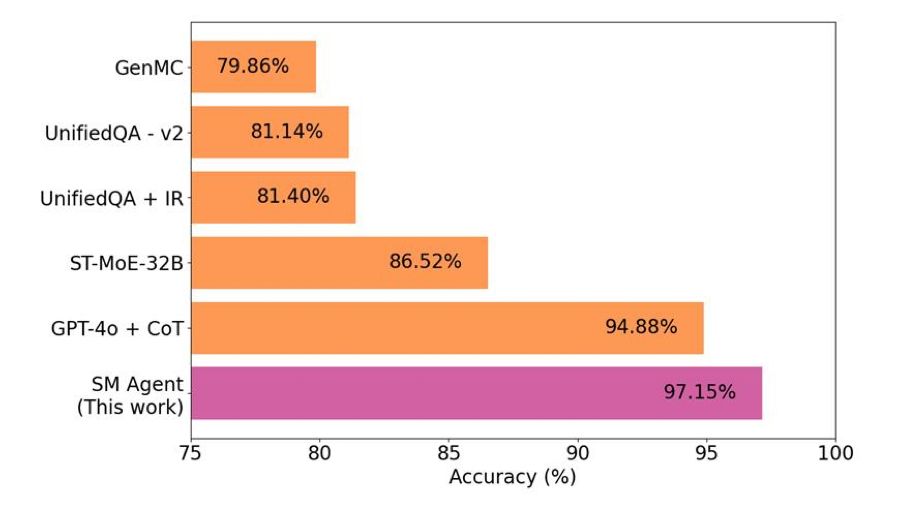

➡️ The prompted LLM saw improved performance, achieving SOTA (97.15%) on the ARC Challenge without fine-tuning or external tool usage.

➡️ The approach showed consistent gains in reasoning depth, clarity, and domain-specific insight across chemistry and materials science.

Continued below w links:

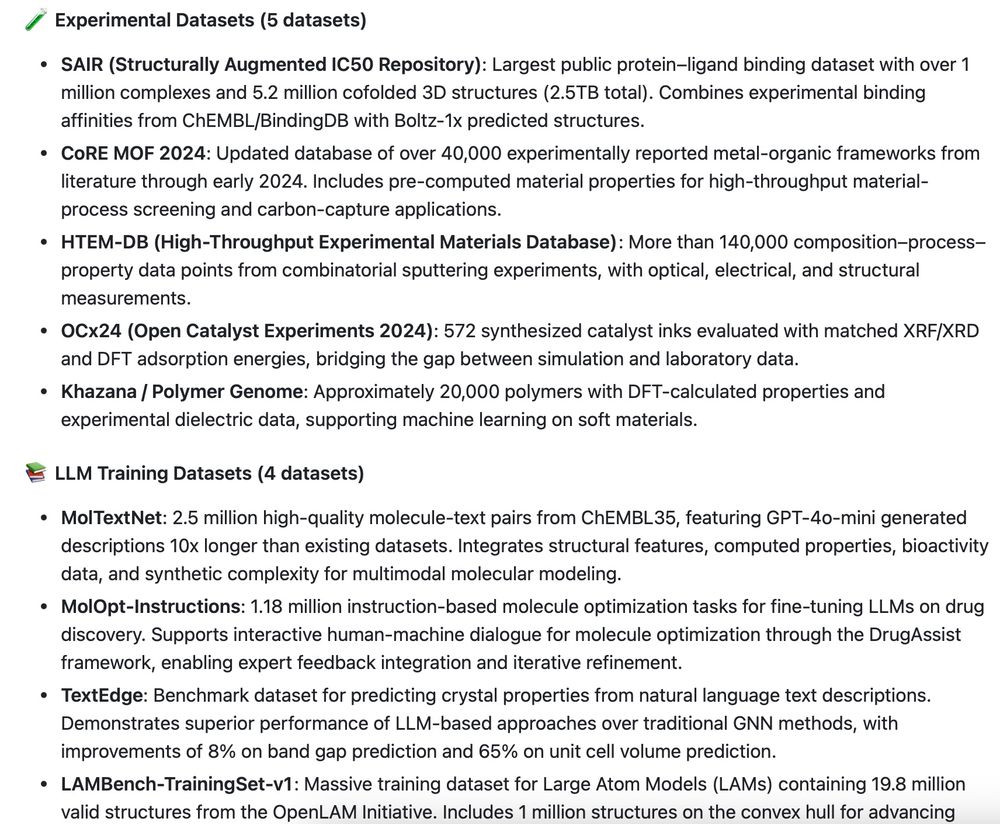

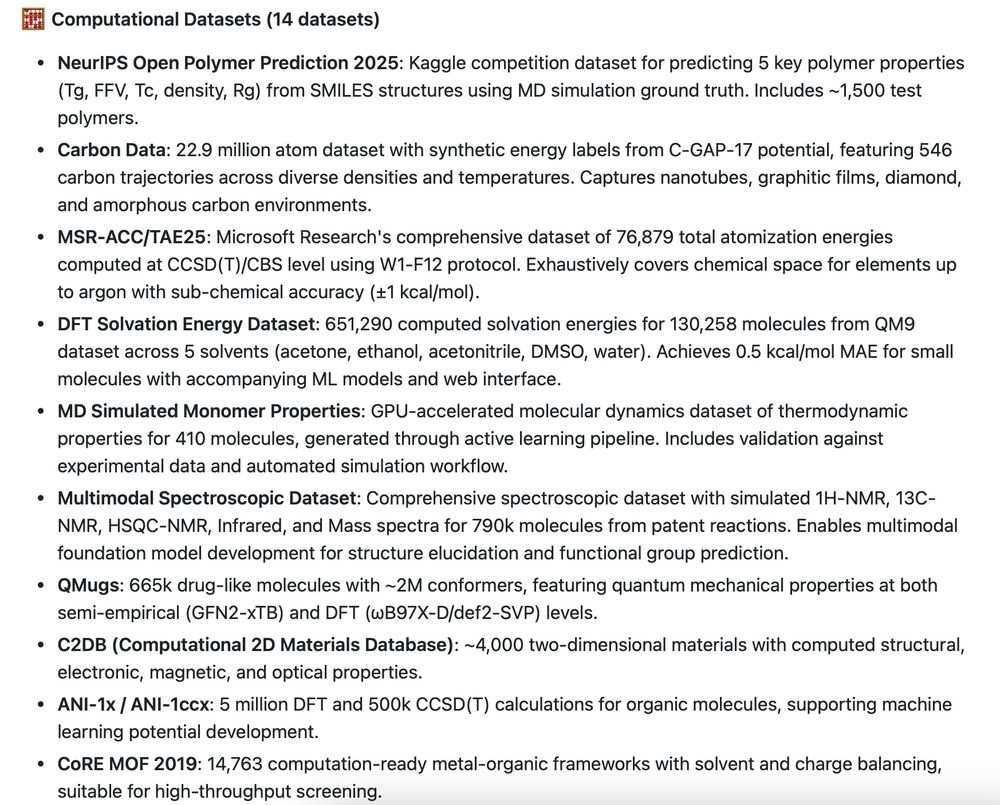

June updates: 26 high-quality datasets spanning polymer science, drug discovery, spectroscopy, MOF databases, + foundation model training datasets.

🔗: github.com/blaiszik/awe...
