Especially interested in model representations.

We prove that a small KL divergence between models is not enough to guarantee similar representations. Here is an example of how to construct two models with a small KL divergence whose representations are nonetheless far from being linear transformations of each other.

Very happy that so many people are interested in our work :D

If you haven’t had a chance to look yet: this work is relevant if you want to compare representations in language models, and you can read it here: arxiv.org/abs/2502.10201

Main points:

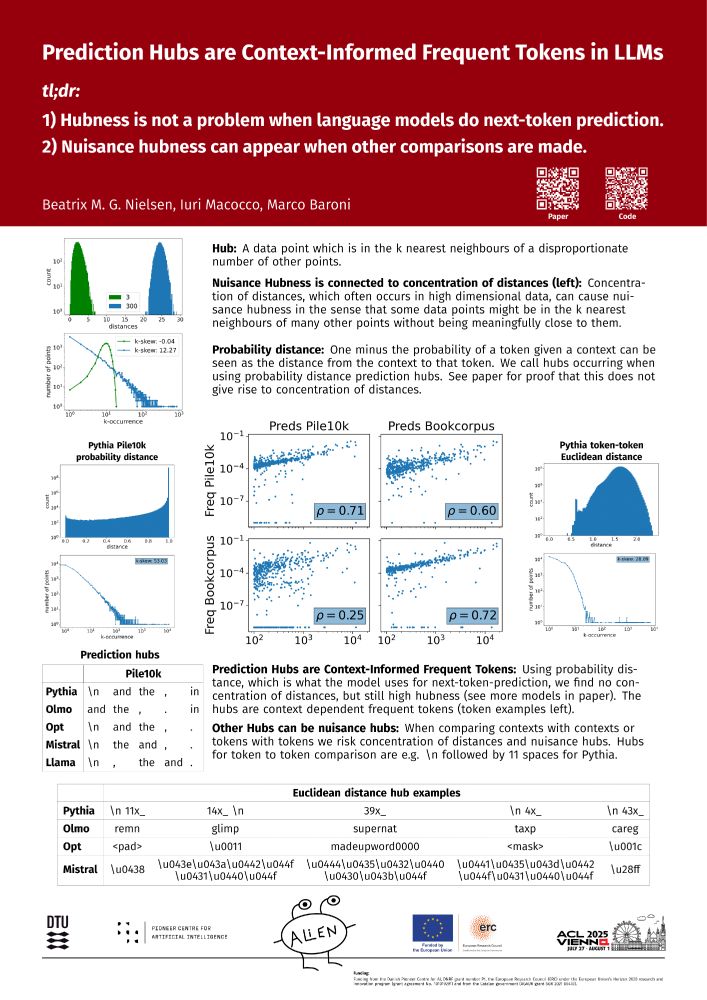

1. Hubness is not a problem when language models do next-token prediction.

2. Nuisance hubness can appear when other comparisons are made.
