Despite massive advances in scale, architecture, & training - from BERT to Llama 3.1 - transformer models organize linguistic information the same way.

The classical NLP pipeline isn't an artifact of early models; it persists in modern ones & seems fundamental to next-token prediction.

Read the paper for our full analysis, including steering vectors, intrinsic dimensionality, and training dynamics (in OLMo 2 and Pythia)!

We looked at 6 typologically diverse languages: English, Chinese, German, French, Russian and Turkish.

📊 Large modern models compress the entire pipeline into fewer layers. Information emerges earlier and consolidates faster.

Same hierarchical organization, but more efficient. Models seem to have gotten better at building useful representations quickly.

Early layers capture syntax (POS, dependencies) -> Middle layers handle semantics & entities (NER, SRL) -> Later layers encode discourse (coreference, relations)

This holds whether you're looking at BERT, Qwen2.5 or OLMo 2.

🔍 The answer: YES. Modern LMs consistently rediscover the classical NLP pipeline.

Despite rapid advances since BERT, certain aspects of how LMs process language remain remarkably consistent 💡

Paper: arxiv.org/abs/2506.02132

Code: github.com/ml5885/model...

Whole-word embeddings consistently outperform averaged subtoken representations - linguistic regularities are stored at the word level, not compositionally!

🎢 In some models (GPT-2, OLMo 2), just 1-2 dimensions capture 50-99% of the variance in the middle layers, and then the representations expand again! This bottleneck aligns with where grammar is most accessible & lexical info is most nonlinear.
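One way to check for this kind of bottleneck is to measure how much variance the top principal components explain at a given layer. A minimal sketch with NumPy, assuming `acts` is a (tokens x hidden_dim) activation matrix. The function name and the synthetic data are illustrative and not taken from the paper's code:

```python
import numpy as np

def top_k_variance_fraction(acts: np.ndarray, k: int = 2) -> float:
    """Fraction of total variance captured by the top-k principal components."""
    centered = acts - acts.mean(axis=0, keepdims=True)
    # Squared singular values of the centered matrix are proportional to the
    # variance along each principal direction (sorted largest first).
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variances = singular_values ** 2
    return float(variances[:k].sum() / variances.sum())

# Synthetic stand-in for one layer's activations (hypothetical data):
# a single dominant direction plus small isotropic noise.
rng = np.random.default_rng(0)
direction = rng.normal(size=(1, 64))
signal = (rng.normal(size=(500, 1)) * 10.0) @ direction
acts = signal + rng.normal(size=(500, 64))

print(top_k_variance_fraction(acts, k=1))
```

Running this per layer and plotting the result against depth would show a spike wherever a few dimensions dominate.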

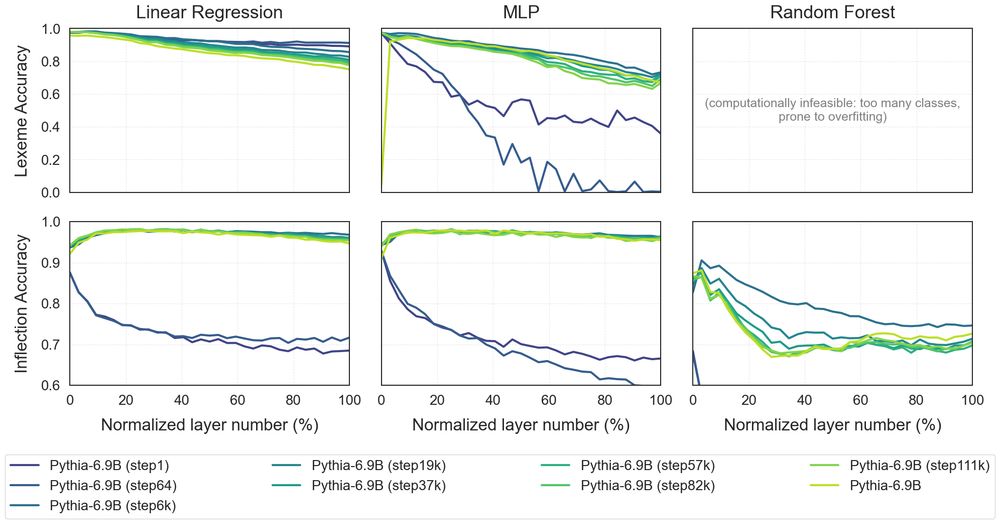

We find that models learn this linguistic organization in the first few thousand steps! But this encoding slowly degrades as training progresses. 📉

📈 We ran control tasks with random labels - inflection classifiers show high selectivity (real learning!) while lemma classifiers don't (memorization).
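A rough sketch of the idea, assuming the common control-task recipe: each word type gets one fixed random label, the same probe is trained on those labels, and selectivity is real-task accuracy minus control-task accuracy. The helper names and the accuracy numbers are made up for illustration, and the paper's exact setup may differ:

```python
import random

def control_labels(words, num_classes, seed=0):
    """Give every word type one fixed random label (same word -> same label)."""
    rng = random.Random(seed)
    type_to_label = {}
    labels = []
    for w in words:
        if w not in type_to_label:
            type_to_label[w] = rng.randrange(num_classes)
        labels.append(type_to_label[w])
    return labels

def selectivity(real_acc, control_acc):
    """How much better a probe does on the real task than on random labels."""
    return real_acc - control_acc

# Hypothetical token sequence: repeated words must share a control label.
words = ["run", "runs", "ran", "run", "walk", "runs"]
labels = control_labels(words, num_classes=3)

# Hypothetical accuracies, not results from the paper.
print(selectivity(real_acc=0.95, control_acc=0.40))
```

A probe that scores about as well on the random labels as on the real ones is mostly memorizing word identity, so its selectivity is near zero.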

- 📉 Lexical info concentrates in early layers & becomes increasingly nonlinear in deeper layers

- ✨ Inflection (grammar) stays linearly accessible throughout ALL layers

- Models memorize word identity but learn generalizable patterns for inflections!

We trained classifiers on hidden activations from 16 models (BERT -> Llama 3.1) to find out how they store word identity (lexemes) vs. grammar (inflections).
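In its simplest form, a probe like this is a linear classifier fit on frozen activations. A toy sketch with NumPy, using a least-squares classifier on synthetic data. The probe architecture, training setup, and data in the paper may differ, and every name here is illustrative:

```python
import numpy as np

def add_bias(acts):
    """Append a constant column so the probe can learn an intercept."""
    return np.hstack([acts, np.ones((acts.shape[0], 1))])

def fit_linear_probe(acts, labels, num_classes, l2=1e-3):
    """Ridge-regress one-hot labels on activations; returns the weight matrix."""
    X = add_bias(acts)
    Y = np.eye(num_classes)[labels]
    return np.linalg.solve(X.T @ X + l2 * np.eye(X.shape[1]), X.T @ Y)

def probe_accuracy(W, acts, labels):
    """Share of examples where the highest-scoring class matches the label."""
    preds = np.argmax(add_bias(acts) @ W, axis=1)
    return float((preds == labels).mean())

# Synthetic stand-in for hidden activations (hypothetical data):
# each class is a Gaussian blob around its own mean vector.
rng = np.random.default_rng(0)
num_classes, dim = 3, 16
class_means = rng.normal(size=(num_classes, dim)) * 3.0
labels = rng.integers(0, num_classes, size=600)
acts = class_means[labels] + rng.normal(size=(600, dim))

W = fit_linear_probe(acts[:400], labels[:400], num_classes)
test_acc = probe_accuracy(W, acts[400:], labels[400:])
print(test_acc)
```

Repeating this at every layer, once with lexeme labels and once with inflection labels, gives the kind of layer-by-layer accuracy curve the thread describes.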
