Link: arxiv.org/abs/2502.16763

We study the effect of using only fixed positional encodings in the Transformer architecture for computational tasks. These positional encodings remain the same across layers.

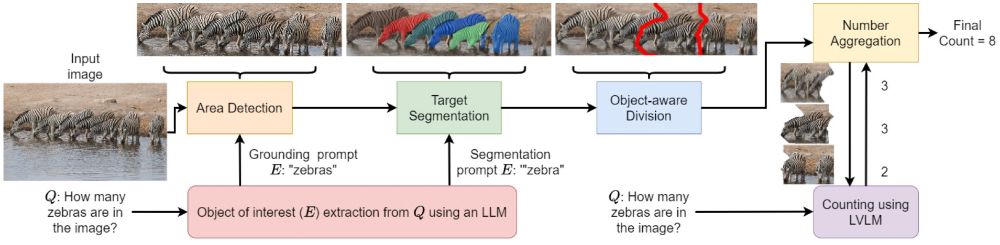

By splitting the image into sub-images using a novel object-aware division technique, we can enhance the performance of LVLMs such as GPT-4o and Gemini Pro 1.5.

Link: arxiv.org/abs/2412.00686
