Avik Dey

@avikdey.bsky.social

Mostly Data, ML, OSS & Society.

Stop chasing Approximately Generated Illusions; focus on Specialized Small LMs FTW.

If you can’t explain it simply, you don’t understand it well enough.

Shadow of https://linkedin.com/in/avik-dey, except I have a beard now.

November 5, 2025 at 4:06 PM

Every author writing like this should be required to rewrite their abstract in plain English and read it aloud to an audience of their peers before they can publish it.

Summary: Conjectural with nice diagrams but no quantitative measures and ignores prior literature.

arxiv.org/pdf/2510.26745

November 3, 2025 at 4:14 PM

Karpathy’s tweet is a live demo of the learning loop he promotes. Consciously or not, he is channeling:

- Kolb: Experiential learning theory

- Feynman: Explain in your own words

- Dweck: Growth mindset scale

The medium is the message.

November 1, 2025 at 4:16 PM

She’s making the classic layman’s mistake of thinking DeepMind is synonymous with AI. If she had actually read even the first paragraph of their paper, she might have clued in that it’s a great example of purely statistical machine learning, but that’s probably asking too much.

arxiv.org/pdf/2506.10772

October 30, 2025 at 4:02 PM

Today for some reason, I felt the urge to share this tweet posted back in 2018:

October 20, 2025 at 6:22 PM

Read this if you work with LLMs. For those of us who have been hands-on, both under the hood and in front of the shiny bits, it’s been obvious from the get-go.

Folks still refusing to acknowledge the obvious are invested in it, directly or indirectly.

For the rest of us it’s just another tool.

October 18, 2025 at 11:20 PM

If even Karpathy can’t get AI coding to work for him, are you willing to bet on it working for you? Yours are IID, you say? You will soon find out dimensionality means yours are OOD too.

October 13, 2025 at 9:48 PM

Nice paper. Observations:

- Verifier-assisted code snippet optimization

- The “before” solutions are sub-optimal, as are the “after” solutions

- Some of the paper’s commentary is contradictory

- If the framework included a chaos monkey to simulate the real world, would these results hold?

arxiv.org/abs/2510.061...

October 10, 2025 at 4:47 PM

All those words just to say - ‘If you view them thru an anthropomorphic lens, LLMs are just shallow replicas of human intelligence.’

Buddy - the rest of us knew.

(Not going to link, but it’s on the bird site)

October 2, 2025 at 4:08 PM

Used to be a Sunday breakfast staple with “luchi”, in my younger days.

August 29, 2025 at 8:18 PM

August 29, 2025 at 7:01 PM

Was always the plan. A lawsuit needs to be filed to save copies of all of those server logs indefinitely.

bsky.app/profile/tech...

August 26, 2025 at 5:39 PM

Yes, they are, or they wouldn’t be redistricting while it’s still 5 years to the next census.

Not a great idea to listen to ‘"center-left, corporate and GOP donor-funded nonprofit", which advocates for neoliberal policies and is staunchly opposed to Medicare for All.’

en.m.wikipedia.org/wiki/Third_W...

August 23, 2025 at 9:31 PM

Good to see research catching up with what the math behind these models has always implied: scaling laws may favor LLMs in average generality, but the data points strongly toward SSLMs for agentic use cases.

“Small Language Models are the Future of Agentic AI” from NVIDIA:

arxiv.org/pdf/2506.021...

August 18, 2025 at 4:12 PM

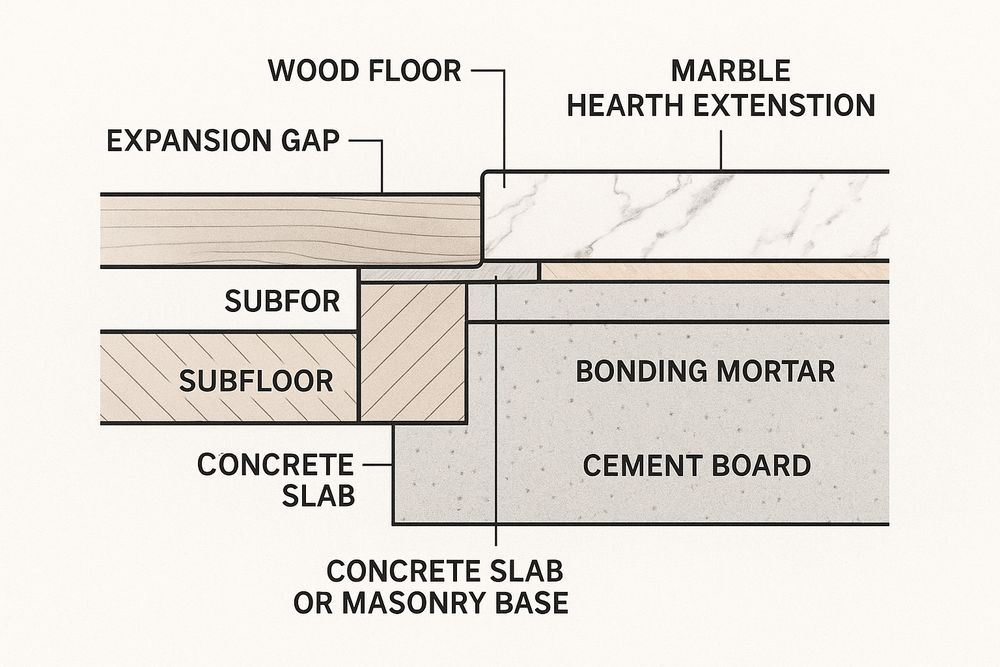

Tiling pros on Bsky, does this look like a good plan? Need to start the DIY project ASAP!

GPT-5: “Here’s the cross-section detail for blending a marble hearth into a wood floor without a transition strip.”

August 14, 2025 at 10:25 PM

GPT-5 the whale amongst LLMs or not.

August 9, 2025 at 12:32 AM

August 8, 2025 at 4:38 PM

This was posted on the bird site in a popular thread. Since I won’t link, I also removed the poster info (not fair to quote without linking).

I have seen this point made quite a few times since the IMO drama. What folks are missing is that when an LLM claims it knows, it doesn’t actually know that it knows.

July 27, 2025 at 1:48 AM

OpenAI hypes and every damn time tech twitter scrambles.

All you need to know is:

“We don't plan to release anything with this level of math capability for several months.”

GPT-5 is going to be a disappointment - they will then redirect hype to THE reasoning model that’s coming in “… few months”.

July 19, 2025 at 10:37 PM

Strange that the Microsoft AI team doesn’t seem to understand that NEJM cases are often diverse, difficult and obscure, and that treatment is typically specialist-led, which is NOT what they tested.

They handicapped the humans in other ways as well to get their sensational headline.

arxiv.org/pdf/2506.22405

June 30, 2025 at 10:00 PM

Friday AI paper read: arxiv.org/pdf/2506.11928

Still pattern emulation, not reasoning.

LLM approach to AGI is deeply flawed, driven by sunk cost fallacy and peer envy.

CEOs, looking to replace engineers with AI, don’t realize that this charade is going to make engineers more valuable, not less.

June 21, 2025 at 4:50 PM

Lot of words to say:

LLMs excel at “reasoning” so long as they have memorized the exact answer.

June 14, 2025 at 5:37 PM

Glad he followed thru 🤣

June 14, 2025 at 2:58 AM
