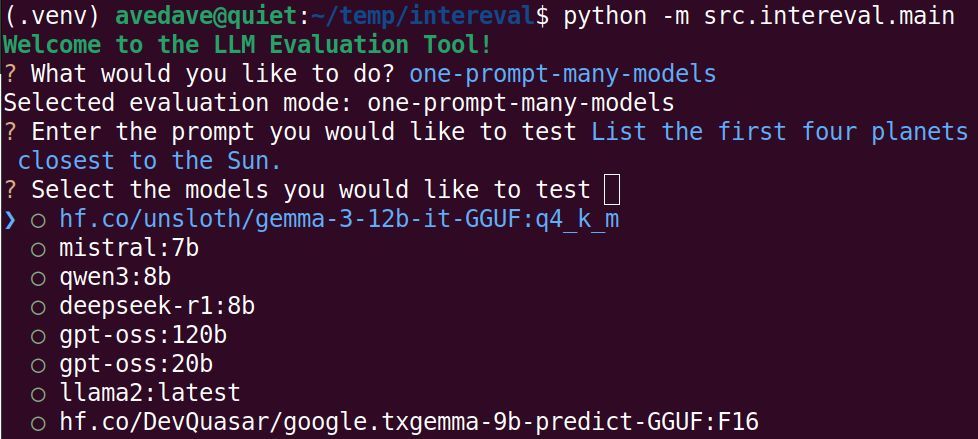

- Interactively test a prompt against multiple models.

- Test multiple prompts against the same model.

github.com/avedave/inte...

Results (Model, Total Duration, Response Processing)

Gemma:

- 1B (0m:4s, 212 t/s)

- 4B (0m:8s, 108 t/s)

- 12B (0m:18s, 44 t/s)

- 27B (0m:42s, 22 t/s)

DeepSeek R1 70B (7m:31s, 3.04 t/s)
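
Figures like these can be derived from raw timing data. The post does not say how they were measured, so this is only a minimal sketch: it assumes the inputs are a generated-token count and a generation time in nanoseconds, as Ollama reports in its `eval_count` and `eval_duration` response fields. The helper names `tokens_per_second` and `format_duration` are hypothetical.

```python
def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Generation throughput from a token count and a duration in nanoseconds."""
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration_ns must be positive")
    return eval_count / (eval_duration_ns / 1e9)


def format_duration(total_seconds: float) -> str:
    """Format whole seconds in the 'Xm:Ys' style used in the results list."""
    minutes, seconds = divmod(int(total_seconds), 60)
    return f"{minutes}m:{seconds}s"
```

With this formatting, a run of 451 seconds is written as 7m:31s, the same style as the DeepSeek R1 entry.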

Today marks a special milestone on my journey towards a healthier life!

If you are interested in learning how I did it, leave a comment. I might turn my notes into an actual PDF and share it with you.

Learn more: bit.ly/3E14JP1

What used to be a full-weekend project can now be generated within seconds!

My prompt: "p5js (no HTML) colorful implementation of the game of life"

Worked on the first attempt :)
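
For reference, the rule behind the prompt is small. This is a minimal sketch in Python rather than p5.js, and it is not the code the model generated. It assumes an unbounded grid stored as a set of live `(x, y)` cells and leaves out the drawing and colour, and the name `life_step` is hypothetical.

```python
from collections import Counter


def life_step(live: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """Advance Conway's Game of Life by one generation on an unbounded grid."""
    # Count, for every cell adjacent to a live cell, how many live neighbours it has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A cell is alive next turn with exactly 3 neighbours,
    # or with 2 neighbours if it is already alive.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }
```

A horizontal row of three live cells (a "blinker") becomes a vertical row after one call and returns to its original shape after two.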

1. Download Ollama: ollama.com

2. Install Ollama

3. Pull Gemma 3 1B from the repository: ollama pull gemma3:1b

4. Run the model locally: ollama run gemma3:1b
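
After the steps above, the model can also be queried from a script instead of the interactive prompt. This is a minimal sketch, assuming the Ollama server is running locally on its default address, `http://localhost:11434`, and using the non-streaming `/api/generate` endpoint. The helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; adjust if the server runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text (the server must be running)."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("gemma3:1b", "Say hello in one sentence.")` should return the model's reply as a string, provided the model has already been pulled.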

Details:

- 1B, 4B, 12B, 27B sizes

- support for 140+ languages

- multimodal inputs

- 128k context window

- currently the best model that can run on a single GPU or TPU
