Previously @mackelab.bsky.social - machine learning in (neuro)science.

We propose calibration checks to evaluate the models’ uncertainty estimates, to avoid making confidently wrong predictions.

Check out the paper for more 🙂
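
The posts here don't say what the calibration checks look like, so the following is only a generic illustration and not the paper's procedure. One common check is empirical coverage: if a model reports Gaussian predictive means and standard deviations, roughly 95% of held-out targets should fall inside the nominal 95% interval. The function name `empirical_coverage` and the Gaussian assumption are hypothetical choices made for this sketch.

```python
import numpy as np

def empirical_coverage(y_true, pred_mean, pred_std, z=1.96):
    """Fraction of targets inside the central interval mean +/- z * std.

    Assumes (hypothetically) Gaussian predictive distributions; z=1.96
    corresponds to a nominal 95% interval.
    """
    y_true = np.asarray(y_true, dtype=float)
    pred_mean = np.asarray(pred_mean, dtype=float)
    pred_std = np.asarray(pred_std, dtype=float)
    lower = pred_mean - z * pred_std
    upper = pred_mean + z * pred_std
    inside = (y_true >= lower) & (y_true <= upper)
    return float(np.mean(inside))

# Toy usage: three targets sit inside the interval, one far outside.
coverage = empirical_coverage(
    y_true=[0.0, 0.0, 0.0, 10.0],
    pred_mean=[0.0, 0.0, 0.0, 0.0],
    pred_std=[1.0, 1.0, 1.0, 1.0],
)
```

Coverage well below the nominal level suggests overconfident predictions; coverage well above it suggests the uncertainty estimates are too wide.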

1. jointly modeling conditional distributions that are commonly targeted in neuroscience (e.g., encoding 🐭➡️🧠 and decoding 🧠➡️🐭) and

2. accounting for low-dimensional dynamics underlying both neural activity and behavior. 🌀

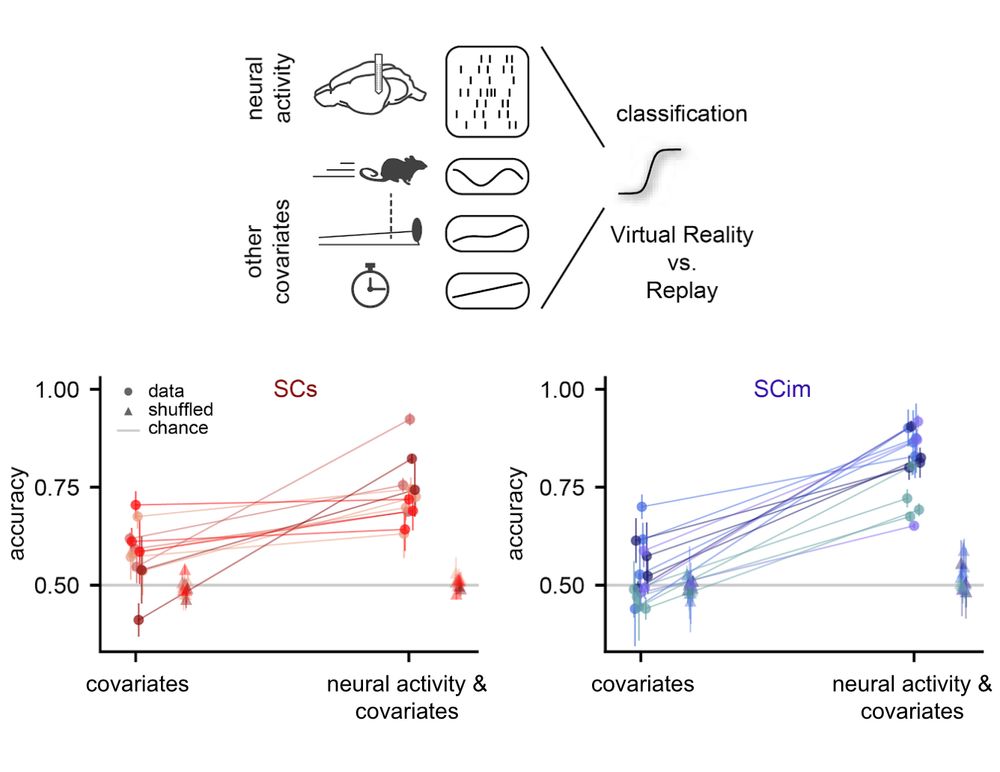

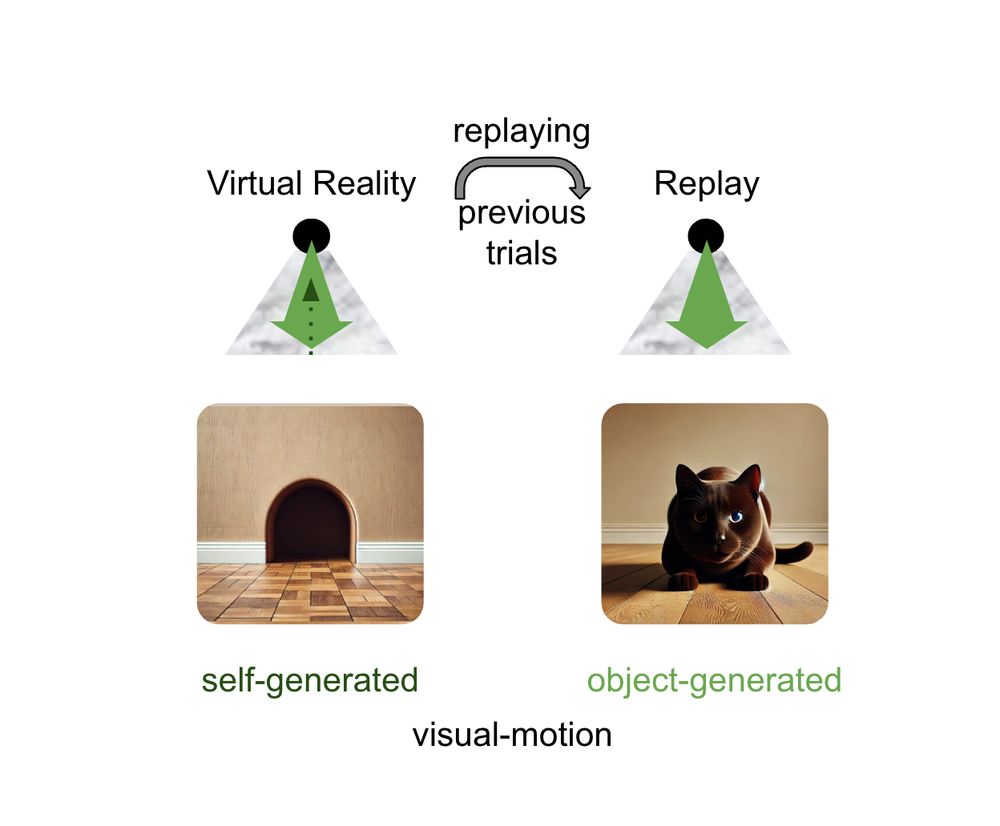

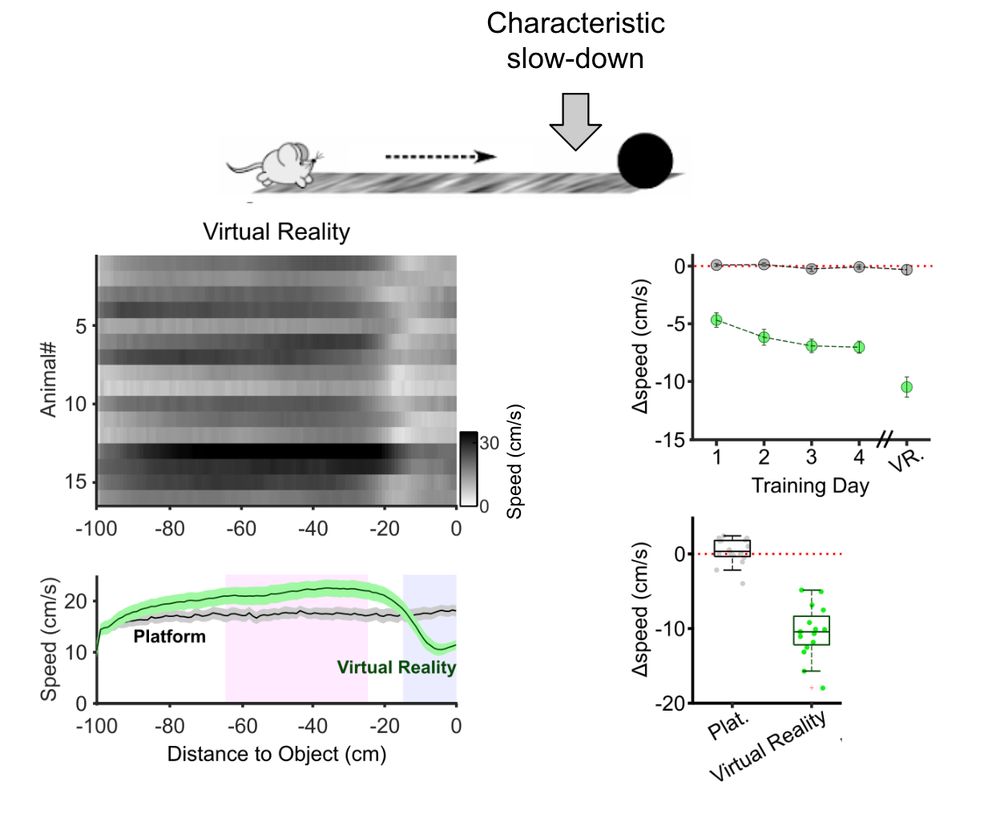

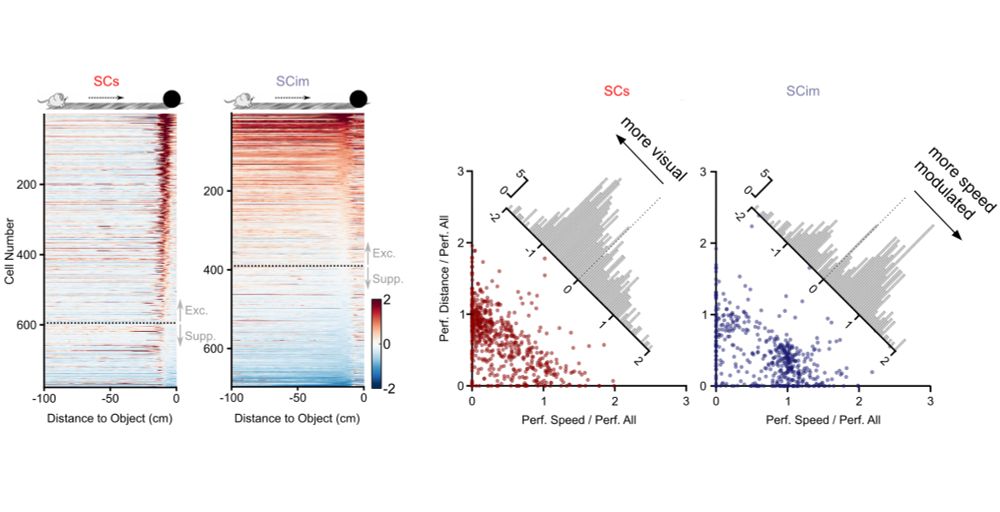

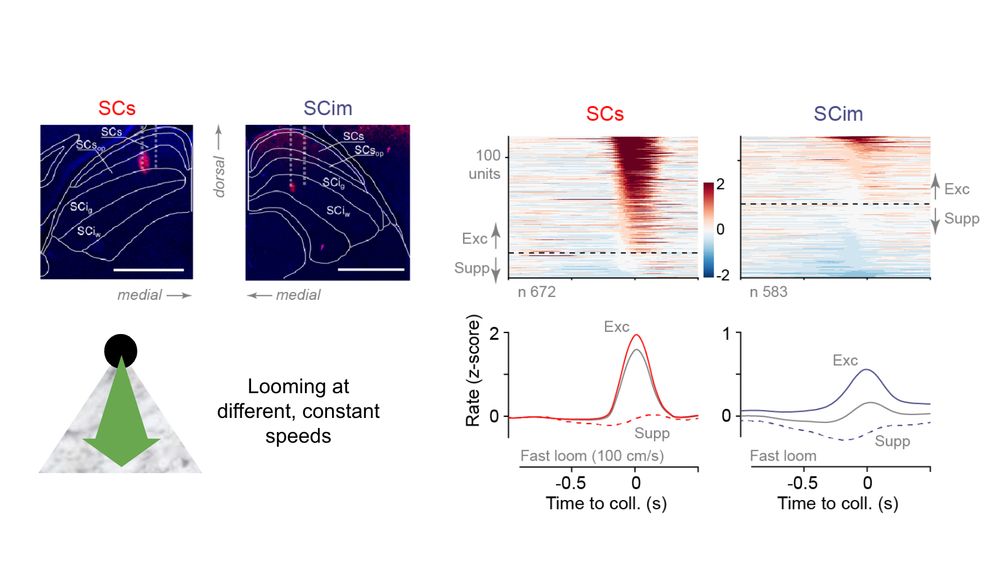

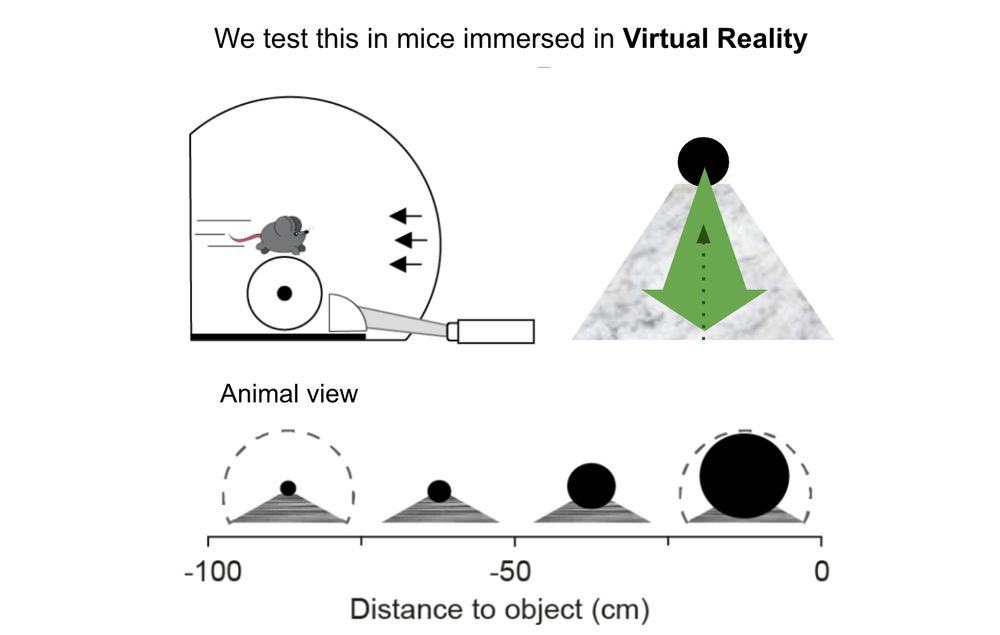

How do mice distinguish self-generated vs. object-generated looming stimuli? Our new study combines VR and neural recordings from superior colliculus (SC) 🧠🐭 to explore this question.

Check out our preprint doi.org/10.1101/2024... 🧵

LDNS successfully mimicked cortical data during attempted speech—a challenging task due to varying trial lengths.

Both the latents and their principal components (PCs) reflect the direction of the reaches used for conditioning.

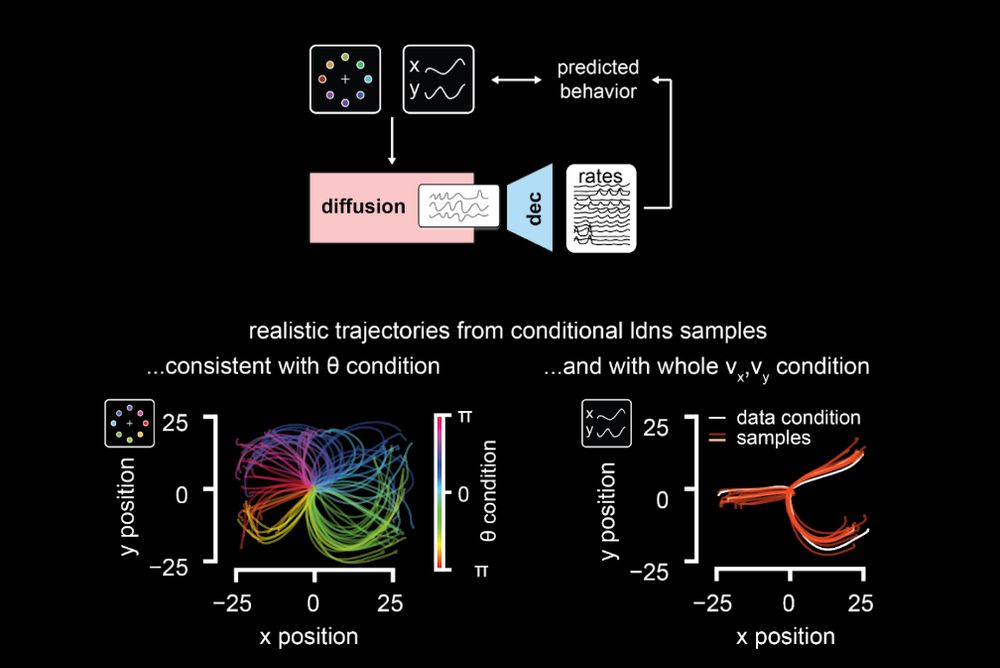

Diffusion models conditioned on either reach direction or velocity trajectories produce neural activity samples that are consistent with the queried behavior.

(this can be applied to any LVM trained with Poisson log-likelihood!)
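
For readers less familiar with the term, here is a minimal sketch of a Poisson log-likelihood over binned spike counts, the training objective mentioned above. The function name `poisson_log_likelihood` and the small `eps` stabilizer are assumptions for illustration, not taken from the LDNS code.

```python
import math
import numpy as np

def poisson_log_likelihood(spike_counts, rates, eps=1e-8):
    """Sum over bins of log Poisson(k | rate) = k*log(rate) - rate - log(k!).

    spike_counts: non-negative integer counts per neuron and time bin.
    rates: predicted expected counts per bin (same shape, positive).
    eps: small constant (an assumption here) to avoid log(0).
    """
    k = np.asarray(spike_counts, dtype=float)
    lam = np.asarray(rates, dtype=float) + eps
    # log(k!) computed via the log-gamma function: log(k!) = lgamma(k + 1)
    log_factorial = np.array(
        [math.lgamma(x + 1.0) for x in k.ravel()]
    ).reshape(k.shape)
    return float(np.sum(k * np.log(lam) - lam - log_factorial))

# Toy usage: rates that match the counts score higher than flat rates.
counts = np.array([5, 0, 3])
ll_good = poisson_log_likelihood(counts, [5.0, 0.1, 3.0])
ll_flat = poisson_log_likelihood(counts, [1.0, 1.0, 1.0])
```

A model trained with this objective minimizes the negative of this quantity, so predicted rates are pushed toward the observed counts.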

LDNS perfectly captured firing rates & underlying dynamics and can length-generalize—producing faithful samples of 16 times the original training length.

The AE first maps spikes to time-aligned latents, which allows training flexible (un)conditional diffusion models on smoothly varying latents, circumventing the issue of diffusion models acting on discrete values.
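
To see why continuous latents matter, consider the standard DDPM-style forward process, which adds Gaussian noise to real-valued inputs. This is well defined on smooth latents but not on integer spike counts. The sketch below is generic and assumes a linear noise schedule; the schedule, shapes, and the name `forward_noise` are illustrative and not taken from LDNS.

```python
import numpy as np

def forward_noise(latents, t, betas, rng):
    """Sample x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * noise.

    latents: array of continuous latents, e.g. (latent_dim, time).
    t: integer diffusion step index into the schedule.
    betas: per-step noise variances (assumed linear schedule below).
    """
    alphas_bar = np.cumprod(1.0 - betas)
    a = alphas_bar[t]
    noise = rng.standard_normal(latents.shape)
    noisy = np.sqrt(a) * latents + np.sqrt(1.0 - a) * noise
    return noisy, noise

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 0.02, 1000)       # assumed schedule
time = np.linspace(0.0, 2.0 * np.pi, 100)
# Two smooth, time-aligned toy latents standing in for AE outputs.
latents = np.stack([np.sin(time), np.cos(time)])
noisy, noise = forward_noise(latents, t=999, betas=betas, rng=rng)
```

A denoising network would then be trained to predict `noise` from `noisy` and `t`; sampled latents are passed through the AE decoder to obtain firing rates.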

LDNS combines 1) a regularized S4-based autoencoder (AE) with 2) diffusion in latent space, and can model diverse neural spiking data.

Here we consider 3 very different tasks:

Latent Diffusion for Neural Spiking data (LDNS), a latent variable model (LVM) which addresses 3 goals simultaneously:
