Super smooth process, way easier than I expected.

Recording a full step-by-step guide next. Stay tuned!

We built an AI Agent that:

- Searches arXiv and generates clean research reports

- Saves findings with persistent memory

- Recalls and builds on past sessions

Built with @nebiustf, @memorilab, @tavilyai, and @streamlit

Here's the Demo:

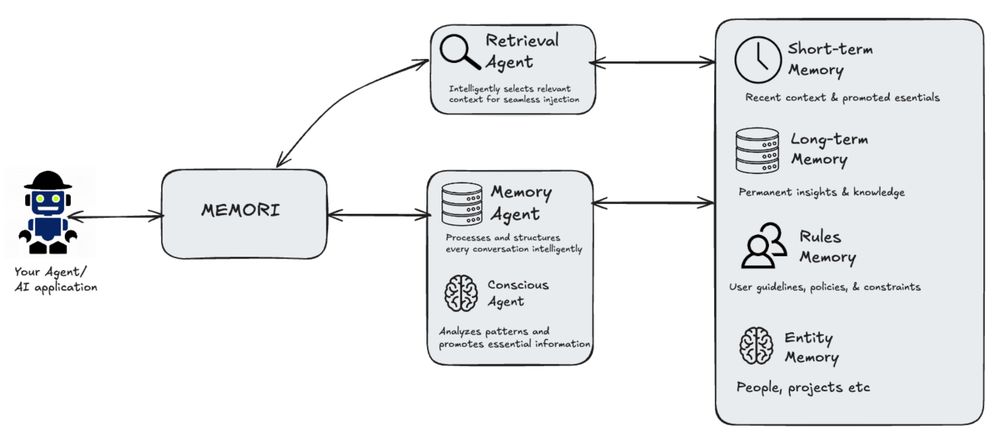

@memorilab's Memori uses traditional SQL to power agent memory:

- Short-term convos in temp tables

- Long-term facts promoted to permanent storage

- Rules, preferences, entities stored as structured data

Proven, efficient, and scalable👇
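Here's a rough sketch of that idea in Python with the standard sqlite3 module. This is not Memori's actual schema or API: the table names, the importance score, and the 0.7 threshold are all assumptions made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Short-term memory: a TEMP table that only lives for this connection.
conn.execute(
    "CREATE TEMP TABLE short_term ("
    "id INTEGER PRIMARY KEY, role TEXT, content TEXT, importance REAL)"
)
# Long-term memory: a regular table that would persist in a real database file.
conn.execute(
    "CREATE TABLE long_term (id INTEGER PRIMARY KEY, content TEXT UNIQUE)"
)


def remember(role: str, content: str, importance: float) -> None:
    """Store one conversation turn in short-term memory."""
    conn.execute(
        "INSERT INTO short_term (role, content, importance) VALUES (?, ?, ?)",
        (role, content, importance),
    )


def promote(threshold: float = 0.7) -> None:
    """Copy short-term rows at or above the threshold into long-term storage."""
    conn.execute(
        "INSERT OR IGNORE INTO long_term (content) "
        "SELECT content FROM short_term WHERE importance >= ?",
        (threshold,),
    )


remember("user", "hi there", 0.1)
remember("user", "I prefer Python examples", 0.9)
promote()

facts = [row[0] for row in conn.execute("SELECT content FROM long_term")]
print(facts)
```

Only the high-importance turn ends up in the permanent table; the small talk stays in the temp table and disappears with the connection.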

But writing code is one thing; shipping reliable code is a whole different game 👀

So I recorded a full breakdown of the exact workflow I use to keep AI-generated code production-ready.

Full video here👇

Crazy to think how far we have come!

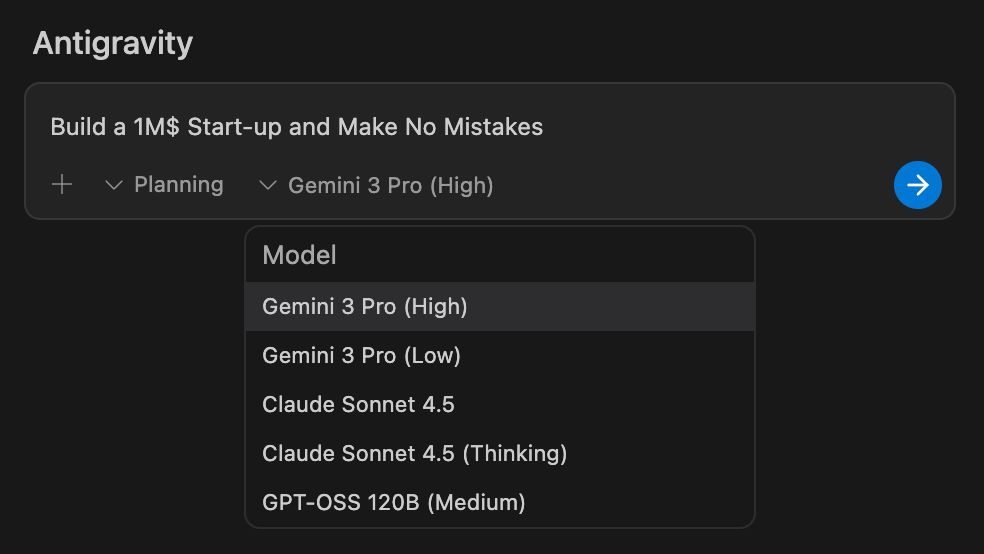

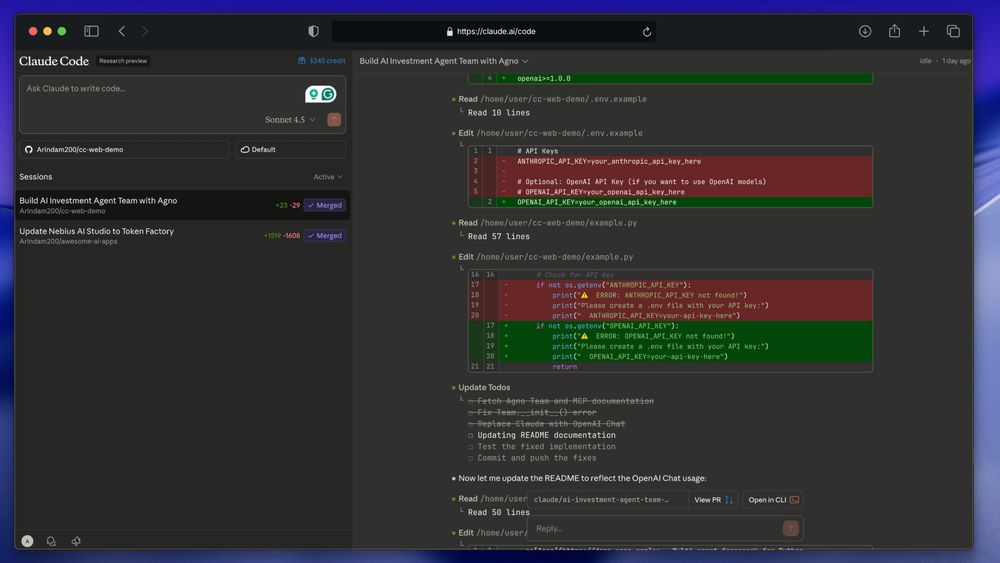

I pushed it on two tasks:

• Fixing API endpoints across a repo

• Building an AI Agent team with @AgnoAgi

The results were… interesting. Some things worked great, some didn’t.

Watch it here👇

youtube.com/watch?v=nIt...

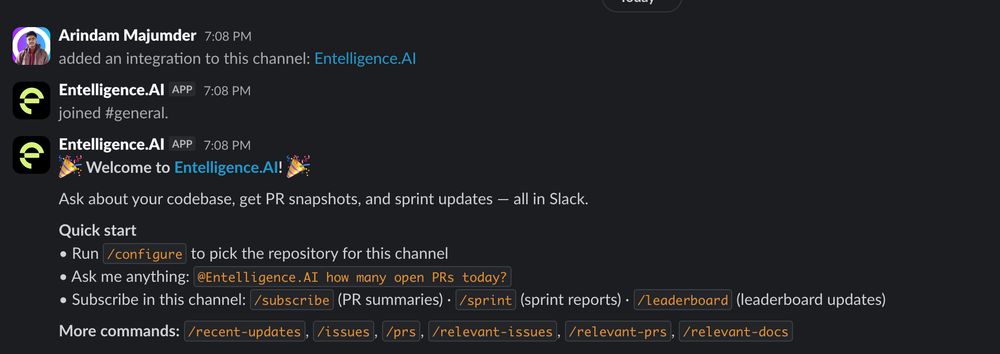

I can instantly check:

- PR summaries

- Sprint reports

- PRs, issues, docs, and recent updates

And you can even chat with it!

All inside Slack. No tab-switching, no context loss.

With Tool Filtering in the Strands SDK, you can:

- Pick only the tools your agent really needs

- Skip unused ones to keep it fast

- Fine-tune behavior right from the SDK

Example: load just read_documentation, search_documentation, or recommend 👇
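To show the idea without depending on the SDK, here's a plain-Python sketch of filtering a tool registry down to an allow-list. It does not use the Strands SDK's real filtering API: `filter_tools`, the stub tool bodies, and the extra `delete_cache` tool are hypothetical and only there for illustration.

```python
from typing import Callable


# Stub tools standing in for what an MCP server might expose.
def read_documentation(url: str) -> str:
    return f"contents of {url}"


def search_documentation(query: str) -> str:
    return f"results for {query}"


def recommend(url: str) -> str:
    return f"related pages for {url}"


def delete_cache() -> str:  # hypothetical tool the agent should NOT get
    return "cache deleted"


ALL_TOOLS: dict[str, Callable] = {
    "read_documentation": read_documentation,
    "search_documentation": search_documentation,
    "recommend": recommend,
    "delete_cache": delete_cache,
}


def filter_tools(tools: dict[str, Callable], allowed: set[str]) -> dict[str, Callable]:
    """Keep only the allowed tools; fail loudly on unknown names."""
    missing = allowed - tools.keys()
    if missing:
        raise KeyError(f"unknown tools requested: {sorted(missing)}")
    return {name: func for name, func in tools.items() if name in allowed}


agent_tools = filter_tools(ALL_TOOLS, {"read_documentation", "search_documentation"})
print(sorted(agent_tools))
```

The agent only ever sees the two documentation tools, so there is less for the model to choose from and nothing destructive in reach.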

Building an AI News Research Assistant with Bright Data + Vercel @aisdk

It scrapes global news, bypasses paywalls, detects bias, and gives smart summaries, all in one workflow.

Think of it as your personal AI journalist, built from scratch.

Read the full breakdown 👇

Video coming soon!

@memorilab Memori takes a different path:

1. Dual memory (short + long term)

2. Intelligent promotion of what matters

3. Relational DBs for structure, joins, and indexing

Agents that actually remember 👇
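Point 3 is the part that's easy to picture in code. Here's a small sqlite3 sketch of what "structure, joins, and indexing" can buy you. It's an assumed schema for illustration, not Memori's real one: the `entities` and `facts` tables, the index name, and the sample rows are all made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE entities (id INTEGER PRIMARY KEY, name TEXT UNIQUE);
    CREATE TABLE facts (
        id INTEGER PRIMARY KEY,
        entity_id INTEGER REFERENCES entities(id),
        fact TEXT
    );
    -- Index so lookups by entity stay fast as the facts table grows.
    CREATE INDEX idx_facts_entity ON facts(entity_id);
    """
)

conn.executemany(
    "INSERT INTO entities (id, name) VALUES (?, ?)",
    [(1, "Alice"), (2, "Bob")],
)
conn.executemany(
    "INSERT INTO facts (entity_id, fact) VALUES (?, ?)",
    [(1, "prefers Python"), (1, "works on search"), (2, "uses Rust")],
)


def facts_about(name: str) -> list[str]:
    """Join facts to their entity and return them in insertion order."""
    rows = conn.execute(
        "SELECT f.fact FROM facts AS f "
        "JOIN entities AS e ON e.id = f.entity_id "
        "WHERE e.name = ? ORDER BY f.id",
        (name,),
    )
    return [row[0] for row in rows]


alice_facts = facts_about("Alice")
print(alice_facts)
```

Recalling everything known about one entity is a single indexed join, which is the kind of query a relational store handles well.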

Will share a detailed guide soon!

With Structured Output, you can:

- Get well-defined responses for any task

- Use invoke_async for async execution

- Simplify downstream parsing and logic

Here's an Example👇
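As a library-free illustration of the pattern, here's a sketch that parses a model's JSON reply into a typed object from an async call. It is not the SDK's structured output API: `fake_model_async` is a stand-in that returns canned JSON, and `PaperSummary` and its fields are assumptions for the example.

```python
import asyncio
import json
from dataclasses import dataclass


@dataclass
class PaperSummary:
    title: str
    year: int
    keywords: list[str]


async def fake_model_async(prompt: str) -> str:
    """Stand-in for an async model call; always returns the same JSON."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return json.dumps(
        {"title": "Example Paper", "year": 2024, "keywords": ["agents", "memory"]}
    )


def parse_summary(raw: str) -> PaperSummary:
    """Turn raw JSON into a PaperSummary, rejecting obviously wrong types."""
    summary = PaperSummary(**json.loads(raw))
    if not isinstance(summary.year, int):
        raise TypeError("year must be an integer")
    return summary


async def summarize(prompt: str) -> PaperSummary:
    return parse_summary(await fake_model_async(prompt))


result = asyncio.run(summarize("Summarize this paper"))
print(result.title, result.year, result.keywords)
```

Downstream code works with `result.year` and `result.keywords` directly instead of picking values out of free-form text.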

Not a huge number, and honestly, I haven’t been that consistent 😅

But the support from the community has been amazing!

Time to change gears and stay consistent from here on. Stay tuned!

I ran both on real projects:

- A Monkeytype-style Typing Game

- A 3D Solar System using Three.js

The results were... surprising 👀

Full breakdown + live test in my new video 🎥👇

www.youtube.com/watch?v=aFQ...

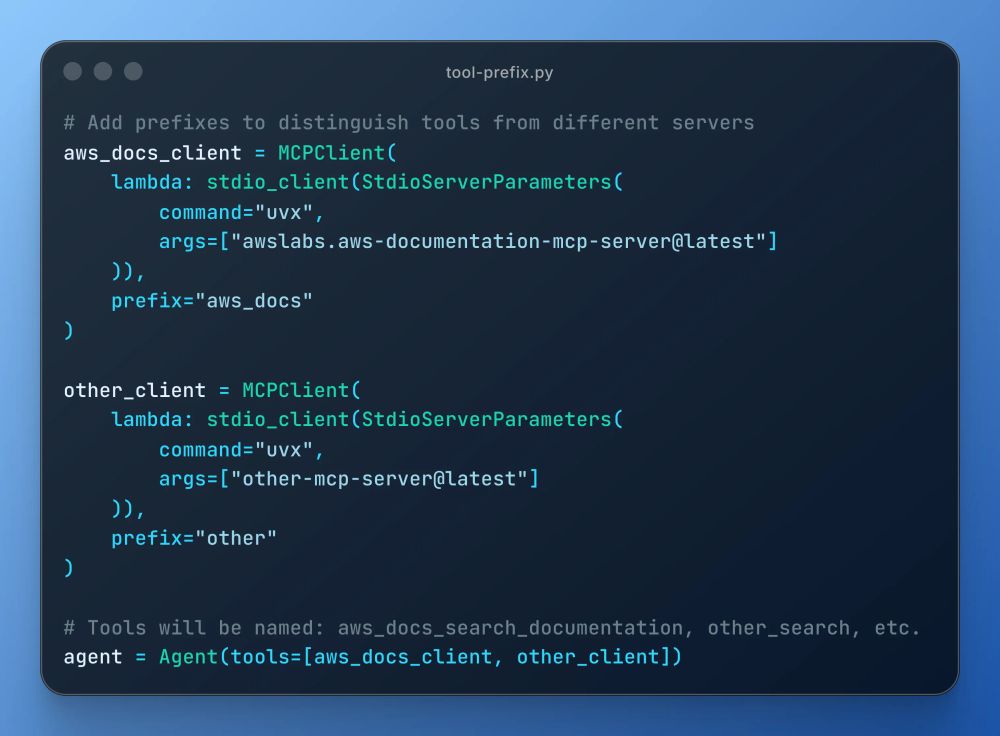

With Tool Name Prefixing, you can:

- Avoid name clashes across multiple MCP servers

- Keep tool namespaces organized

- Run complex setups without confusion

Example: prefix tools from each server to stay conflict-free 👇
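Here's the concept in plain Python, without the SDK. It isn't the real prefixing API: `prefix_tools`, the `docs` and `github` server names, and the stub `search` tools are hypothetical and only show why prefixes prevent clashes.

```python
from typing import Callable


def docs_search(query: str) -> str:
    return f"docs:{query}"


def github_search(query: str) -> str:
    return f"github:{query}"


# Two servers that both expose a tool called "search".
SERVER_TOOLS: dict[str, dict[str, Callable]] = {
    "docs": {"search": docs_search},
    "github": {"search": github_search},
}


def prefix_tools(server_tools: dict[str, dict[str, Callable]]) -> dict[str, Callable]:
    """Merge every server's tools into one registry, namespaced by server."""
    merged: dict[str, Callable] = {}
    for prefix, tools in server_tools.items():
        for name, func in tools.items():
            merged[f"{prefix}_{name}"] = func
    return merged


tools = prefix_tools(SERVER_TOOLS)
print(sorted(tools))
```

Without the prefix, the second `search` would silently overwrite the first in a flat registry; with it, both stay callable under distinct names.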

→ The LLM reads your prompt

→ picks the right tool

→ formats the request

→ gets results

→ decides the next step.

That’s how it thinks and acts autonomously.

Now imagine multiple LLMs doing this together, that’s a multi-agent system!
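The loop above can be sketched in a few lines of Python. To keep it runnable with no API key, `fake_llm` is a rule-based stand-in for a real model, and the `add` tool, the decision format, and `max_steps` are assumptions for illustration.

```python
from typing import Any, Callable


def add(a: int, b: int) -> int:
    return a + b


TOOLS: dict[str, Callable[..., Any]] = {"add": add}


def fake_llm(prompt: str, history: list[dict]) -> dict:
    """Stand-in for a model: ask for a tool first, then answer from its result."""
    if not history:
        # Picks the tool and formats the request.
        return {"tool": "add", "args": {"a": 2, "b": 3}}
    # Decides the next step: there is a result, so finish.
    return {"answer": f"The result is {history[-1]['result']}"}


def run_agent(prompt: str, max_steps: int = 5) -> str:
    history: list[dict] = []
    for _ in range(max_steps):
        decision = fake_llm(prompt, history)  # the model reads the prompt
        if "answer" in decision:
            return decision["answer"]
        tool = TOOLS[decision["tool"]]
        result = tool(**decision["args"])  # gets results
        history.append({"tool": decision["tool"], "result": result})
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is 2 + 3?")
print(answer)
```

A multi-agent system runs several of these loops, each with its own tools, and passes results between them.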

15 lines to define an agent with access to an MCP server.

I found it fun to work with and appreciated how simple it was to define functions.

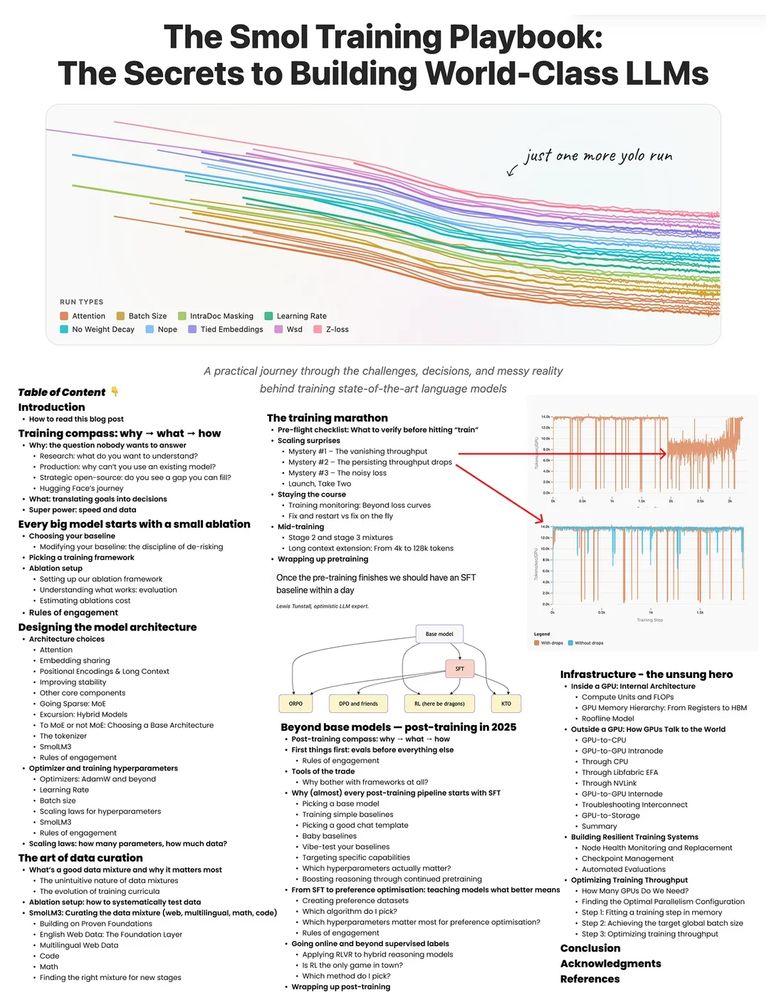

They just dropped a full playbook on how to train an LLM, covering pre-training, post-training, infra, and everything in between.

What worked, what didn’t, and how to make it run reliably.

Link to the Paper: huggingface.co/spaces/Hugg...

Honestly… this was bound to happen.

Every time I open Medium or Dev.to, it’s 90% AI slop. Same titles, same tone, same “Top 10 Tools” posts on repeat.

From just one video, it generated a lifelike talking avatar with real expressions, gestures, and perfect lip sync.

Honestly, it feels like the future of AI video creation.

Link in comments below:

Upload an image or PDF and instantly convert it into structured markdown using LLMs!

Here’s the stack I used:

- Nemotron-Nano-V2-12b on @nebiusaistudio

- @streamlit for the UI

Try it now 👇

x.com/Arindam_172...
